Mirror of https://github.com/router-for-me/CLIProxyAPI.git (synced 2026-02-05 14:00:52 +08:00)

Compare commits: 24 commits

| SHA1 |
|---|
| 7646a2b877 |
| 62090f2568 |
| c281f4cbaf |
| 09455f9e85 |
| c8e72ba0dc |
| 375ef252ab |
| ee552f8720 |
| 2e88c4858e |
| 3f50da85c1 |
| 8be06255f7 |
| 72274099aa |
| dcae098e23 |
| 2eb05ec640 |
| 3ce0d76aa4 |
| a00b79d9be |
| 33e53a2a56 |
| cd5b80785f |
| 54f71aa273 |
| 3f949b7f84 |
| 443c4538bb |
| a7fc2ee4cf |
| 8e749ac22d |
| 69e09d9bc7 |
| 671558a822 |

@@ -13,8 +13,6 @@ Dockerfile
docs/*
README.md
README_CN.md
MANAGEMENT_API.md
MANAGEMENT_API_CN.md
LICENSE

# Runtime data folders (should be mounted as volumes)
@@ -32,3 +30,4 @@ bin/*
.agent/*
.bmad/*
_bmad/*
_bmad-output/*


.gitignore

@@ -11,11 +11,15 @@ bin/*
logs/*
conv/*
temp/*
refs/*

# Storage backends
pgstore/*
gitstore/*
objectstore/*

# Static assets
static/*
refs/*

# Authentication data
auths/*
@@ -35,6 +39,7 @@ GEMINI.md
.agent/*
.bmad/*
_bmad/*
_bmad-output/*

# macOS
.DS_Store


@@ -10,11 +10,11 @@ So you can use local or multi-account CLI access with OpenAI(include Responses)/

|

||||

|

||||

## Sponsor

|

||||

|

||||

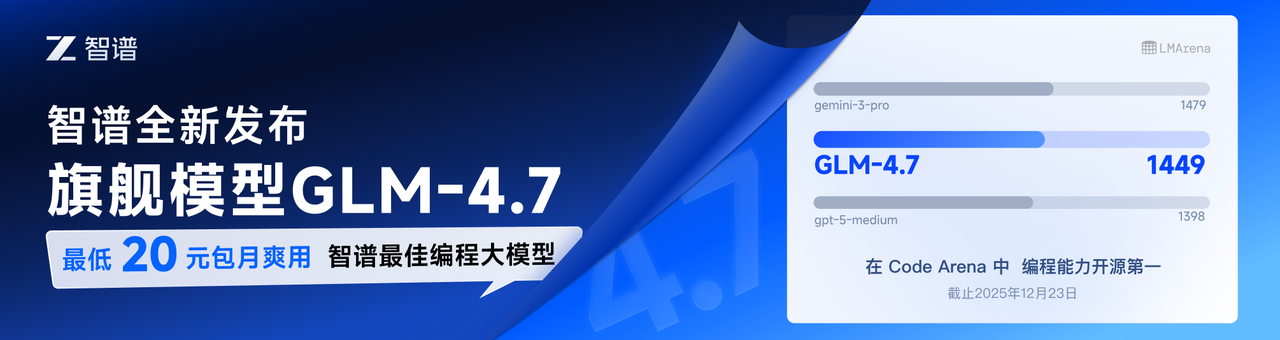

[](https://z.ai/subscribe?ic=8JVLJQFSKB)

|

||||

[](https://z.ai/subscribe?ic=8JVLJQFSKB)

|

||||

|

||||

This project is sponsored by Z.ai, supporting us with their GLM CODING PLAN.

|

||||

|

||||

GLM CODING PLAN is a subscription service designed for AI coding, starting at just $3/month. It provides access to their flagship GLM-4.6 model across 10+ popular AI coding tools (Claude Code, Cline, Roo Code, etc.), offering developers top-tier, fast, and stable coding experiences.

|

||||

GLM CODING PLAN is a subscription service designed for AI coding, starting at just $3/month. It provides access to their flagship GLM-4.7 model across 10+ popular AI coding tools (Claude Code, Cline, Roo Code, etc.), offering developers top-tier, fast, and stable coding experiences.

|

||||

|

||||

Get 10% OFF GLM CODING PLAN:https://z.ai/subscribe?ic=8JVLJQFSKB

|

||||

|

||||

@@ -114,6 +114,10 @@ CLI wrapper for instant switching between multiple Claude accounts and alternati

|

||||

|

||||

Native macOS GUI for managing CLIProxyAPI: configure providers, model mappings, and endpoints via OAuth - no API keys needed.

|

||||

|

||||

### [Quotio](https://github.com/nguyenphutrong/quotio)

|

||||

|

||||

Native macOS menu bar app that unifies Claude, Gemini, OpenAI, Qwen, and Antigravity subscriptions with real-time quota tracking and smart auto-failover for AI coding tools like Claude Code, OpenCode, and Droid - no API keys needed.

|

||||

|

||||

> [!NOTE]

|

||||

> If you developed a project based on CLIProxyAPI, please open a PR to add it to this list.

|

||||

|

||||

|

||||

@@ -10,11 +10,11 @@

## Sponsor

[](https://www.bigmodel.cn/claude-code?ic=RRVJPB5SII)

This project is sponsored by Zhipu (Z智谱), which provides technical support through its GLM CODING PLAN.

GLM CODING PLAN is a subscription plan built for AI coding, starting at just 20 CNY per month. It gives access to Zhipu's flagship model GLM-4.6 in more than ten popular AI coding tools such as Claude Code, Cline, and Roo Code, offering developers a top-tier coding experience.
GLM CODING PLAN is a subscription plan built for AI coding, starting at just 20 CNY per month. It gives access to Zhipu's flagship model GLM-4.7 in more than ten popular AI coding tools such as Claude Code, Cline, and Roo Code, offering developers a top-tier coding experience.

Zhipu AI offers a special discount for this software: purchase through the following link to get 10% off: https://www.bigmodel.cn/claude-code?ic=RRVJPB5SII

@@ -113,6 +113,10 @@ CLI wrapper for instantly switching between multiple Claude accounts via CLIProxyAPI OAuth

Native CLIProxyAPI GUI for macOS: configure providers, model mappings, and OAuth endpoints, no API keys required.

### [Quotio](https://github.com/nguyenphutrong/quotio)

Native macOS menu bar app that manages Claude, Gemini, OpenAI, Qwen, and Antigravity subscriptions in one place, with real-time quota tracking and smart automatic failover, supporting AI coding tools such as Claude Code, OpenCode, and Droid, no API keys required.

> [!NOTE]
> If you have developed a project based on CLIProxyAPI, please open a PR (pull request) to add it to this list.


@@ -39,6 +39,9 @@ api-keys:
# Enable debug logging
debug: false

# When true, disable high-overhead HTTP middleware features to reduce per-request memory usage under high concurrency.
commercial-mode: false

# When true, write application logs to rotating files instead of stdout
logging-to-file: false


@@ -1,12 +1,25 @@
package management

import (
"encoding/json"
"net/http"
"time"

"github.com/gin-gonic/gin"
"github.com/router-for-me/CLIProxyAPI/v6/internal/usage"
)

type usageExportPayload struct {
Version int `json:"version"`
ExportedAt time.Time `json:"exported_at"`
Usage usage.StatisticsSnapshot `json:"usage"`
}

type usageImportPayload struct {
Version int `json:"version"`
Usage usage.StatisticsSnapshot `json:"usage"`
}

// GetUsageStatistics returns the in-memory request statistics snapshot.
func (h *Handler) GetUsageStatistics(c *gin.Context) {
var snapshot usage.StatisticsSnapshot
@@ -18,3 +31,49 @@ func (h *Handler) GetUsageStatistics(c *gin.Context) {
"failed_requests": snapshot.FailureCount,
})
}

// ExportUsageStatistics returns a complete usage snapshot for backup/migration.
func (h *Handler) ExportUsageStatistics(c *gin.Context) {
var snapshot usage.StatisticsSnapshot
if h != nil && h.usageStats != nil {
snapshot = h.usageStats.Snapshot()
}
c.JSON(http.StatusOK, usageExportPayload{
Version: 1,
ExportedAt: time.Now().UTC(),
Usage: snapshot,
})
}

// ImportUsageStatistics merges a previously exported usage snapshot into memory.
func (h *Handler) ImportUsageStatistics(c *gin.Context) {
if h == nil || h.usageStats == nil {
c.JSON(http.StatusBadRequest, gin.H{"error": "usage statistics unavailable"})
return
}

data, err := c.GetRawData()
if err != nil {
c.JSON(http.StatusBadRequest, gin.H{"error": "failed to read request body"})
return
}

var payload usageImportPayload
if err := json.Unmarshal(data, &payload); err != nil {
c.JSON(http.StatusBadRequest, gin.H{"error": "invalid json"})
return
}
if payload.Version != 0 && payload.Version != 1 {
c.JSON(http.StatusBadRequest, gin.H{"error": "unsupported version"})
return
}

result := h.usageStats.MergeSnapshot(payload.Usage)
snapshot := h.usageStats.Snapshot()
c.JSON(http.StatusOK, gin.H{
"added": result.Added,
"skipped": result.Skipped,
"total_requests": snapshot.TotalRequests,
"failed_requests": snapshot.FailureCount,
})
}

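The export and import handlers wrap the snapshot in a small versioned envelope. The Go sketch below round-trips such an envelope using only `encoding/json`. `snapshot` is a hypothetical stand-in for `usage.StatisticsSnapshot`, whose real fields and JSON names are not shown in this diff. `decodeImport` reproduces the handler's two checks: malformed JSON is rejected, and only version 0 (field omitted) or version 1 is accepted.

```go
package main

import (
	"encoding/json"
	"errors"
	"time"
)

// snapshot is a hypothetical stand-in for usage.StatisticsSnapshot.
type snapshot struct {
	TotalRequests int64 `json:"total_requests"`
	FailureCount  int64 `json:"failure_count"`
}

// exportPayload mirrors usageExportPayload from the diff.
type exportPayload struct {
	Version    int       `json:"version"`
	ExportedAt time.Time `json:"exported_at"`
	Usage      snapshot  `json:"usage"`
}

// importPayload mirrors usageImportPayload: exported_at is not read back.
type importPayload struct {
	Version int      `json:"version"`
	Usage   snapshot `json:"usage"`
}

// decodeImport applies the same validation as ImportUsageStatistics.
func decodeImport(data []byte) (snapshot, error) {
	var p importPayload
	if err := json.Unmarshal(data, &p); err != nil {
		return snapshot{}, errors.New("invalid json")
	}
	if p.Version != 0 && p.Version != 1 {
		return snapshot{}, errors.New("unsupported version")
	}
	return p.Usage, nil
}

// roundTrip exports a snapshot as version 1 and feeds the bytes back through decodeImport.
func roundTrip(s snapshot) (snapshot, error) {
	data, err := json.Marshal(exportPayload{Version: 1, ExportedAt: time.Now().UTC(), Usage: s})
	if err != nil {
		return snapshot{}, err
	}
	return decodeImport(data)
}
```

Because version 0 is accepted, a payload without a `version` field still imports. The extra `exported_at` field is ignored on import because `encoding/json` skips unknown fields by default.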

@@ -209,13 +209,15 @@ func NewServer(cfg *config.Config, authManager *auth.Manager, accessManager *sdk
// Resolve logs directory relative to the configuration file directory.
var requestLogger logging.RequestLogger
var toggle func(bool)
if optionState.requestLoggerFactory != nil {
requestLogger = optionState.requestLoggerFactory(cfg, configFilePath)
}
if requestLogger != nil {
engine.Use(middleware.RequestLoggingMiddleware(requestLogger))
if setter, ok := requestLogger.(interface{ SetEnabled(bool) }); ok {
toggle = setter.SetEnabled
if !cfg.CommercialMode {
if optionState.requestLoggerFactory != nil {
requestLogger = optionState.requestLoggerFactory(cfg, configFilePath)
}
if requestLogger != nil {
engine.Use(middleware.RequestLoggingMiddleware(requestLogger))
if setter, ok := requestLogger.(interface{ SetEnabled(bool) }); ok {
toggle = setter.SetEnabled
}
}
}


@@ -474,6 +476,8 @@ func (s *Server) registerManagementRoutes() {
mgmt.Use(s.managementAvailabilityMiddleware(), s.mgmt.Middleware())
{
mgmt.GET("/usage", s.mgmt.GetUsageStatistics)
mgmt.GET("/usage/export", s.mgmt.ExportUsageStatistics)
mgmt.POST("/usage/import", s.mgmt.ImportUsageStatistics)
mgmt.GET("/config", s.mgmt.GetConfig)
mgmt.GET("/config.yaml", s.mgmt.GetConfigYAML)
mgmt.PUT("/config.yaml", s.mgmt.PutConfigYAML)

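With these routes registered, usage statistics can be moved between two instances: export from one, then post the same body to the other. Below is a minimal client sketch. The paths `/usage/export` and `/usage/import` come from the route registrations above and are relative to the management group. The group's URL prefix and the credentials checked by `s.mgmt.Middleware()` do not appear in this diff, so `srcBase` and `dstBase` are supplied by the caller and authentication is left out. `newStubServer` is a hypothetical local stand-in used only to exercise the client.

```go
package main

import (
	"bytes"
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
)

// migrateUsage copies usage statistics between instances:
// GET {srcBase}/usage/export, then POST that body to {dstBase}/usage/import.
// It returns the import response body.
func migrateUsage(client *http.Client, srcBase, dstBase string) ([]byte, error) {
	resp, err := client.Get(srcBase + "/usage/export")
	if err != nil {
		return nil, err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return nil, fmt.Errorf("export failed: %s", resp.Status)
	}
	payload, err := io.ReadAll(resp.Body)
	if err != nil {
		return nil, err
	}

	importResp, err := client.Post(dstBase+"/usage/import", "application/json", bytes.NewReader(payload))
	if err != nil {
		return nil, err
	}
	defer importResp.Body.Close()
	body, err := io.ReadAll(importResp.Body)
	if err != nil {
		return nil, err
	}
	if importResp.StatusCode != http.StatusOK {
		return nil, fmt.Errorf("import failed: %s: %s", importResp.Status, body)
	}
	return body, nil
}

// newStubServer is a hypothetical stand-in for an instance: it serves a fixed
// export and acknowledges imports. It exists only for local testing.
func newStubServer() *httptest.Server {
	mux := http.NewServeMux()
	mux.HandleFunc("/usage/export", func(w http.ResponseWriter, r *http.Request) {
		w.Header().Set("Content-Type", "application/json")
		io.WriteString(w, `{"version":1,"usage":{}}`)
	})
	mux.HandleFunc("/usage/import", func(w http.ResponseWriter, r *http.Request) {
		if r.Method != http.MethodPost {
			http.Error(w, "method not allowed", http.StatusMethodNotAllowed)
			return
		}
		w.Header().Set("Content-Type", "application/json")
		io.WriteString(w, `{"added":0,"skipped":0}`)
	})
	return httptest.NewServer(mux)
}
```

Against a real deployment, the response from the import call carries the `added`, `skipped`, `total_requests`, and `failed_requests` fields set by `ImportUsageStatistics`.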

@@ -39,6 +39,9 @@ type Config struct {
// Debug enables or disables debug-level logging and other debug features.
Debug bool `yaml:"debug" json:"debug"`

// CommercialMode disables high-overhead HTTP middleware features to minimize per-request memory usage.
CommercialMode bool `yaml:"commercial-mode" json:"commercial-mode"`

// LoggingToFile controls whether application logs are written to rotating files or stdout.
LoggingToFile bool `yaml:"logging-to-file" json:"logging-to-file"`

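These three `Config` fields use the same key names in their YAML and JSON tags. One quick way to check how a config document maps onto them is therefore to decode it with `encoding/json`. The sketch below mirrors only these three fields; `flags`, `parseFlags`, and `requestLoggingEnabled` are illustrative names. `requestLoggingEnabled` restates the `NewServer` change above: request logging is only considered when commercial mode is off and a logger factory is set.

```go
package main

import "encoding/json"

// flags mirrors only the three Config fields touched by this diff, using the
// same json tags; every other Config field is omitted.
type flags struct {
	Debug          bool `json:"debug"`
	CommercialMode bool `json:"commercial-mode"`
	LoggingToFile  bool `json:"logging-to-file"`
}

// parseFlags decodes a JSON config document; unknown keys are ignored.
func parseFlags(raw []byte) (flags, error) {
	var f flags
	err := json.Unmarshal(raw, &f)
	return f, err
}

// requestLoggingEnabled restates the NewServer condition: the request-logging
// middleware is only set up when commercial mode is off and a logger factory
// is configured (hasFactory).
func requestLoggingEnabled(f flags, hasFactory bool) bool {
	return !f.CommercialMode && hasFactory
}
```

All three fields default to `false`, so commercial mode and file logging must both be opted into explicitly.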

@@ -814,8 +817,8 @@ func getOrCreateMapValue(mapNode *yaml.Node, key string) *yaml.Node {
}

// mergeMappingPreserve merges keys from src into dst mapping node while preserving
// key order and comments of existing keys in dst. Unknown keys from src are appended
// to dst at the end, copying their node structure from src.
// key order and comments of existing keys in dst. New keys are only added if their
// value is non-zero to avoid polluting the config with defaults.
func mergeMappingPreserve(dst, src *yaml.Node) {
if dst == nil || src == nil {
return
@@ -826,20 +829,19 @@ func mergeMappingPreserve(dst, src *yaml.Node) {
copyNodeShallow(dst, src)
return
}
// Build a lookup of existing keys in dst
for i := 0; i+1 < len(src.Content); i += 2 {
sk := src.Content[i]
sv := src.Content[i+1]
idx := findMapKeyIndex(dst, sk.Value)
if idx >= 0 {
// Merge into existing value node
// Merge into existing value node (always update, even to zero values)
dv := dst.Content[idx+1]
mergeNodePreserve(dv, sv)
} else {
if shouldSkipEmptyCollectionOnPersist(sk.Value, sv) {
// New key: only add if value is non-zero to avoid polluting config with defaults
if isZeroValueNode(sv) {
continue
}
// Append new key/value pair by deep-copying from src
dst.Content = append(dst.Content, deepCopyNode(sk), deepCopyNode(sv))
}
}
@@ -922,32 +924,49 @@ func findMapKeyIndex(mapNode *yaml.Node, key string) int {
return -1
}

func shouldSkipEmptyCollectionOnPersist(key string, node *yaml.Node) bool {
switch key {
case "generative-language-api-key",
"gemini-api-key",
"vertex-api-key",
"claude-api-key",
"codex-api-key",
"openai-compatibility":
return isEmptyCollectionNode(node)
default:
return false
}
}

func isEmptyCollectionNode(node *yaml.Node) bool {
// isZeroValueNode returns true if the YAML node represents a zero/default value
// that should not be written as a new key to preserve config cleanliness.
// For mappings and sequences, recursively checks if all children are zero values.
func isZeroValueNode(node *yaml.Node) bool {
if node == nil {
return true
}
switch node.Kind {
case yaml.SequenceNode:
return len(node.Content) == 0
case yaml.ScalarNode:
return node.Tag == "!!null"
default:
return false
switch node.Tag {
case "!!bool":
return node.Value == "false"
case "!!int", "!!float":
return node.Value == "0" || node.Value == "0.0"
case "!!str":
return node.Value == ""
case "!!null":
return true
}
case yaml.SequenceNode:
if len(node.Content) == 0 {
return true
}
// Check if all elements are zero values
for _, child := range node.Content {
if !isZeroValueNode(child) {
return false
}
}
return true
case yaml.MappingNode:
if len(node.Content) == 0 {
return true
}
// Check if all values are zero values (values are at odd indices)
for i := 1; i < len(node.Content); i += 2 {
if !isZeroValueNode(node.Content[i]) {
return false
}
}
return true
}
return false
}

// deepCopyNode creates a deep copy of a yaml.Node graph.

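The new `isZeroValueNode` rule decides which new keys are worth persisting. A scalar counts as zero if it is `false`, `0`, `0.0`, `""`, or null, and a collection counts as zero if it is empty or all of its children are zero. The sketch below applies the same rule to values decoded by `encoding/json` rather than to `*yaml.Node`; the swap keeps it standard-library only. There are two simplifications. First, numbers are compared numerically instead of by their `"0"`/`"0.0"` text. Second, `mergeNew` replaces existing keys outright, whereas the real code recurses through `mergeNodePreserve` and keeps key order and comments.

```go
package main

// isZeroValue mirrors the isZeroValueNode rules for values produced by
// decoding JSON into `any` (nil, bool, float64, string, []any, map[string]any).
func isZeroValue(v any) bool {
	switch t := v.(type) {
	case nil:
		return true
	case bool:
		return !t
	case float64:
		return t == 0
	case string:
		return t == ""
	case []any:
		// Empty slices fall through the loop and count as zero.
		for _, child := range t {
			if !isZeroValue(child) {
				return false
			}
		}
		return true
	case map[string]any:
		// Only values are inspected, like the odd-index walk over MappingNode.Content.
		for _, child := range t {
			if !isZeroValue(child) {
				return false
			}
		}
		return true
	default:
		return false
	}
}

// mergeNew updates keys dst already has (even to zero values) and adds new
// keys only when their value is non-zero.
func mergeNew(dst, src map[string]any) {
	for k, sv := range src {
		if _, exists := dst[k]; exists {
			dst[k] = sv
			continue
		}
		if isZeroValue(sv) {
			continue
		}
		dst[k] = sv
	}
}
```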

@@ -30,13 +30,13 @@ type SDKConfig struct {
// StreamingConfig holds server streaming behavior configuration.
type StreamingConfig struct {
// KeepAliveSeconds controls how often the server emits SSE heartbeats (": keep-alive\n\n").
// nil means default (15 seconds). <= 0 disables keep-alives.
KeepAliveSeconds *int `yaml:"keepalive-seconds,omitempty" json:"keepalive-seconds,omitempty"`
// <= 0 disables keep-alives. Default is 0.
KeepAliveSeconds int `yaml:"keepalive-seconds,omitempty" json:"keepalive-seconds,omitempty"`

// BootstrapRetries controls how many times the server may retry a streaming request before any bytes are sent,
// to allow auth rotation / transient recovery.
// nil means default (2). 0 disables bootstrap retries.
BootstrapRetries *int `yaml:"bootstrap-retries,omitempty" json:"bootstrap-retries,omitempty"`
// <= 0 disables bootstrap retries. Default is 0.
BootstrapRetries int `yaml:"bootstrap-retries,omitempty" json:"bootstrap-retries,omitempty"`
}

// AccessConfig groups request authentication providers.


@@ -19,7 +19,7 @@ type usageReporter struct {
provider string
model string
authID string
authIndex uint64
authIndex string
apiKey string
source string
requestedAt time.Time

@@ -482,12 +482,16 @@ func StripUsageMetadataFromJSON(rawJSON []byte) ([]byte, bool) {
cleaned := jsonBytes
var changed bool

if gjson.GetBytes(cleaned, "usageMetadata").Exists() {
if usageMetadata = gjson.GetBytes(cleaned, "usageMetadata"); usageMetadata.Exists() {
// Rename usageMetadata to cpaUsageMetadata in the message_start event of Claude
cleaned, _ = sjson.SetRawBytes(cleaned, "cpaUsageMetadata", []byte(usageMetadata.Raw))
cleaned, _ = sjson.DeleteBytes(cleaned, "usageMetadata")
changed = true
}

if gjson.GetBytes(cleaned, "response.usageMetadata").Exists() {
if usageMetadata = gjson.GetBytes(cleaned, "response.usageMetadata"); usageMetadata.Exists() {
// Rename usageMetadata to cpaUsageMetadata in the message_start event of Claude
cleaned, _ = sjson.SetRawBytes(cleaned, "response.cpaUsageMetadata", []byte(usageMetadata.Raw))
cleaned, _ = sjson.DeleteBytes(cleaned, "response.usageMetadata")
changed = true
}

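`StripUsageMetadataFromJSON` now renames `usageMetadata` to `cpaUsageMetadata` (at the top level and under `response`) instead of deleting it, so the Claude translator can still read token counts. A standard-library sketch of that rename follows. It is not the project's gjson/sjson code, and because it re-encodes through Go maps, keys come out sorted rather than in their original order.

```go
package main

import "encoding/json"

// renameUsageMetadata moves "usageMetadata" to "cpaUsageMetadata" at the top
// level and inside "response", reporting whether anything changed.
func renameUsageMetadata(raw []byte) ([]byte, bool, error) {
	var root map[string]json.RawMessage
	if err := json.Unmarshal(raw, &root); err != nil {
		return raw, false, err
	}
	changed := renameKey(root, "usageMetadata", "cpaUsageMetadata")

	if resp, ok := root["response"]; ok {
		var inner map[string]json.RawMessage
		// A non-object "response" fails to decode and is left untouched.
		if err := json.Unmarshal(resp, &inner); err == nil && renameKey(inner, "usageMetadata", "cpaUsageMetadata") {
			encoded, err := json.Marshal(inner)
			if err != nil {
				return raw, false, err
			}
			root["response"] = encoded
			changed = true
		}
	}
	if !changed {
		return raw, false, nil
	}
	out, err := json.Marshal(root)
	if err != nil {
		return raw, false, err
	}
	return out, true, nil
}

// renameKey moves m[from] to m[to] if present and reports whether it did.
func renameKey(m map[string]json.RawMessage, from, to string) bool {
	v, ok := m[from]
	if !ok {
		return false
	}
	m[to] = v
	delete(m, from)
	return true
}
```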

@@ -99,6 +99,14 @@ func ConvertAntigravityResponseToClaude(_ context.Context, _ string, originalReq
// This follows the Claude Code API specification for streaming message initialization
messageStartTemplate := `{"type": "message_start", "message": {"id": "msg_1nZdL29xx5MUA1yADyHTEsnR8uuvGzszyY", "type": "message", "role": "assistant", "content": [], "model": "claude-3-5-sonnet-20241022", "stop_reason": null, "stop_sequence": null, "usage": {"input_tokens": 0, "output_tokens": 0}}}`

// Use cpaUsageMetadata within the message_start event for Claude.
if promptTokenCount := gjson.GetBytes(rawJSON, "response.cpaUsageMetadata.promptTokenCount"); promptTokenCount.Exists() {
messageStartTemplate, _ = sjson.Set(messageStartTemplate, "message.usage.input_tokens", promptTokenCount.Int())
}
if candidatesTokenCount := gjson.GetBytes(rawJSON, "response.cpaUsageMetadata.candidatesTokenCount"); candidatesTokenCount.Exists() {
messageStartTemplate, _ = sjson.Set(messageStartTemplate, "message.usage.output_tokens", candidatesTokenCount.Int())
}

// Override default values with actual response metadata if available from the Gemini CLI response
if modelVersionResult := gjson.GetBytes(rawJSON, "response.modelVersion"); modelVersionResult.Exists() {
messageStartTemplate, _ = sjson.Set(messageStartTemplate, "message.model", modelVersionResult.String())

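The `message_start` template now takes its `input_tokens` and `output_tokens` from `response.cpaUsageMetadata` when present and keeps the zero defaults otherwise. The sketch below expresses the same rule with typed structs. The struct shapes are assumptions that cover only the fields this hunk reads and writes.

```go
package main

import "encoding/json"

// chunk models only the fields read from an upstream stream chunk.
type chunk struct {
	Response struct {
		ModelVersion     string `json:"modelVersion"`
		CpaUsageMetadata *struct {
			PromptTokenCount     *int64 `json:"promptTokenCount"`
			CandidatesTokenCount *int64 `json:"candidatesTokenCount"`
		} `json:"cpaUsageMetadata"`
	} `json:"response"`
}

type usage struct {
	InputTokens  int64 `json:"input_tokens"`
	OutputTokens int64 `json:"output_tokens"`
}

type messageStart struct {
	Type    string `json:"type"`
	Message struct {
		Model string `json:"model"`
		Usage usage  `json:"usage"`
	} `json:"message"`
}

// buildMessageStart fills message_start usage from response.cpaUsageMetadata,
// keeping zero counts and defaultModel when the corresponding fields are absent.
func buildMessageStart(raw []byte, defaultModel string) (messageStart, error) {
	ms := messageStart{Type: "message_start"}
	ms.Message.Model = defaultModel
	var c chunk
	if err := json.Unmarshal(raw, &c); err != nil {
		return ms, err
	}
	if m := c.Response.CpaUsageMetadata; m != nil {
		if m.PromptTokenCount != nil {
			ms.Message.Usage.InputTokens = *m.PromptTokenCount
		}
		if m.CandidatesTokenCount != nil {
			ms.Message.Usage.OutputTokens = *m.CandidatesTokenCount
		}
	}
	if c.Response.ModelVersion != "" {
		ms.Message.Model = c.Response.ModelVersion
	}
	return ms, nil
}
```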

@@ -247,10 +247,30 @@ func ConvertOpenAIRequestToAntigravity(modelName string, inputRawJSON []byte, _
} else if role == "assistant" {
node := []byte(`{"role":"model","parts":[]}`)
p := 0
if content.Type == gjson.String {
if content.Type == gjson.String && content.String() != "" {
node, _ = sjson.SetBytes(node, "parts.-1.text", content.String())
out, _ = sjson.SetRawBytes(out, "request.contents.-1", node)
p++
} else if content.IsArray() {
// Assistant multimodal content (e.g. text + image) -> single model content with parts
for _, item := range content.Array() {
switch item.Get("type").String() {
case "text":
p++
case "image_url":
// If the assistant returned an inline data URL, preserve it for history fidelity.
imageURL := item.Get("image_url.url").String()
if len(imageURL) > 5 { // expect data:...
pieces := strings.SplitN(imageURL[5:], ";", 2)
if len(pieces) == 2 && len(pieces[1]) > 7 {
mime := pieces[0]
data := pieces[1][7:]
node, _ = sjson.SetBytes(node, "parts."+itoa(p)+".inlineData.mime_type", mime)
node, _ = sjson.SetBytes(node, "parts."+itoa(p)+".inlineData.data", data)
p++
}
}
}
}
}

// Tool calls -> single model content with functionCall parts
@@ -305,6 +325,8 @@ func ConvertOpenAIRequestToAntigravity(modelName string, inputRawJSON []byte, _
if pp > 0 {
out, _ = sjson.SetRawBytes(out, "request.contents.-1", toolNode)
}
} else {
out, _ = sjson.SetRawBytes(out, "request.contents.-1", node)
}
}
}

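For assistant `image_url` parts, the converter expects a URL of the form `data:<mime>;base64,<payload>`. It slices that URL at fixed offsets (`imageURL[5:]`, then `pieces[1][7:]`) without checking the `data:` and `base64,` markers. The sketch below parses the same shape but checks both markers explicitly. It returns `ok == false` for anything else, such as a plain `https://` URL or a data URL with extra parameters. `parseDataURL` and `inlineDataPart` are illustrative names, and `strings.CutPrefix` requires Go 1.20 or later.

```go
package main

import "strings"

// parseDataURL splits "data:<mime>;base64,<payload>" into its MIME type and
// base64 payload, returning ok=false when either marker is missing.
func parseDataURL(u string) (mime, data string, ok bool) {
	rest, found := strings.CutPrefix(u, "data:")
	if !found {
		return "", "", false
	}
	mime, params, found := strings.Cut(rest, ";")
	if !found {
		return "", "", false
	}
	data, found = strings.CutPrefix(params, "base64,")
	if !found || data == "" {
		return "", "", false
	}
	return mime, data, true
}

// inlineDataPart builds the part shape the converters write into "parts":
// {"inlineData": {"mime_type": ..., "data": ...}}.
func inlineDataPart(u string) (map[string]any, bool) {
	mime, data, ok := parseDataURL(u)
	if !ok {
		return nil, false
	}
	return map[string]any{"inlineData": map[string]any{"mime_type": mime, "data": data}}, true
}
```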

@@ -181,12 +181,14 @@ func ConvertAntigravityResponseToOpenAI(_ context.Context, _ string, originalReq
mimeType = "image/png"
}
imageURL := fmt.Sprintf("data:%s;base64,%s", mimeType, data)
imagePayload := `{"image_url":{"url":""},"type":"image_url"}`
imagePayload, _ = sjson.Set(imagePayload, "image_url.url", imageURL)
imagesResult := gjson.Get(template, "choices.0.delta.images")
if !imagesResult.Exists() || !imagesResult.IsArray() {
template, _ = sjson.SetRaw(template, "choices.0.delta.images", `[]`)
}
imageIndex := len(gjson.Get(template, "choices.0.delta.images").Array())
imagePayload := `{"type":"image_url","image_url":{"url":""}}`
imagePayload, _ = sjson.Set(imagePayload, "index", imageIndex)
imagePayload, _ = sjson.Set(imagePayload, "image_url.url", imageURL)
template, _ = sjson.Set(template, "choices.0.delta.role", "assistant")
template, _ = sjson.SetRaw(template, "choices.0.delta.images.-1", imagePayload)
}

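Each inline image from the upstream response is now appended to `choices.0.delta.images` with an `index` equal to the number of images already present. The converter also sets the delta's role to `assistant`. A typed sketch of that bookkeeping follows. The struct names are hypothetical, and the `image/png` fallback is assumed to apply when no MIME type was supplied; the condition guarding it is not shown in the diff.

```go
package main

import "fmt"

type imageURL struct {
	URL string `json:"url"`
}

// imageEntry mirrors the payload shape built in the diff.
type imageEntry struct {
	Type     string   `json:"type"`
	ImageURL imageURL `json:"image_url"`
	Index    int      `json:"index"`
}

type delta struct {
	Role   string       `json:"role,omitempty"`
	Images []imageEntry `json:"images,omitempty"`
}

// appendImage wraps already-base64 image data in a data URL and appends it
// with an index equal to the number of images present before the append.
func appendImage(d *delta, mimeType, b64 string) {
	if mimeType == "" {
		mimeType = "image/png" // assumed trigger for the diff's fallback
	}
	d.Role = "assistant"
	d.Images = append(d.Images, imageEntry{
		Type:     "image_url",
		ImageURL: imageURL{URL: fmt.Sprintf("data:%s;base64,%s", mimeType, b64)},
		Index:    len(d.Images),
	})
}
```

The non-streaming Gemini path further down applies the same logic to `choices.0.message.images` instead of `choices.0.delta.images`.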

@@ -205,9 +205,12 @@ func ConvertClaudeResponseToOpenAI(_ context.Context, modelName string, original
if usage := root.Get("usage"); usage.Exists() {
inputTokens := usage.Get("input_tokens").Int()
outputTokens := usage.Get("output_tokens").Int()
template, _ = sjson.Set(template, "usage.prompt_tokens", inputTokens)
cacheReadInputTokens := usage.Get("cache_read_input_tokens").Int()
cacheCreationInputTokens := usage.Get("cache_creation_input_tokens").Int()
template, _ = sjson.Set(template, "usage.prompt_tokens", inputTokens+cacheCreationInputTokens)
template, _ = sjson.Set(template, "usage.completion_tokens", outputTokens)
template, _ = sjson.Set(template, "usage.total_tokens", inputTokens+outputTokens)
template, _ = sjson.Set(template, "usage.prompt_tokens_details.cached_tokens", cacheReadInputTokens)
}
return []string{template}

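The usage mapping in this hunk counts cache-creation tokens toward `prompt_tokens` and reports cache reads under `prompt_tokens_details.cached_tokens`. The sketch below restates that arithmetic with typed structs; the struct and function names are illustrative. As written, `total_tokens` is `input_tokens + output_tokens` and leaves out the cache-creation tokens, so it can be smaller than `prompt_tokens + completion_tokens`. The sketch keeps that behavior rather than correcting it.

```go
package main

// claudeUsage holds the Anthropic usage fields read in the diff.
type claudeUsage struct {
	InputTokens              int64 `json:"input_tokens"`
	OutputTokens             int64 `json:"output_tokens"`
	CacheReadInputTokens     int64 `json:"cache_read_input_tokens"`
	CacheCreationInputTokens int64 `json:"cache_creation_input_tokens"`
}

type openAIUsage struct {
	PromptTokens        int64 `json:"prompt_tokens"`
	CompletionTokens    int64 `json:"completion_tokens"`
	TotalTokens         int64 `json:"total_tokens"`
	PromptTokensDetails struct {
		CachedTokens int64 `json:"cached_tokens"`
	} `json:"prompt_tokens_details"`
}

// toOpenAIUsage applies the mapping from the new ConvertClaudeResponseToOpenAI code.
func toOpenAIUsage(u claudeUsage) openAIUsage {
	var out openAIUsage
	out.PromptTokens = u.InputTokens + u.CacheCreationInputTokens
	out.CompletionTokens = u.OutputTokens
	// Mirrors the diff: cache-creation tokens are not part of the total.
	out.TotalTokens = u.InputTokens + u.OutputTokens
	out.PromptTokensDetails.CachedTokens = u.CacheReadInputTokens
	return out
}
```

For example, 100 input, 20 output, 50 cache-read and 10 cache-creation tokens map to 110 prompt, 20 completion, 120 total and 50 cached tokens.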

@@ -281,8 +284,6 @@ func ConvertClaudeResponseToOpenAINonStream(_ context.Context, _ string, origina
var messageID string
var model string
var createdAt int64
var inputTokens, outputTokens int64
var reasoningTokens int64
var stopReason string
var contentParts []string
var reasoningParts []string
@@ -299,9 +300,6 @@ func ConvertClaudeResponseToOpenAINonStream(_ context.Context, _ string, origina
messageID = message.Get("id").String()
model = message.Get("model").String()
createdAt = time.Now().Unix()
if usage := message.Get("usage"); usage.Exists() {
inputTokens = usage.Get("input_tokens").Int()
}
}

case "content_block_start":
@@ -364,11 +362,14 @@ func ConvertClaudeResponseToOpenAINonStream(_ context.Context, _ string, origina
}
}
if usage := root.Get("usage"); usage.Exists() {
outputTokens = usage.Get("output_tokens").Int()
// Estimate reasoning tokens from accumulated thinking content
if len(reasoningParts) > 0 {
reasoningTokens = int64(len(strings.Join(reasoningParts, "")) / 4) // Rough estimation
}
inputTokens := usage.Get("input_tokens").Int()
outputTokens := usage.Get("output_tokens").Int()
cacheReadInputTokens := usage.Get("cache_read_input_tokens").Int()
cacheCreationInputTokens := usage.Get("cache_creation_input_tokens").Int()
out, _ = sjson.Set(out, "usage.prompt_tokens", inputTokens+cacheCreationInputTokens)
out, _ = sjson.Set(out, "usage.completion_tokens", outputTokens)
out, _ = sjson.Set(out, "usage.total_tokens", inputTokens+outputTokens)
out, _ = sjson.Set(out, "usage.prompt_tokens_details.cached_tokens", cacheReadInputTokens)
}
}
}
@@ -427,16 +428,5 @@ func ConvertClaudeResponseToOpenAINonStream(_ context.Context, _ string, origina
out, _ = sjson.Set(out, "choices.0.finish_reason", mapAnthropicStopReasonToOpenAI(stopReason))
}

// Set usage information including prompt tokens, completion tokens, and total tokens
totalTokens := inputTokens + outputTokens
out, _ = sjson.Set(out, "usage.prompt_tokens", inputTokens)
out, _ = sjson.Set(out, "usage.completion_tokens", outputTokens)
out, _ = sjson.Set(out, "usage.total_tokens", totalTokens)

// Add reasoning tokens to usage details if any reasoning content was processed
if reasoningTokens > 0 {
out, _ = sjson.Set(out, "usage.completion_tokens_details.reasoning_tokens", reasoningTokens)
}

return out
}


@@ -114,13 +114,16 @@ func ConvertOpenAIResponsesRequestToClaude(modelName string, inputRawJSON []byte
var builder strings.Builder
if parts := item.Get("content"); parts.Exists() && parts.IsArray() {
parts.ForEach(func(_, part gjson.Result) bool {
text := part.Get("text").String()
textResult := part.Get("text")
text := textResult.String()
if builder.Len() > 0 && text != "" {
builder.WriteByte('\n')
}
builder.WriteString(text)
return true
})
} else if parts.Type == gjson.String {
builder.WriteString(parts.String())
}
instructionsText = builder.String()
if instructionsText != "" {
@@ -207,6 +210,8 @@ func ConvertOpenAIResponsesRequestToClaude(modelName string, inputRawJSON []byte
}
return true
})
} else if parts.Type == gjson.String {
textAggregate.WriteString(parts.String())
}

// Fallback to given role if content types not decisive

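When the converter builds the Claude system instructions, it joins text parts with a newline, but only between non-empty pieces; a bare string `content` is now used as-is. The sketch below works on values decoded by `encoding/json` (`[]any` of `map[string]any`). `instructionsText` is an illustrative name, not the project's function.

```go
package main

import "strings"

// instructionsText flattens a Responses-style "content" value: a string is
// returned unchanged; for an array, each part's "text" is appended, with a
// newline written before a non-empty piece once something has been written.
func instructionsText(content any) string {
	switch c := content.(type) {
	case string:
		return c
	case []any:
		var b strings.Builder
		for _, item := range c {
			part, _ := item.(map[string]any) // non-object parts yield a nil map
			text, _ := part["text"].(string)
			if b.Len() > 0 && text != "" {
				b.WriteByte('\n')
			}
			b.WriteString(text)
		}
		return b.String()
	default:
		return ""
	}
}
```

Parts without text (for example an image part) contribute nothing and do not produce stray blank lines.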

@@ -218,8 +218,29 @@ func ConvertOpenAIRequestToGeminiCLI(modelName string, inputRawJSON []byte, _ bo
if content.Type == gjson.String {
// Assistant text -> single model content
node, _ = sjson.SetBytes(node, "parts.-1.text", content.String())
out, _ = sjson.SetRawBytes(out, "request.contents.-1", node)
p++
} else if content.IsArray() {
// Assistant multimodal content (e.g. text + image) -> single model content with parts
for _, item := range content.Array() {
switch item.Get("type").String() {
case "text":
node, _ = sjson.SetBytes(node, "parts."+itoa(p)+".text", item.Get("text").String())
p++
case "image_url":
// If the assistant returned an inline data URL, preserve it for history fidelity.
imageURL := item.Get("image_url.url").String()
if len(imageURL) > 5 { // expect data:...
pieces := strings.SplitN(imageURL[5:], ";", 2)
if len(pieces) == 2 && len(pieces[1]) > 7 {
mime := pieces[0]
data := pieces[1][7:]
node, _ = sjson.SetBytes(node, "parts."+itoa(p)+".inlineData.mime_type", mime)
node, _ = sjson.SetBytes(node, "parts."+itoa(p)+".inlineData.data", data)
p++
}
}
}
}
}

// Tool calls -> single model content with functionCall parts
@@ -260,6 +281,8 @@ func ConvertOpenAIRequestToGeminiCLI(modelName string, inputRawJSON []byte, _ bo
if pp > 0 {
out, _ = sjson.SetRawBytes(out, "request.contents.-1", toolNode)
}
} else {
out, _ = sjson.SetRawBytes(out, "request.contents.-1", node)
}
}
}


@@ -170,12 +170,14 @@ func ConvertCliResponseToOpenAI(_ context.Context, _ string, originalRequestRawJ
mimeType = "image/png"
}
imageURL := fmt.Sprintf("data:%s;base64,%s", mimeType, data)
imagePayload := `{"image_url":{"url":""},"type":"image_url"}`
imagePayload, _ = sjson.Set(imagePayload, "image_url.url", imageURL)
imagesResult := gjson.Get(template, "choices.0.delta.images")
if !imagesResult.Exists() || !imagesResult.IsArray() {
template, _ = sjson.SetRaw(template, "choices.0.delta.images", `[]`)
}
imageIndex := len(gjson.Get(template, "choices.0.delta.images").Array())
imagePayload := `{"type":"image_url","image_url":{"url":""}}`
imagePayload, _ = sjson.Set(imagePayload, "index", imageIndex)
imagePayload, _ = sjson.Set(imagePayload, "image_url.url", imageURL)
template, _ = sjson.Set(template, "choices.0.delta.role", "assistant")
template, _ = sjson.SetRaw(template, "choices.0.delta.images.-1", imagePayload)
}


@@ -233,18 +233,15 @@ func ConvertOpenAIRequestToGemini(modelName string, inputRawJSON []byte, _ bool)
} else if role == "assistant" {
node := []byte(`{"role":"model","parts":[]}`)
p := 0

if content.Type == gjson.String {
// Assistant text -> single model content
node, _ = sjson.SetBytes(node, "parts.-1.text", content.String())
out, _ = sjson.SetRawBytes(out, "contents.-1", node)
p++
} else if content.IsArray() {
// Assistant multimodal content (e.g. text + image) -> single model content with parts
for _, item := range content.Array() {
switch item.Get("type").String() {
case "text":
node, _ = sjson.SetBytes(node, "parts."+itoa(p)+".text", item.Get("text").String())
p++
case "image_url":
// If the assistant returned an inline data URL, preserve it for history fidelity.
@@ -261,7 +258,6 @@ func ConvertOpenAIRequestToGemini(modelName string, inputRawJSON []byte, _ bool)
}
}
}
out, _ = sjson.SetRawBytes(out, "contents.-1", node)
}

// Tool calls -> single model content with functionCall parts
@@ -302,6 +298,8 @@ func ConvertOpenAIRequestToGemini(modelName string, inputRawJSON []byte, _ bool)
if pp > 0 {
out, _ = sjson.SetRawBytes(out, "contents.-1", toolNode)
}
} else {
out, _ = sjson.SetRawBytes(out, "contents.-1", node)
}
}
}


@@ -182,12 +182,14 @@ func ConvertGeminiResponseToOpenAI(_ context.Context, _ string, originalRequestR
mimeType = "image/png"
}
imageURL := fmt.Sprintf("data:%s;base64,%s", mimeType, data)
imagePayload := `{"image_url":{"url":""},"type":"image_url"}`
imagePayload, _ = sjson.Set(imagePayload, "image_url.url", imageURL)
imagesResult := gjson.Get(template, "choices.0.delta.images")
if !imagesResult.Exists() || !imagesResult.IsArray() {
template, _ = sjson.SetRaw(template, "choices.0.delta.images", `[]`)
}
imageIndex := len(gjson.Get(template, "choices.0.delta.images").Array())
imagePayload := `{"type":"image_url","image_url":{"url":""}}`
imagePayload, _ = sjson.Set(imagePayload, "index", imageIndex)
imagePayload, _ = sjson.Set(imagePayload, "image_url.url", imageURL)
template, _ = sjson.Set(template, "choices.0.delta.role", "assistant")
template, _ = sjson.SetRaw(template, "choices.0.delta.images.-1", imagePayload)
}
@@ -316,12 +318,14 @@ func ConvertGeminiResponseToOpenAINonStream(_ context.Context, _ string, origina
mimeType = "image/png"
}
imageURL := fmt.Sprintf("data:%s;base64,%s", mimeType, data)
imagePayload := `{"image_url":{"url":""},"type":"image_url"}`
imagePayload, _ = sjson.Set(imagePayload, "image_url.url", imageURL)
imagesResult := gjson.Get(template, "choices.0.message.images")
if !imagesResult.Exists() || !imagesResult.IsArray() {
template, _ = sjson.SetRaw(template, "choices.0.message.images", `[]`)
}
imageIndex := len(gjson.Get(template, "choices.0.message.images").Array())
imagePayload := `{"type":"image_url","image_url":{"url":""}}`
imagePayload, _ = sjson.Set(imagePayload, "index", imageIndex)
imagePayload, _ = sjson.Set(imagePayload, "image_url.url", imageURL)
template, _ = sjson.Set(template, "choices.0.message.role", "assistant")
template, _ = sjson.SetRaw(template, "choices.0.message.images.-1", imagePayload)
}


@@ -6,6 +6,7 @@ package usage

import (
"context"
"fmt"
"strings"
"sync"
"sync/atomic"
"time"

@@ -90,7 +91,7 @@ type modelStats struct {

type RequestDetail struct {
Timestamp time.Time `json:"timestamp"`
Source string `json:"source"`
AuthIndex uint64 `json:"auth_index"`
AuthIndex string `json:"auth_index"`
Tokens TokenStats `json:"tokens"`
Failed bool `json:"failed"`
}

@@ -281,6 +282,118 @@ func (s *RequestStatistics) Snapshot() StatisticsSnapshot {

return result
}

type MergeResult struct {
Added int64 `json:"added"`
Skipped int64 `json:"skipped"`
}

// MergeSnapshot merges an exported statistics snapshot into the current store.
// Existing data is preserved and duplicate request details are skipped.
func (s *RequestStatistics) MergeSnapshot(snapshot StatisticsSnapshot) MergeResult {
result := MergeResult{}
if s == nil {
return result
}

s.mu.Lock()
defer s.mu.Unlock()

seen := make(map[string]struct{})
for apiName, stats := range s.apis {
if stats == nil {
continue
}
for modelName, modelStatsValue := range stats.Models {
if modelStatsValue == nil {
continue
}
for _, detail := range modelStatsValue.Details {
seen[dedupKey(apiName, modelName, detail)] = struct{}{}
}
}
}

for apiName, apiSnapshot := range snapshot.APIs {
apiName = strings.TrimSpace(apiName)
if apiName == "" {
continue
}
stats, ok := s.apis[apiName]
if !ok || stats == nil {
stats = &apiStats{Models: make(map[string]*modelStats)}
s.apis[apiName] = stats
} else if stats.Models == nil {
stats.Models = make(map[string]*modelStats)
}
for modelName, modelSnapshot := range apiSnapshot.Models {
modelName = strings.TrimSpace(modelName)
if modelName == "" {
modelName = "unknown"
}
for _, detail := range modelSnapshot.Details {
detail.Tokens = normaliseTokenStats(detail.Tokens)
if detail.Timestamp.IsZero() {
detail.Timestamp = time.Now()
}
key := dedupKey(apiName, modelName, detail)
if _, exists := seen[key]; exists {
result.Skipped++
continue
}
seen[key] = struct{}{}
s.recordImported(apiName, modelName, stats, detail)
result.Added++
}
}
}

return result
}

func (s *RequestStatistics) recordImported(apiName, modelName string, stats *apiStats, detail RequestDetail) {
totalTokens := detail.Tokens.TotalTokens
if totalTokens < 0 {
totalTokens = 0
}

s.totalRequests++
if detail.Failed {
s.failureCount++
} else {
s.successCount++
}
s.totalTokens += totalTokens

s.updateAPIStats(stats, modelName, detail)

dayKey := detail.Timestamp.Format("2006-01-02")
hourKey := detail.Timestamp.Hour()

s.requestsByDay[dayKey]++
s.requestsByHour[hourKey]++
s.tokensByDay[dayKey] += totalTokens
s.tokensByHour[hourKey] += totalTokens
}

func dedupKey(apiName, modelName string, detail RequestDetail) string {
timestamp := detail.Timestamp.UTC().Format(time.RFC3339Nano)
tokens := normaliseTokenStats(detail.Tokens)
return fmt.Sprintf(
"%s|%s|%s|%s|%s|%t|%d|%d|%d|%d|%d",
apiName,
modelName,
timestamp,
detail.Source,
detail.AuthIndex,
detail.Failed,
tokens.InputTokens,
tokens.OutputTokens,
tokens.ReasoningTokens,
tokens.CachedTokens,
tokens.TotalTokens,
)
}

func resolveAPIIdentifier(ctx context.Context, record coreusage.Record) string {
if ctx != nil {
if ginCtx, ok := ctx.Value("gin").(*gin.Context); ok && ginCtx != nil {

@@ -340,6 +453,16 @@ func normaliseDetail(detail coreusage.Detail) TokenStats {

return tokens
}

func normaliseTokenStats(tokens TokenStats) TokenStats {
if tokens.TotalTokens == 0 {
tokens.TotalTokens = tokens.InputTokens + tokens.OutputTokens + tokens.ReasoningTokens
}
if tokens.TotalTokens == 0 {
tokens.TotalTokens = tokens.InputTokens + tokens.OutputTokens + tokens.ReasoningTokens + tokens.CachedTokens
}
return tokens
}

func formatHour(hour int) string {
if hour < 0 {
hour = 0
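`normaliseTokenStats` fills in a missing total in two steps. It first sums input, output, and reasoning tokens. Only if that sum is still zero (for example, a record that reports cached tokens alone) does it fall back to the sum that includes cached tokens. A stdlib sketch with a hypothetical `tokenStats` type, assuming `int64` counters:

```go
package main

import "fmt"

// tokenStats is a hypothetical stand-in for TokenStats; int64 fields are assumed.
type tokenStats struct {
	InputTokens     int64
	OutputTokens    int64
	ReasoningTokens int64
	CachedTokens    int64
	TotalTokens     int64
}

// normalise leaves an explicit total untouched; otherwise it derives one,
// adding cached tokens only when input+output+reasoning sum to zero.
func normalise(t tokenStats) tokenStats {
	if t.TotalTokens == 0 {
		t.TotalTokens = t.InputTokens + t.OutputTokens + t.ReasoningTokens
	}
	if t.TotalTokens == 0 {
		t.TotalTokens = t.InputTokens + t.OutputTokens + t.ReasoningTokens + t.CachedTokens
	}
	return t
}

func main() {
	fmt.Println(normalise(tokenStats{InputTokens: 10, OutputTokens: 5, CachedTokens: 4}).TotalTokens) // 15
	fmt.Println(normalise(tokenStats{CachedTokens: 7}).TotalTokens)                                   // 7
	fmt.Println(normalise(tokenStats{InputTokens: 3, TotalTokens: 100}).TotalTokens)                  // 100
}
```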

@@ -104,8 +104,8 @@ func BuildErrorResponseBody(status int, errText string) []byte {

// Returning 0 disables keep-alives (default when unset).
func StreamingKeepAliveInterval(cfg *config.SDKConfig) time.Duration {
seconds := defaultStreamingKeepAliveSeconds
if cfg != nil && cfg.Streaming.KeepAliveSeconds != nil {
seconds = *cfg.Streaming.KeepAliveSeconds
if cfg != nil {
seconds = cfg.Streaming.KeepAliveSeconds
}
if seconds <= 0 {
return 0

@@ -116,8 +116,8 @@ func StreamingKeepAliveInterval(cfg *config.SDKConfig) time.Duration {

// StreamingBootstrapRetries returns how many times a streaming request may be retried before any bytes are sent.
func StreamingBootstrapRetries(cfg *config.SDKConfig) int {
retries := defaultStreamingBootstrapRetries
if cfg != nil && cfg.Streaming.BootstrapRetries != nil {
retries = *cfg.Streaming.BootstrapRetries
if cfg != nil {
retries = cfg.Streaming.BootstrapRetries
}
if retries < 0 {
retries = 0

@@ -94,12 +94,11 @@ func TestExecuteStreamWithAuthManager_RetriesBeforeFirstByte(t *testing.T) {

registry.GetGlobalRegistry().UnregisterClient(auth2.ID)
})

bootstrapRetries := 1
handler := NewBaseAPIHandlers(&sdkconfig.SDKConfig{
Streaming: sdkconfig.StreamingConfig{
BootstrapRetries: &bootstrapRetries,
BootstrapRetries: 1,
},
}, manager, nil)
}, manager)
dataChan, errChan := handler.ExecuteStreamWithAuthManager(context.Background(), "openai", "test-model", []byte(`{"model":"test-model"}`), "")
if dataChan == nil || errChan == nil {
t.Fatalf("expected non-nil channels")

@@ -203,10 +203,10 @@ func (m *Manager) Register(ctx context.Context, auth *Auth) (*Auth, error) {

if auth == nil {
return nil, nil
}
auth.EnsureIndex()
if auth.ID == "" {
auth.ID = uuid.NewString()
}
auth.EnsureIndex()
m.mu.Lock()
m.auths[auth.ID] = auth.Clone()
m.mu.Unlock()

@@ -221,7 +221,7 @@ func (m *Manager) Update(ctx context.Context, auth *Auth) (*Auth, error) {

return nil, nil
}
m.mu.Lock()
if existing, ok := m.auths[auth.ID]; ok && existing != nil && !auth.indexAssigned && auth.Index == 0 {
if existing, ok := m.auths[auth.ID]; ok && existing != nil && !auth.indexAssigned && auth.Index == "" {
auth.Index = existing.Index
auth.indexAssigned = existing.indexAssigned
}

@@ -263,7 +263,6 @@ func (m *Manager) Execute(ctx context.Context, providers []string, req cliproxye

return cliproxyexecutor.Response{}, &Error{Code: "provider_not_found", Message: "no provider supplied"}
}
rotated := m.rotateProviders(req.Model, normalized)
defer m.advanceProviderCursor(req.Model, normalized)

retryTimes, maxWait := m.retrySettings()
attempts := retryTimes + 1

@@ -302,7 +301,6 @@ func (m *Manager) ExecuteCount(ctx context.Context, providers []string, req clip

return cliproxyexecutor.Response{}, &Error{Code: "provider_not_found", Message: "no provider supplied"}
}
rotated := m.rotateProviders(req.Model, normalized)
defer m.advanceProviderCursor(req.Model, normalized)

retryTimes, maxWait := m.retrySettings()
attempts := retryTimes + 1

@@ -341,7 +339,6 @@ func (m *Manager) ExecuteStream(ctx context.Context, providers []string, req cli

return nil, &Error{Code: "provider_not_found", Message: "no provider supplied"}
}
rotated := m.rotateProviders(req.Model, normalized)
defer m.advanceProviderCursor(req.Model, normalized)

retryTimes, maxWait := m.retrySettings()
attempts := retryTimes + 1

@@ -640,13 +637,20 @@ func (m *Manager) normalizeProviders(providers []string) []string {

return result
}

// rotateProviders returns a rotated view of the providers list starting from the
// current offset for the model, and atomically increments the offset for the next call.
// This ensures concurrent requests get different starting providers.
func (m *Manager) rotateProviders(model string, providers []string) []string {
if len(providers) == 0 {
return nil
}
m.mu.RLock()

// Atomic read-and-increment: get current offset and advance cursor in one lock
m.mu.Lock()
offset := m.providerOffsets[model]
m.mu.RUnlock()
m.providerOffsets[model] = (offset + 1) % len(providers)
m.mu.Unlock()

if len(providers) > 0 {
offset %= len(providers)
}

@@ -662,19 +666,6 @@ func (m *Manager) rotateProviders(model string, providers []string) []string {

return rotated
}

func (m *Manager) advanceProviderCursor(model string, providers []string) {
if len(providers) == 0 {
m.mu.Lock()
delete(m.providerOffsets, model)
m.mu.Unlock()
return
}
m.mu.Lock()
current := m.providerOffsets[model]
m.providerOffsets[model] = (current + 1) % len(providers)
m.mu.Unlock()
}

func (m *Manager) retrySettings() (int, time.Duration) {
if m == nil {
return 0, 0

@@ -1,11 +1,12 @@

package auth

import (
"crypto/sha256"
"encoding/hex"
"encoding/json"
"strconv"
"strings"
"sync"
"sync/atomic"
"time"

baseauth "github.com/router-for-me/CLIProxyAPI/v6/internal/auth"

@@ -15,8 +16,8 @@ import (

type Auth struct {
// ID uniquely identifies the auth record across restarts.
ID string `json:"id"`
// Index is a monotonically increasing runtime identifier used for diagnostics.
Index uint64 `json:"-"`
// Index is a stable runtime identifier derived from auth metadata (not persisted).
Index string `json:"-"`
// Provider is the upstream provider key (e.g. "gemini", "claude").
Provider string `json:"provider"`
// Prefix optionally namespaces models for routing (e.g., "teamA/gemini-3-pro-preview").

@@ -94,12 +95,6 @@ type ModelState struct {

UpdatedAt time.Time `json:"updated_at"`
}

var authIndexCounter atomic.Uint64

func nextAuthIndex() uint64 {
return authIndexCounter.Add(1) - 1
}

// Clone shallow copies the Auth structure, duplicating maps to avoid accidental mutation.
func (a *Auth) Clone() *Auth {
if a == nil {

@@ -128,15 +123,41 @@ func (a *Auth) Clone() *Auth {

return &copyAuth
}

// EnsureIndex returns the global index, assigning one if it was not set yet.
func (a *Auth) EnsureIndex() uint64 {
if a == nil {
return 0
func stableAuthIndex(seed string) string {
seed = strings.TrimSpace(seed)
if seed == "" {
return ""
}
if a.indexAssigned {
sum := sha256.Sum256([]byte(seed))
return hex.EncodeToString(sum[:8])
}

// EnsureIndex returns a stable index derived from the auth file name or API key.
func (a *Auth) EnsureIndex() string {
if a == nil {
return ""
}
if a.indexAssigned && a.Index != "" {
return a.Index
}
idx := nextAuthIndex()

seed := strings.TrimSpace(a.FileName)
if seed != "" {
seed = "file:" + seed
} else if a.Attributes != nil {
if apiKey := strings.TrimSpace(a.Attributes["api_key"]); apiKey != "" {
seed = "api_key:" + apiKey
}
}
if seed == "" {
if id := strings.TrimSpace(a.ID); id != "" {
seed = "id:" + id
} else {
return ""
}
}

idx := stableAuthIndex(seed)
a.Index = idx
a.indexAssigned = true
return idx
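The new `EnsureIndex` replaces the process-wide counter with a deterministic value. It picks a seed from the file name, then the `api_key` attribute, then the ID (prefixed `file:`, `api_key:`, or `id:` respectively). `stableAuthIndex` then hashes that seed with SHA-256 and keeps the first 8 bytes as 16 hex characters, so the same credential gets the same index across restarts. A stdlib sketch follows; `pickSeed` is a hypothetical flattening of the seed selection over plain strings.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
	"strings"
)

// stableAuthIndex follows the hunk: trim, hash with SHA-256, and hex-encode
// the first 8 bytes (16 hex characters). Blank seeds yield "".
func stableAuthIndex(seed string) string {
	seed = strings.TrimSpace(seed)
	if seed == "" {
		return ""
	}
	sum := sha256.Sum256([]byte(seed))
	return hex.EncodeToString(sum[:8])
}

// pickSeed is a hypothetical flattening of EnsureIndex's seed selection:
// file name first, then API key, then ID, each with a type prefix.
func pickSeed(fileName, apiKey, id string) string {
	if s := strings.TrimSpace(fileName); s != "" {
		return "file:" + s
	}
	if s := strings.TrimSpace(apiKey); s != "" {
		return "api_key:" + s
	}
	if s := strings.TrimSpace(id); s != "" {
		return "id:" + s
	}
	return ""
}

func main() {
	seed := pickSeed("gemini-user.json", "", "3f2a")
	fmt.Println(seed, stableAuthIndex(seed))
	fmt.Println(stableAuthIndex("abc")) // ba7816bf8f01cfea
}
```

Truncating the digest to 64 bits keeps the index short. Accidental collisions between different seeds are unlikely, though not impossible.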

@@ -14,7 +14,7 @@ type Record struct {

Model string
APIKey string
AuthID string
AuthIndex uint64
AuthIndex string
Source string
RequestedAt time.Time
Failed bool
