mirror of

https://github.com/router-for-me/CLIProxyAPI.git

synced 2026-02-02 12:30:50 +08:00

Compare commits

107 Commits

| SHA1 |
|---|
| 750b930679 |
| 3902fd7501 |
| 4fc3d5e935 |
| 2d2f4572a7 |
| 8f4c46f38d |
| b6ba51bc2a |
| 6a66d32d37 |
| 8d15723195 |
| 736e0aae86 |
| 8bf3305b2b |
| d00e3ea973 |
| 89db4e9481 |
| e332419081 |
| e998b1229a |
| bbed134bd1 |
| 47b9503112 |
| 3b9253c2be |
| d241359153 |
| f4d4249ba5 |
| cb56cb250e |
| e0381a6ae0 |
| 2c01b2ef64 |
| e947266743 |
| c6b0e85b54 |
| 26efbed05c |
| 96340bf136 |
| b055e00c1a |
| 857c880f99 |
| ce7474d953 |
| 70fdd70b84 |
| 08ab6a7d77 |
| 9fa2a7e9df |
| d443c86620 |
| 7be3f1c36c |
| f6ab6d97b9 |
| bc866bac49 |
| 50e6d845f4 |
| a8cb01819d |
| 530273906b |
| 06ddf575d9 |
| 3099114cbb |
| 44b63f0767 |
| 6705d20194 |
| a38a9c0b0f |
| 8286caa366 |
| bd1ec8424d |
| 225e2c6797 |
| d8fc485513 |
| f137eb0ac4 |
| f39a460487 |
| ee171bc563 |
| a95428f204 |
| 3ca5fb1046 |
| a091d12f4e |
| 457924828a |
| aca2ef6359 |
| ade7194792 |
| 3a436e116a |
| 336867853b |
| 6403ff4ec4 |
| d222469b44 |
| 7646a2b877 |
| 62090f2568 |
| c281f4cbaf |
| 09455f9e85 |
| c8e72ba0dc |
| 375ef252ab |
| ee552f8720 |
| 2e88c4858e |
| 3f50da85c1 |
| 8be06255f7 |
| 72274099aa |
| dcae098e23 |
| 2eb05ec640 |
| 3ce0d76aa4 |
| a00b79d9be |
| 33e53a2a56 |
| cd5b80785f |
| 54f71aa273 |
| 3f949b7f84 |
| 443c4538bb |
| a7fc2ee4cf |
| 8e749ac22d |
| 69e09d9bc7 |
| 06ad527e8c |
| b7409dd2de |
| 5ba325a8fc |
| d502840f91 |
| 99238a4b59 |
| 6d43a2ff9a |
| 3faa1ca9af |
| 9d975e0375 |
| 2a6d8b78d4 |
| 671558a822 |
| 26fbb77901 |
| a277302262 |
| 969c1a5b72 |
| 872339bceb |
| 5dc0dbc7aa |
| 2b7ba54a2f |
| 007c3304f2 |
| e76ba0ede9 |
| c06ac07e23 |
| 8d25cf0d75 |
| 64e85e7019 |
| 0c0aae1eac |
| 5dcf7cb846 |

@@ -13,8 +13,6 @@ Dockerfile

|

||||

docs/*

|

||||

README.md

|

||||

README_CN.md

|

||||

MANAGEMENT_API.md

|

||||

MANAGEMENT_API_CN.md

|

||||

LICENSE

|

||||

|

||||

# Runtime data folders (should be mounted as volumes)

|

||||

@@ -25,10 +23,14 @@ config.yaml

|

||||

|

||||

# Development/editor

|

||||

bin/*

|

||||

.claude/*

|

||||

.vscode/*

|

||||

.claude/*

|

||||

.codex/*

|

||||

.gemini/*

|

||||

.serena/*

|

||||

.agent/*

|

||||

.agents/*

|

||||

.opencode/*

|

||||

.bmad/*

|

||||

_bmad/*

|

||||

_bmad-output/*

|

||||

|

||||

7

.github/ISSUE_TEMPLATE/bug_report.md

vendored


@@ -7,6 +7,13 @@ assignees: ''

|

||||

|

||||

---

|

||||

|

||||

**Is it a request payload issue?**

|

||||

[ ] Yes, this is a request payload issue. I am using a client/cURL to send a request payload, but I received an unexpected error.

|

||||

[ ] No, it's another issue.

|

||||

|

||||

**If it's a request payload issue, you MUST know**

|

||||

Our team doesn't have any GODs or ORACLEs or MIND READERs. Please make sure to attach the request log or curl payload.

|

||||

|

||||

**Describe the bug**

|

||||

A clear and concise description of what the bug is.

|

||||

|

||||

|

||||

11

.gitignore

vendored


@@ -11,11 +11,15 @@ bin/*

|

||||

logs/*

|

||||

conv/*

|

||||

temp/*

|

||||

refs/*

|

||||

|

||||

# Storage backends

|

||||

pgstore/*

|

||||

gitstore/*

|

||||

objectstore/*

|

||||

|

||||

# Static assets

|

||||

static/*

|

||||

refs/*

|

||||

|

||||

# Authentication data

|

||||

auths/*

|

||||

@@ -29,12 +33,17 @@ GEMINI.md

|

||||

|

||||

# Tooling metadata

|

||||

.vscode/*

|

||||

.codex/*

|

||||

.claude/*

|

||||

.gemini/*

|

||||

.serena/*

|

||||

.agent/*

|

||||

.agents/*

|

||||

.agents/*

|

||||

.opencode/*

|

||||

.bmad/*

|

||||

_bmad/*

|

||||

_bmad-output/*

|

||||

|

||||

# macOS

|

||||

.DS_Store

|

||||

|

||||

12

README.md


@@ -10,11 +10,11 @@ So you can use local or multi-account CLI access with OpenAI(include Responses)/

|

||||

|

||||

## Sponsor

|

||||

|

||||

[](https://z.ai/subscribe?ic=8JVLJQFSKB)

|

||||

[](https://z.ai/subscribe?ic=8JVLJQFSKB)

|

||||

|

||||

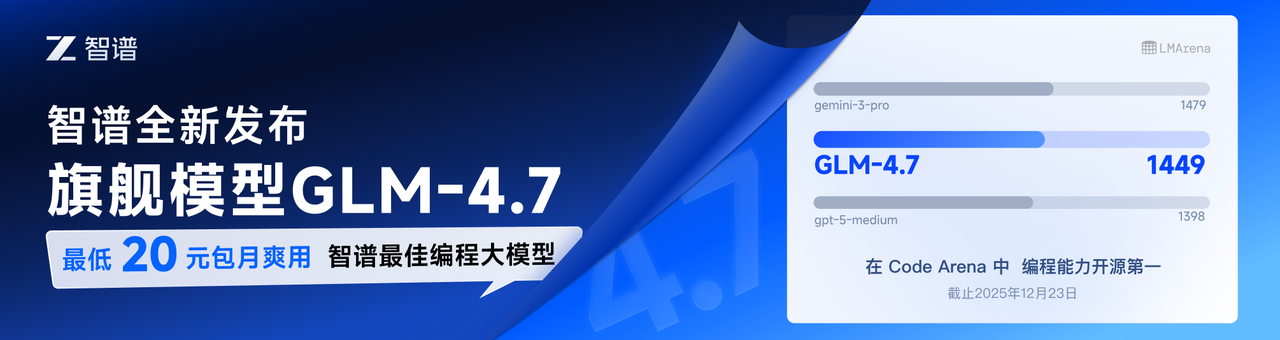

This project is sponsored by Z.ai, supporting us with their GLM CODING PLAN.

|

||||

|

||||

GLM CODING PLAN is a subscription service designed for AI coding, starting at just $3/month. It provides access to their flagship GLM-4.6 model across 10+ popular AI coding tools (Claude Code, Cline, Roo Code, etc.), offering developers top-tier, fast, and stable coding experiences.

|

||||

GLM CODING PLAN is a subscription service designed for AI coding, starting at just $3/month. It provides access to their flagship GLM-4.7 model across 10+ popular AI coding tools (Claude Code, Cline, Roo Code, etc.), offering developers top-tier, fast, and stable coding experiences.

|

||||

|

||||

Get 10% OFF GLM CODING PLAN:https://z.ai/subscribe?ic=8JVLJQFSKB

|

||||

|

||||

@@ -26,6 +26,10 @@ Get 10% OFF GLM CODING PLAN:https://z.ai/subscribe?ic=8JVLJQFSKB

|

||||

<td width="180"><a href="https://www.packyapi.com/register?aff=cliproxyapi"><img src="./assets/packycode.png" alt="PackyCode" width="150"></a></td>

|

||||

<td>Thanks to PackyCode for sponsoring this project! PackyCode is a reliable and efficient API relay service provider, offering relay services for Claude Code, Codex, Gemini, and more. PackyCode provides special discounts for our software users: register using <a href="https://www.packyapi.com/register?aff=cliproxyapi">this link</a> and enter the "cliproxyapi" promo code during recharge to get 10% off.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td width="180"><a href="https://cubence.com/signup?code=CLIPROXYAPI&source=cpa"><img src="./assets/cubence.png" alt="Cubence" width="150"></a></td>

|

||||

<td>Thanks to Cubence for sponsoring this project! Cubence is a reliable and efficient API relay service provider, offering relay services for Claude Code, Codex, Gemini, and more. Cubence provides special discounts for our software users: register using <a href="https://cubence.com/signup?code=CLIPROXYAPI&source=cpa">this link</a> and enter the "CLIPROXYAPI" promo code during recharge to get 10% off.</td>

|

||||

</tr>

|

||||

</tbody>

|

||||

</table>

|

||||

|

||||

@@ -110,6 +114,10 @@ CLI wrapper for instant switching between multiple Claude accounts and alternati

|

||||

|

||||

Native macOS GUI for managing CLIProxyAPI: configure providers, model mappings, and endpoints via OAuth - no API keys needed.

|

||||

|

||||

### [Quotio](https://github.com/nguyenphutrong/quotio)

|

||||

|

||||

Native macOS menu bar app that unifies Claude, Gemini, OpenAI, Qwen, and Antigravity subscriptions with real-time quota tracking and smart auto-failover for AI coding tools like Claude Code, OpenCode, and Droid - no API keys needed.

|

||||

|

||||

> [!NOTE]

|

||||

> If you developed a project based on CLIProxyAPI, please open a PR to add it to this list.

|

||||

|

||||

|

||||

13

README_CN.md


@@ -10,11 +10,11 @@

|

||||

|

||||

## Sponsors

|

||||

|

||||

[](https://www.bigmodel.cn/claude-code?ic=RRVJPB5SII)

|

||||

[](https://www.bigmodel.cn/claude-code?ic=RRVJPB5SII)

|

||||

|

||||

This project is sponsored by Z.ai (Zhipu), which provides technical support to the project through its GLM CODING PLAN.

|

||||

|

||||

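GLM CODING PLAN is a subscription plan built for AI coding. Starting at just 20 yuan per month, it gives access to Zhipu's flagship GLM-4.6 model in more than ten mainstream AI coding tools such as Claude Code, Cline, and Roo Code, offering developers a top-tier coding experience.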

|

||||

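GLM CODING PLAN is a subscription plan built for AI coding. Starting at just 20 yuan per month, it gives access to Zhipu's flagship GLM-4.7 model in more than ten mainstream AI coding tools such as Claude Code, Cline, and Roo Code, offering developers a top-tier coding experience.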

|

||||

|

||||

Zhipu AI offers a special discount for users of this software. Purchases made through the following link get 10% off: https://www.bigmodel.cn/claude-code?ic=RRVJPB5SII

|

||||

|

||||

@@ -26,9 +26,14 @@ GLM CODING PLAN is a subscription plan built for AI coding, starting at just 20 yuan per month

|

||||

<td width="180"><a href="https://www.packyapi.com/register?aff=cliproxyapi"><img src="./assets/packycode.png" alt="PackyCode" width="150"></a></td>

|

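<td>Thanks to PackyCode for sponsoring this project! PackyCode is a reliable and efficient API relay service provider, offering relay services for Claude Code, Codex, Gemini, and more. PackyCode provides a special discount for users of this software: register using <a href="https://www.packyapi.com/register?aff=cliproxyapi">this link</a> and enter the "cliproxyapi" promo code when recharging to get 10% off.</td>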

|

||||

</tr>

|

||||

<tr>

|

||||

<td width="180"><a href="https://cubence.com/signup?code=CLIPROXYAPI&source=cpa"><img src="./assets/cubence.png" alt="Cubence" width="150"></a></td>

|

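<td>Thanks to Cubence for sponsoring this project! Cubence is a reliable and efficient API relay service provider, offering relay services for Claude Code, Codex, Gemini, and more. Cubence provides a special discount for users of this software: register using <a href="https://cubence.com/signup?code=CLIPROXYAPI&source=cpa">this link</a> and enter the "CLIPROXYAPI" promo code when recharging to get 10% off.</td>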

|

||||

</tr>

|

||||

</tbody>

|

||||

</table>

|

||||

|

||||

|

||||

## Features

|

||||

|

||||

- Provides OpenAI/Gemini/Claude/Codex-compatible API endpoints for CLI models

|

||||

@@ -108,6 +113,10 @@ CLI wrapper for instantly switching between multiple Claude accounts via CLIProxyAPI OAuth

|

||||

|

||||

Native macOS GUI for CLIProxyAPI: configure providers, model mappings, and OAuth endpoints, with no API keys needed.

|

||||

|

||||

### [Quotio](https://github.com/nguyenphutrong/quotio)

|

||||

|

||||

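Native macOS menu bar app that unifies Claude, Gemini, OpenAI, Qwen, and Antigravity subscriptions, with real-time quota tracking and smart auto-failover for AI coding tools such as Claude Code, OpenCode, and Droid. No API keys needed.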

|

||||

|

||||

> [!NOTE]

|

> If you have developed a project based on CLIProxyAPI, please submit a PR (pull request) to add it to this list.

|

||||

|

||||

|

||||

BIN

assets/cubence.png

Normal file


Binary file not shown.

|

After Width: | Height: | Size: 51 KiB |

@@ -405,7 +405,7 @@ func main() {

|

||||

usage.SetStatisticsEnabled(cfg.UsageStatisticsEnabled)

|

||||

coreauth.SetQuotaCooldownDisabled(cfg.DisableCooling)

|

||||

|

||||

if err = logging.ConfigureLogOutput(cfg.LoggingToFile, cfg.LogsMaxTotalSizeMB); err != nil {

|

||||

if err = logging.ConfigureLogOutput(cfg); err != nil {

|

||||

log.Errorf("failed to configure log output: %v", err)

|

||||

return

|

||||

}

|

||||

|

||||

@@ -35,10 +35,14 @@ auth-dir: "~/.cli-proxy-api"

|

||||

api-keys:

|

||||

- "your-api-key-1"

|

||||

- "your-api-key-2"

|

||||

- "your-api-key-3"

|

||||

|

||||

# Enable debug logging

|

||||

debug: false

|

||||

|

||||

# When true, disable high-overhead HTTP middleware features to reduce per-request memory usage under high concurrency.

|

||||

commercial-mode: false

|

||||

|

||||

# When true, write application logs to rotating files instead of stdout

|

||||

logging-to-file: false

|

||||

|

||||

@@ -86,6 +90,9 @@ ws-auth: false

|

||||

# headers:

|

||||

# X-Custom-Header: "custom-value"

|

||||

# proxy-url: "socks5://proxy.example.com:1080"

|

||||

# models:

|

||||

# - name: "gemini-2.5-flash" # upstream model name

|

||||

# alias: "gemini-flash" # client alias mapped to the upstream model

|

||||

# excluded-models:

|

||||

# - "gemini-2.5-pro" # exclude specific models from this provider (exact match)

|

||||

# - "gemini-2.5-*" # wildcard matching prefix (e.g. gemini-2.5-flash, gemini-2.5-pro)

|

||||

@@ -101,6 +108,9 @@ ws-auth: false

|

||||

# headers:

|

||||

# X-Custom-Header: "custom-value"

|

||||

# proxy-url: "socks5://proxy.example.com:1080" # optional: per-key proxy override

|

||||

# models:

|

||||

# - name: "gpt-5-codex" # upstream model name

|

||||

# alias: "codex-latest" # client alias mapped to the upstream model

|

||||

# excluded-models:

|

||||

# - "gpt-5.1" # exclude specific models (exact match)

|

||||

# - "gpt-5-*" # wildcard matching prefix (e.g. gpt-5-medium, gpt-5-codex)
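The `excluded-models` comments above describe two kinds of entries: exact names and prefix wildcards ending in `*`. The following is a minimal sketch of that matching rule, not the project's actual matcher. The function name `isExcluded` is hypothetical, and the assumption that `*` is only meaningful as a trailing character is ours.

```go
package main

import (
	"fmt"
	"strings"
)

// isExcluded reports whether model matches any entry in patterns.
// Assumption: an entry ending in "*" is treated as a prefix wildcard and
// every other entry must match exactly. The real matcher may accept more forms.
func isExcluded(model string, patterns []string) bool {
	for _, p := range patterns {
		if strings.HasSuffix(p, "*") {
			// Prefix wildcard: compare against everything before the "*".
			if strings.HasPrefix(model, strings.TrimSuffix(p, "*")) {
				return true
			}
			continue
		}
		if p == model {
			return true
		}
	}
	return false
}

func main() {
	patterns := []string{"gpt-5.1", "gpt-5-*"}
	for _, m := range []string{"gpt-5.1", "gpt-5-codex", "gpt-5", "gpt-4o"} {
		fmt.Printf("%-12s excluded=%v\n", m, isExcluded(m, patterns))
	}
}
```

Under this reading, `gpt-5-*` covers `gpt-5-codex` but not the bare `gpt-5`, because the wildcard prefix includes the trailing hyphen.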

|

||||

@@ -118,7 +128,7 @@ ws-auth: false

|

||||

# proxy-url: "socks5://proxy.example.com:1080" # optional: per-key proxy override

|

||||

# models:

|

||||

# - name: "claude-3-5-sonnet-20241022" # upstream model name

|

||||

# alias: "claude-sonnet-latest" # client alias mapped to the upstream model

|

||||

# alias: "claude-sonnet-latest" # client alias mapped to the upstream model

|

||||

# excluded-models:

|

||||

# - "claude-opus-4-5-20251101" # exclude specific models (exact match)

|

||||

# - "claude-3-*" # wildcard matching prefix (e.g. claude-3-7-sonnet-20250219)

|

||||

@@ -149,9 +159,9 @@ ws-auth: false

|

||||

# headers:

|

||||

# X-Custom-Header: "custom-value"

|

||||

# models: # optional: map aliases to upstream model names

|

||||

# - name: "gemini-2.0-flash" # upstream model name

|

||||

# - name: "gemini-2.5-flash" # upstream model name

|

||||

# alias: "vertex-flash" # client-visible alias

|

||||

# - name: "gemini-1.5-pro"

|

||||

# - name: "gemini-2.5-pro"

|

||||

# alias: "vertex-pro"

|

||||

|

||||

# Amp Integration

|

||||

@@ -160,6 +170,18 @@ ws-auth: false

|

||||

# upstream-url: "https://ampcode.com"

|

||||

# # Optional: Override API key for Amp upstream (otherwise uses env or file)

|

||||

# upstream-api-key: ""

|

||||

# # Per-client upstream API key mapping

|

||||

# # Maps client API keys (from top-level api-keys) to different Amp upstream API keys.

|

||||

# # Useful when different clients need to use different Amp accounts/quotas.

|

||||

# # If a client key isn't mapped, falls back to upstream-api-key (default behavior).

|

||||

# upstream-api-keys:

|

||||

# - upstream-api-key: "amp_key_for_team_a" # Upstream key to use for these clients

|

||||

# api-keys: # Client keys that use this upstream key

|

||||

# - "your-api-key-1"

|

||||

# - "your-api-key-2"

|

||||

# - upstream-api-key: "amp_key_for_team_b"

|

||||

# api-keys:

|

||||

# - "your-api-key-3"
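The `upstream-api-keys` comments above describe a lookup from a client API key to an Amp upstream key, falling back to `upstream-api-key` when the client key is unmapped. A rough sketch of that lookup follows. The type `upstreamKeyEntry` and the function `resolveUpstreamKey` are hypothetical names chosen for illustration, and `default_amp_key` is a placeholder value.

```go
package main

import "fmt"

// upstreamKeyEntry mirrors one item of the upstream-api-keys list
// (hypothetical type; the project's config struct may differ).
type upstreamKeyEntry struct {
	UpstreamAPIKey string
	APIKeys        []string
}

// resolveUpstreamKey returns the upstream key mapped to clientKey, or
// defaultUpstreamKey when no entry lists that client key.
func resolveUpstreamKey(clientKey, defaultUpstreamKey string, entries []upstreamKeyEntry) string {
	for _, entry := range entries {
		for _, key := range entry.APIKeys {
			if key == clientKey {
				return entry.UpstreamAPIKey
			}
		}
	}
	return defaultUpstreamKey
}

func main() {
	entries := []upstreamKeyEntry{
		{UpstreamAPIKey: "amp_key_for_team_a", APIKeys: []string{"your-api-key-1", "your-api-key-2"}},
		{UpstreamAPIKey: "amp_key_for_team_b", APIKeys: []string{"your-api-key-3"}},
	}
	for _, k := range []string{"your-api-key-2", "your-api-key-3", "unmapped-key"} {
		fmt.Println(k, "->", resolveUpstreamKey(k, "default_amp_key", entries))
	}
}
```

A linear scan is enough for a handful of keys. With many clients, building a `map[string]string` once at config load would avoid repeating the scan on every request.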

|

||||

# # Restrict Amp management routes (/api/auth, /api/user, etc.) to localhost only (default: false)

|

||||

# restrict-management-to-localhost: false

|

||||

# # Force model mappings to run before checking local API keys (default: false)

|

||||

@@ -169,12 +191,42 @@ ws-auth: false

|

||||

# # Useful when Amp CLI requests models you don't have access to (e.g., Claude Opus 4.5)

|

||||

# # but you have a similar model available (e.g., Claude Sonnet 4).

|

||||

# model-mappings:

|

||||

# - from: "claude-opus-4.5" # Model requested by Amp CLI

|

||||

# to: "claude-sonnet-4" # Route to this available model instead

|

||||

# - from: "gpt-5"

|

||||

# to: "gemini-2.5-pro"

|

||||

# - from: "claude-3-opus-20240229"

|

||||

# to: "claude-3-5-sonnet-20241022"

|

||||

# - from: "claude-opus-4-5-20251101" # Model requested by Amp CLI

|

||||

# to: "gemini-claude-opus-4-5-thinking" # Route to this available model instead

|

||||

# - from: "claude-sonnet-4-5-20250929"

|

||||

# to: "gemini-claude-sonnet-4-5-thinking"

|

||||

# - from: "claude-haiku-4-5-20251001"

|

||||

# to: "gemini-2.5-flash"

|

||||

|

||||

# Global OAuth model name mappings (per channel)

|

||||

# These mappings rename model IDs for both model listing and request routing.

|

||||

# Supported channels: gemini-cli, vertex, aistudio, antigravity, claude, codex, qwen, iflow.

|

||||

# NOTE: Mappings do not apply to gemini-api-key, codex-api-key, claude-api-key, openai-compatibility, vertex-api-key, or ampcode.

|

||||

# oauth-model-mappings:

|

||||

# gemini-cli:

|

||||

# - name: "gemini-2.5-pro" # original model name under this channel

|

||||

# alias: "g2.5p" # client-visible alias

|

||||

# vertex:

|

||||

# - name: "gemini-2.5-pro"

|

||||

# alias: "g2.5p"

|

||||

# aistudio:

|

||||

# - name: "gemini-2.5-pro"

|

||||

# alias: "g2.5p"

|

||||

# antigravity:

|

||||

# - name: "gemini-3-pro-preview"

|

||||

# alias: "g3p"

|

||||

# claude:

|

||||

# - name: "claude-sonnet-4-5-20250929"

|

||||

# alias: "cs4.5"

|

||||

# codex:

|

||||

# - name: "gpt-5"

|

||||

# alias: "g5"

|

||||

# qwen:

|

||||

# - name: "qwen3-coder-plus"

|

||||

# alias: "qwen-plus"

|

||||

# iflow:

|

||||

# - name: "glm-4.7"

|

||||

# alias: "glm-god"
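The comments above say that `oauth-model-mappings` renames model IDs per channel for both model listing and request routing. The sketch below shows one way such a two-way lookup could work. The types and method names are hypothetical, and it assumes exact string matching and that unmapped names pass through unchanged.

```go
package main

import "fmt"

// modelMapping mirrors one name/alias pair under a channel (hypothetical type).
type modelMapping struct {
	Name  string // original model name under the channel
	Alias string // client-visible alias
}

// channelMappings maps a channel name such as "gemini-cli" to its mappings.
type channelMappings map[string][]modelMapping

// upstreamName is the routing direction: alias requested by a client -> original name.
func (c channelMappings) upstreamName(channel, requested string) string {
	for _, m := range c[channel] {
		if m.Alias == requested {
			return m.Name
		}
	}
	return requested // assumption: unmapped names are passed through
}

// listedName is the listing direction: original name -> alias shown to clients.
func (c channelMappings) listedName(channel, upstream string) string {
	for _, m := range c[channel] {
		if m.Name == upstream {
			return m.Alias
		}
	}
	return upstream
}

func main() {
	cfg := channelMappings{
		"gemini-cli": {{Name: "gemini-2.5-pro", Alias: "g2.5p"}},
		"codex":      {{Name: "gpt-5", Alias: "g5"}},
	}
	fmt.Println(cfg.upstreamName("gemini-cli", "g2.5p")) // routing lookup
	fmt.Println(cfg.listedName("codex", "gpt-5"))        // listing lookup
	fmt.Println(cfg.upstreamName("codex", "g2.5p"))      // alias from another channel is not applied
}
```

Keeping the mappings keyed by channel means the same alias can point at different models on different channels without conflict.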

|

||||

|

||||

# OAuth provider excluded models

|

||||

# oauth-excluded-models:

|

||||

|

||||

538

internal/api/handlers/management/api_tools.go

Normal file


@@ -0,0 +1,538 @@

|

||||

package management

|

||||

|

||||

import (

|

||||

"context"

|

||||

"encoding/json"

|

||||

"fmt"

|

||||

"io"

|

||||

"net"

|

||||

"net/http"

|

||||

"net/url"

|

||||

"strings"

|

||||

"time"

|

||||

|

||||

"github.com/gin-gonic/gin"

|

||||

"github.com/router-for-me/CLIProxyAPI/v6/internal/runtime/geminicli"

|

||||

coreauth "github.com/router-for-me/CLIProxyAPI/v6/sdk/cliproxy/auth"

|

||||

log "github.com/sirupsen/logrus"

|

||||

"golang.org/x/net/proxy"

|

||||

"golang.org/x/oauth2"

|

||||

"golang.org/x/oauth2/google"

|

||||

)

|

||||

|

||||

const defaultAPICallTimeout = 60 * time.Second

|

||||

|

||||

const (

|

||||

geminiOAuthClientID = "681255809395-oo8ft2oprdrnp9e3aqf6av3hmdib135j.apps.googleusercontent.com"

|

||||

geminiOAuthClientSecret = "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"

|

||||

)

|

||||

|

||||

var geminiOAuthScopes = []string{

|

||||

"https://www.googleapis.com/auth/cloud-platform",

|

||||

"https://www.googleapis.com/auth/userinfo.email",

|

||||

"https://www.googleapis.com/auth/userinfo.profile",

|

||||

}

|

||||

|

||||

type apiCallRequest struct {

|

||||

AuthIndexSnake *string `json:"auth_index"`

|

||||

AuthIndexCamel *string `json:"authIndex"`

|

||||

AuthIndexPascal *string `json:"AuthIndex"`

|

||||

Method string `json:"method"`

|

||||

URL string `json:"url"`

|

||||

Header map[string]string `json:"header"`

|

||||

Data string `json:"data"`

|

||||

}

|

||||

|

||||

type apiCallResponse struct {

|

||||

StatusCode int `json:"status_code"`

|

||||

Header map[string][]string `json:"header"`

|

||||

Body string `json:"body"`

|

||||

}

|

||||

|

||||

// APICall makes a generic HTTP request on behalf of the management API caller.

|

||||

// It is protected by the management middleware.

|

||||

//

|

||||

// Endpoint:

|

||||

//

|

||||

// POST /v0/management/api-call

|

||||

//

|

||||

// Authentication:

|

||||

//

|

||||

// Same as other management APIs (requires a management key and remote-management rules).

|

||||

// You can provide the key via:

|

||||

// - Authorization: Bearer <key>

|

||||

// - X-Management-Key: <key>

|

||||

//

|

||||

// Request JSON:

|

||||

// - auth_index / authIndex / AuthIndex (optional):

|

||||

// The credential "auth_index" from GET /v0/management/auth-files (or other endpoints returning it).

|

||||

// If omitted or not found, credential-specific proxy/token substitution is skipped.

|

||||

// - method (required): HTTP method, e.g. GET, POST, PUT, PATCH, DELETE.

|

||||

// - url (required): Absolute URL including scheme and host, e.g. "https://api.example.com/v1/ping".

|

||||

// - header (optional): Request headers map.

|

||||

// Supports magic variable "$TOKEN$" which is replaced using the selected credential:

|

||||

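// 1) metadata.accessToken / metadata.access_token

// 2) metadata.token, then metadata.id_token, then metadata.cookie

// 3) attributes.api_key

// 4) metadata of the shared gemini-cli credential, if any

// For gemini-cli credentials this lookup is skipped and a refreshed OAuth access token is used.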

|

||||

// Example: {"Authorization":"Bearer $TOKEN$"}.

|

||||

// Note: if you need to override the HTTP Host header, set header["Host"].

|

||||

// - data (optional): Raw request body as string (useful for POST/PUT/PATCH).

|

||||

//

|

||||

// Proxy selection (highest priority first):

|

||||

// 1. Selected credential proxy_url

|

||||

// 2. Global config proxy-url

|

||||

// 3. Direct connect (environment proxies are not used)

|

||||

//

|

||||

// Response JSON (returned with HTTP 200 when the APICall itself succeeds):

|

||||

// - status_code: Upstream HTTP status code.

|

||||

// - header: Upstream response headers.

|

||||

// - body: Upstream response body as string.

|

||||

//

|

||||

// Example:

|

||||

//

|

||||

// curl -sS -X POST "http://127.0.0.1:8317/v0/management/api-call" \

|

||||

// -H "Authorization: Bearer <MANAGEMENT_KEY>" \

|

||||

// -H "Content-Type: application/json" \

|

||||

// -d '{"auth_index":"<AUTH_INDEX>","method":"GET","url":"https://api.example.com/v1/ping","header":{"Authorization":"Bearer $TOKEN$"}}'

|

||||

//

|

||||

// curl -sS -X POST "http://127.0.0.1:8317/v0/management/api-call" \

|

||||

// -H "Authorization: Bearer <MANAGEMENT_KEY>" \

|

||||

// -H "Content-Type: application/json" \

|

||||

// -d '{"auth_index":"<AUTH_INDEX>","method":"POST","url":"https://api.example.com/v1/fetchAvailableModels","header":{"Authorization":"Bearer $TOKEN$","Content-Type":"application/json","User-Agent":"cliproxyapi"},"data":"{}"}'

|

||||

func (h *Handler) APICall(c *gin.Context) {

|

||||

var body apiCallRequest

|

||||

if errBindJSON := c.ShouldBindJSON(&body); errBindJSON != nil {

|

||||

c.JSON(http.StatusBadRequest, gin.H{"error": "invalid body"})

|

||||

return

|

||||

}

|

||||

|

||||

method := strings.ToUpper(strings.TrimSpace(body.Method))

|

||||

if method == "" {

|

||||

c.JSON(http.StatusBadRequest, gin.H{"error": "missing method"})

|

||||

return

|

||||

}

|

||||

|

||||

urlStr := strings.TrimSpace(body.URL)

|

||||

if urlStr == "" {

|

||||

c.JSON(http.StatusBadRequest, gin.H{"error": "missing url"})

|

||||

return

|

||||

}

|

||||

parsedURL, errParseURL := url.Parse(urlStr)

|

||||

if errParseURL != nil || parsedURL.Scheme == "" || parsedURL.Host == "" {

|

||||

c.JSON(http.StatusBadRequest, gin.H{"error": "invalid url"})

|

||||

return

|

||||

}

|

||||

|

||||

authIndex := firstNonEmptyString(body.AuthIndexSnake, body.AuthIndexCamel, body.AuthIndexPascal)

|

||||

auth := h.authByIndex(authIndex)

|

||||

|

||||

reqHeaders := body.Header

|

||||

if reqHeaders == nil {

|

||||

reqHeaders = map[string]string{}

|

||||

}

|

||||

|

||||

var hostOverride string

|

||||

var token string

|

||||

var tokenResolved bool

|

||||

var tokenErr error

|

||||

for key, value := range reqHeaders {

|

||||

if !strings.Contains(value, "$TOKEN$") {

|

||||

continue

|

||||

}

|

||||

if !tokenResolved {

|

||||

token, tokenErr = h.resolveTokenForAuth(c.Request.Context(), auth)

|

||||

tokenResolved = true

|

||||

}

|

||||

if auth != nil && token == "" {

|

||||

if tokenErr != nil {

|

||||

c.JSON(http.StatusBadRequest, gin.H{"error": "auth token refresh failed"})

|

||||

return

|

||||

}

|

||||

c.JSON(http.StatusBadRequest, gin.H{"error": "auth token not found"})

|

||||

return

|

||||

}

|

||||

if token == "" {

|

||||

continue

|

||||

}

|

||||

reqHeaders[key] = strings.ReplaceAll(value, "$TOKEN$", token)

|

||||

}

|

||||

|

||||

var requestBody io.Reader

|

||||

if body.Data != "" {

|

||||

requestBody = strings.NewReader(body.Data)

|

||||

}

|

||||

|

||||

req, errNewRequest := http.NewRequestWithContext(c.Request.Context(), method, urlStr, requestBody)

|

||||

if errNewRequest != nil {

|

||||

c.JSON(http.StatusBadRequest, gin.H{"error": "failed to build request"})

|

||||

return

|

||||

}

|

||||

|

||||

for key, value := range reqHeaders {

|

||||

if strings.EqualFold(key, "host") {

|

||||

hostOverride = strings.TrimSpace(value)

|

||||

continue

|

||||

}

|

||||

req.Header.Set(key, value)

|

||||

}

|

||||

if hostOverride != "" {

|

||||

req.Host = hostOverride

|

||||

}

|

||||

|

||||

httpClient := &http.Client{

|

||||

Timeout: defaultAPICallTimeout,

|

||||

}

|

||||

httpClient.Transport = h.apiCallTransport(auth)

|

||||

|

||||

resp, errDo := httpClient.Do(req)

|

||||

if errDo != nil {

|

||||

log.WithError(errDo).Debug("management APICall request failed")

|

||||

c.JSON(http.StatusBadGateway, gin.H{"error": "request failed"})

|

||||

return

|

||||

}

|

||||

defer func() {

|

||||

if errClose := resp.Body.Close(); errClose != nil {

|

||||

log.Errorf("response body close error: %v", errClose)

|

||||

}

|

||||

}()

|

||||

|

||||

respBody, errReadAll := io.ReadAll(resp.Body)

|

||||

if errReadAll != nil {

|

||||

c.JSON(http.StatusBadGateway, gin.H{"error": "failed to read response"})

|

||||

return

|

||||

}

|

||||

|

||||

c.JSON(http.StatusOK, apiCallResponse{

|

||||

StatusCode: resp.StatusCode,

|

||||

Header: resp.Header,

|

||||

Body: string(respBody),

|

||||

})

|

||||

}

|

||||

|

||||

func firstNonEmptyString(values ...*string) string {

|

||||

for _, v := range values {

|

||||

if v == nil {

|

||||

continue

|

||||

}

|

||||

if out := strings.TrimSpace(*v); out != "" {

|

||||

return out

|

||||

}

|

||||

}

|

||||

return ""

|

||||

}
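The handler accepts the credential index under three JSON spellings and passes them through `firstNonEmptyString`. The standalone sketch below reproduces that helper from the diff and shows how the spellings resolve. The wrapper `authIndexFromJSON` and the struct `authIndexFields` are hypothetical, added only to make the example runnable on its own.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strings"
)

// authIndexFields keeps only the three auth index spellings from apiCallRequest.
type authIndexFields struct {
	Snake  *string `json:"auth_index"`
	Camel  *string `json:"authIndex"`
	Pascal *string `json:"AuthIndex"`
}

// firstNonEmptyString is copied from the diff: it returns the first value
// that is non-nil and non-blank after trimming.
func firstNonEmptyString(values ...*string) string {
	for _, v := range values {
		if v == nil {
			continue
		}
		if out := strings.TrimSpace(*v); out != "" {
			return out
		}
	}
	return ""
}

// authIndexFromJSON decodes raw and resolves the index in the same order the
// handler uses: snake_case, then camelCase, then PascalCase.
func authIndexFromJSON(raw string) (string, error) {
	var f authIndexFields
	if err := json.Unmarshal([]byte(raw), &f); err != nil {
		return "", err
	}
	return firstNonEmptyString(f.Snake, f.Camel, f.Pascal), nil
}

func main() {
	for _, raw := range []string{
		`{"auth_index":"a1"}`,
		`{"auth_index":"  ","authIndex":" b2 "}`,
		`{"AuthIndex":"c3"}`,
		`{}`,
	} {
		idx, err := authIndexFromJSON(raw)
		fmt.Printf("%-40s -> %q (err=%v)\n", raw, idx, err)
	}
}
```

Pointer fields let the decoder distinguish an absent key from an empty string, although the helper treats both the same way: a blank value falls through to the next spelling.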

|

||||

|

||||

func tokenValueForAuth(auth *coreauth.Auth) string {

|

||||

if auth == nil {

|

||||

return ""

|

||||

}

|

||||

if v := tokenValueFromMetadata(auth.Metadata); v != "" {

|

||||

return v

|

||||

}

|

||||

if auth.Attributes != nil {

|

||||

if v := strings.TrimSpace(auth.Attributes["api_key"]); v != "" {

|

||||

return v

|

||||

}

|

||||

}

|

||||

if shared := geminicli.ResolveSharedCredential(auth.Runtime); shared != nil {

|

||||

if v := tokenValueFromMetadata(shared.MetadataSnapshot()); v != "" {

|

||||

return v

|

||||

}

|

||||

}

|

||||

return ""

|

||||

}

|

||||

|

||||

func (h *Handler) resolveTokenForAuth(ctx context.Context, auth *coreauth.Auth) (string, error) {

|

||||

if auth == nil {

|

||||

return "", nil

|

||||

}

|

||||

|

||||

provider := strings.ToLower(strings.TrimSpace(auth.Provider))

|

||||

if provider == "gemini-cli" {

|

||||

token, errToken := h.refreshGeminiOAuthAccessToken(ctx, auth)

|

||||

return token, errToken

|

||||

}

|

||||

|

||||

return tokenValueForAuth(auth), nil

|

||||

}

|

||||

|

||||

func (h *Handler) refreshGeminiOAuthAccessToken(ctx context.Context, auth *coreauth.Auth) (string, error) {

|

||||

if ctx == nil {

|

||||

ctx = context.Background()

|

||||

}

|

||||

if auth == nil {

|

||||

return "", nil

|

||||

}

|

||||

|

||||

metadata, updater := geminiOAuthMetadata(auth)

|

||||

if len(metadata) == 0 {

|

||||

return "", fmt.Errorf("gemini oauth metadata missing")

|

||||

}

|

||||

|

||||

base := make(map[string]any)

|

||||

if tokenRaw, ok := metadata["token"].(map[string]any); ok && tokenRaw != nil {

|

||||

base = cloneMap(tokenRaw)

|

||||

}

|

||||

|

||||

var token oauth2.Token

|

||||

if len(base) > 0 {

|

||||

if raw, errMarshal := json.Marshal(base); errMarshal == nil {

|

||||

_ = json.Unmarshal(raw, &token)

|

||||

}

|

||||

}

|

||||

|

||||

if token.AccessToken == "" {

|

||||

token.AccessToken = stringValue(metadata, "access_token")

|

||||

}

|

||||

if token.RefreshToken == "" {

|

||||

token.RefreshToken = stringValue(metadata, "refresh_token")

|

||||

}

|

||||

if token.TokenType == "" {

|

||||

token.TokenType = stringValue(metadata, "token_type")

|

||||

}

|

||||

if token.Expiry.IsZero() {

|

||||

if expiry := stringValue(metadata, "expiry"); expiry != "" {

|

||||

if ts, errParseTime := time.Parse(time.RFC3339, expiry); errParseTime == nil {

|

||||

token.Expiry = ts

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

conf := &oauth2.Config{

|

||||

ClientID: geminiOAuthClientID,

|

||||

ClientSecret: geminiOAuthClientSecret,

|

||||

Scopes: geminiOAuthScopes,

|

||||

Endpoint: google.Endpoint,

|

||||

}

|

||||

|

||||

ctxToken := ctx

|

||||

httpClient := &http.Client{

|

||||

Timeout: defaultAPICallTimeout,

|

||||

Transport: h.apiCallTransport(auth),

|

||||

}

|

||||

ctxToken = context.WithValue(ctxToken, oauth2.HTTPClient, httpClient)

|

||||

|

||||

src := conf.TokenSource(ctxToken, &token)

|

||||

currentToken, errToken := src.Token()

|

||||

if errToken != nil {

|

||||

return "", errToken

|

||||

}

|

||||

|

||||

merged := buildOAuthTokenMap(base, currentToken)

|

||||

fields := buildOAuthTokenFields(currentToken, merged)

|

||||

if updater != nil {

|

||||

updater(fields)

|

||||

}

|

||||

return strings.TrimSpace(currentToken.AccessToken), nil

|

||||

}

|

||||

|

||||

func geminiOAuthMetadata(auth *coreauth.Auth) (map[string]any, func(map[string]any)) {

|

||||

if auth == nil {

|

||||

return nil, nil

|

||||

}

|

||||

if shared := geminicli.ResolveSharedCredential(auth.Runtime); shared != nil {

|

||||

snapshot := shared.MetadataSnapshot()

|

||||

return snapshot, func(fields map[string]any) { shared.MergeMetadata(fields) }

|

||||

}

|

||||

return auth.Metadata, func(fields map[string]any) {

|

||||

if auth.Metadata == nil {

|

||||

auth.Metadata = make(map[string]any)

|

||||

}

|

||||

for k, v := range fields {

|

||||

auth.Metadata[k] = v

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

func stringValue(metadata map[string]any, key string) string {

|

||||

if len(metadata) == 0 || key == "" {

|

||||

return ""

|

||||

}

|

||||

if v, ok := metadata[key].(string); ok {

|

||||

return strings.TrimSpace(v)

|

||||

}

|

||||

return ""

|

||||

}

|

||||

|

||||

func cloneMap(in map[string]any) map[string]any {

|

||||

if len(in) == 0 {

|

||||

return nil

|

||||

}

|

||||

out := make(map[string]any, len(in))

|

||||

for k, v := range in {

|

||||

out[k] = v

|

||||

}

|

||||

return out

|

||||

}

|

||||

|

||||

func buildOAuthTokenMap(base map[string]any, tok *oauth2.Token) map[string]any {

|

||||

merged := cloneMap(base)

|

||||

if merged == nil {

|

||||

merged = make(map[string]any)

|

||||

}

|

||||

if tok == nil {

|

||||

return merged

|

||||

}

|

||||

if raw, errMarshal := json.Marshal(tok); errMarshal == nil {

|

||||

var tokenMap map[string]any

|

||||

if errUnmarshal := json.Unmarshal(raw, &tokenMap); errUnmarshal == nil {

|

||||

for k, v := range tokenMap {

|

||||

merged[k] = v

|

||||

}

|

||||

}

|

||||

}

|

||||

return merged

|

||||

}

|

||||

|

||||

func buildOAuthTokenFields(tok *oauth2.Token, merged map[string]any) map[string]any {

|

||||

fields := make(map[string]any, 5)

|

||||

if tok != nil && tok.AccessToken != "" {

|

||||

fields["access_token"] = tok.AccessToken

|

||||

}

|

||||

if tok != nil && tok.TokenType != "" {

|

||||

fields["token_type"] = tok.TokenType

|

||||

}

|

||||

if tok != nil && tok.RefreshToken != "" {

|

||||

fields["refresh_token"] = tok.RefreshToken

|

||||

}

|

||||

if tok != nil && !tok.Expiry.IsZero() {

|

||||

fields["expiry"] = tok.Expiry.Format(time.RFC3339)

|

||||

}

|

||||

if len(merged) > 0 {

|

||||

fields["token"] = cloneMap(merged)

|

||||

}

|

||||

return fields

|

||||

}

func tokenValueFromMetadata(metadata map[string]any) string {
	if len(metadata) == 0 {
		return ""
	}
	if v, ok := metadata["accessToken"].(string); ok && strings.TrimSpace(v) != "" {
		return strings.TrimSpace(v)
	}
	if v, ok := metadata["access_token"].(string); ok && strings.TrimSpace(v) != "" {
		return strings.TrimSpace(v)
	}
	if tokenRaw, ok := metadata["token"]; ok && tokenRaw != nil {
		switch typed := tokenRaw.(type) {
		case string:
			if v := strings.TrimSpace(typed); v != "" {
				return v
			}
		case map[string]any:
			if v, ok := typed["access_token"].(string); ok && strings.TrimSpace(v) != "" {
				return strings.TrimSpace(v)
			}
			if v, ok := typed["accessToken"].(string); ok && strings.TrimSpace(v) != "" {
				return strings.TrimSpace(v)
			}
		case map[string]string:
			if v := strings.TrimSpace(typed["access_token"]); v != "" {
				return v
			}
			if v := strings.TrimSpace(typed["accessToken"]); v != "" {
				return v
			}
		}
	}
	if v, ok := metadata["token"].(string); ok && strings.TrimSpace(v) != "" {
		return strings.TrimSpace(v)
	}
	if v, ok := metadata["id_token"].(string); ok && strings.TrimSpace(v) != "" {
		return strings.TrimSpace(v)
	}
	if v, ok := metadata["cookie"].(string); ok && strings.TrimSpace(v) != "" {
		return strings.TrimSpace(v)
	}
	return ""
}
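
The lookup order in `tokenValueFromMetadata` can be easier to follow in condensed form. The sketch below is a hypothetical simplification, not the project's code: `stringAt` and `lookupToken` are illustrative names, and the `map[string]string` branch of the original is left out for brevity.

```go
package main

import (
	"fmt"
	"strings"
)

// stringAt returns the trimmed string stored under key, or "" when the key
// is missing or holds a non-string value.
func stringAt(m map[string]any, key string) string {
	v, ok := m[key].(string)
	if !ok {
		return ""
	}
	return strings.TrimSpace(v)
}

// lookupToken (hypothetical) applies the same precedence as the function
// above: flat access-token keys, then a nested "token" object, then the
// "token", "id_token" and "cookie" string fallbacks.
func lookupToken(metadata map[string]any) string {
	for _, key := range []string{"accessToken", "access_token"} {
		if v := stringAt(metadata, key); v != "" {
			return v
		}
	}
	if nested, ok := metadata["token"].(map[string]any); ok {
		for _, key := range []string{"access_token", "accessToken"} {
			if v := stringAt(nested, key); v != "" {
				return v
			}
		}
	}
	for _, key := range []string{"token", "id_token", "cookie"} {
		if v := stringAt(metadata, key); v != "" {
			return v
		}
	}
	return ""
}

func main() {
	// A flat key wins over a nested token object.
	fmt.Println(lookupToken(map[string]any{
		"access_token": " flat ",
		"token":        map[string]any{"access_token": "nested"},
	}))
	// With no access token anywhere, the id_token fallback is used.
	fmt.Println(lookupToken(map[string]any{"id_token": "jwt-value"}))
}
```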

func (h *Handler) authByIndex(authIndex string) *coreauth.Auth {
	authIndex = strings.TrimSpace(authIndex)
	if authIndex == "" || h == nil || h.authManager == nil {
		return nil
	}
	auths := h.authManager.List()
	for _, auth := range auths {
		if auth == nil {
			continue
		}
		auth.EnsureIndex()
		if auth.Index == authIndex {
			return auth
		}
	}
	return nil
}

func (h *Handler) apiCallTransport(auth *coreauth.Auth) http.RoundTripper {
	var proxyCandidates []string
	if auth != nil {
		if proxyStr := strings.TrimSpace(auth.ProxyURL); proxyStr != "" {
			proxyCandidates = append(proxyCandidates, proxyStr)
		}
	}
	if h != nil && h.cfg != nil {
		if proxyStr := strings.TrimSpace(h.cfg.ProxyURL); proxyStr != "" {
			proxyCandidates = append(proxyCandidates, proxyStr)
		}
	}

	for _, proxyStr := range proxyCandidates {
		if transport := buildProxyTransport(proxyStr); transport != nil {
			return transport
		}
	}

	transport, ok := http.DefaultTransport.(*http.Transport)
	if !ok || transport == nil {
		return &http.Transport{Proxy: nil}
	}
	clone := transport.Clone()
	clone.Proxy = nil
	return clone
}

func buildProxyTransport(proxyStr string) *http.Transport {
	proxyStr = strings.TrimSpace(proxyStr)
	if proxyStr == "" {
		return nil
	}

	proxyURL, errParse := url.Parse(proxyStr)
	if errParse != nil {
		log.WithError(errParse).Debug("parse proxy URL failed")
		return nil
	}
	if proxyURL.Scheme == "" || proxyURL.Host == "" {
		log.Debug("proxy URL missing scheme/host")
		return nil
	}

	if proxyURL.Scheme == "socks5" {
		var proxyAuth *proxy.Auth
		if proxyURL.User != nil {
			username := proxyURL.User.Username()
			password, _ := proxyURL.User.Password()
			proxyAuth = &proxy.Auth{User: username, Password: password}
		}
		dialer, errSOCKS5 := proxy.SOCKS5("tcp", proxyURL.Host, proxyAuth, proxy.Direct)
		if errSOCKS5 != nil {
			log.WithError(errSOCKS5).Debug("create SOCKS5 dialer failed")
			return nil
		}
		return &http.Transport{
			Proxy: nil,
			DialContext: func(ctx context.Context, network, addr string) (net.Conn, error) {
				return dialer.Dial(network, addr)
			},
		}
	}

	if proxyURL.Scheme == "http" || proxyURL.Scheme == "https" {
		return &http.Transport{Proxy: http.ProxyURL(proxyURL)}
	}

	log.Debugf("unsupported proxy scheme: %s", proxyURL.Scheme)
	return nil
}
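
To see which proxy strings `buildProxyTransport` would accept, the validation steps can be isolated from the transport construction. The sketch below uses only the standard library; `proxyKind` is a hypothetical helper, and the sample addresses are illustrative.

```go
package main

import (
	"fmt"
	"net/url"
	"strings"
)

// proxyKind (hypothetical) reproduces the validation performed above and
// reports which branch would be taken: "socks5", "http" (for both http and
// https proxies), or "" when the string would be rejected.
func proxyKind(raw string) string {
	raw = strings.TrimSpace(raw)
	if raw == "" {
		return ""
	}
	u, err := url.Parse(raw)
	if err != nil || u.Scheme == "" || u.Host == "" {
		return ""
	}
	switch u.Scheme {
	case "socks5":
		return "socks5"
	case "http", "https":
		return "http"
	default:
		return ""
	}
}

func main() {
	for _, raw := range []string{
		"socks5://user:pass@127.0.0.1:1080",
		"http://proxy.local:8080",
		"proxy.local", // no scheme, so it is rejected
		"ftp://proxy.local",
	} {
		fmt.Printf("%q -> %q\n", raw, proxyKind(raw))
	}
}
```

Because a rejected per-auth proxy returns `nil`, `apiCallTransport` falls through to the global proxy and finally to a direct connection, so a malformed value is skipped without an error being returned.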

@@ -427,9 +427,46 @@ func (h *Handler) buildAuthFileEntry(auth *coreauth.Auth) gin.H {
			log.WithError(err).Warnf("failed to stat auth file %s", path)
		}
	}
	if claims := extractCodexIDTokenClaims(auth); claims != nil {
		entry["id_token"] = claims
	}
	return entry
}

func extractCodexIDTokenClaims(auth *coreauth.Auth) gin.H {
	if auth == nil || auth.Metadata == nil {
		return nil
	}
	if !strings.EqualFold(strings.TrimSpace(auth.Provider), "codex") {
		return nil
	}
	idTokenRaw, ok := auth.Metadata["id_token"].(string)
	if !ok {
		return nil
	}
	idToken := strings.TrimSpace(idTokenRaw)
	if idToken == "" {
		return nil
	}
	claims, err := codex.ParseJWTToken(idToken)
	if err != nil || claims == nil {
		return nil
	}

	result := gin.H{}
	if v := strings.TrimSpace(claims.CodexAuthInfo.ChatgptAccountID); v != "" {
		result["chatgpt_account_id"] = v
	}
	if v := strings.TrimSpace(claims.CodexAuthInfo.ChatgptPlanType); v != "" {
		result["plan_type"] = v
	}

	if len(result) == 0 {
		return nil
	}
	return result
}

func authEmail(auth *coreauth.Auth) string {
	if auth == nil {
		return ""

@@ -597,11 +597,7 @@ func (h *Handler) PutCodexKeys(c *gin.Context) {
 	filtered := make([]config.CodexKey, 0, len(arr))
 	for i := range arr {
 		entry := arr[i]
-		entry.APIKey = strings.TrimSpace(entry.APIKey)
-		entry.BaseURL = strings.TrimSpace(entry.BaseURL)
-		entry.ProxyURL = strings.TrimSpace(entry.ProxyURL)
-		entry.Headers = config.NormalizeHeaders(entry.Headers)
-		entry.ExcludedModels = config.NormalizeExcludedModels(entry.ExcludedModels)
+		normalizeCodexKey(&entry)
 		if entry.BaseURL == "" {
 			continue
 		}

@@ -613,12 +609,13 @@ func (h *Handler) PutCodexKeys(c *gin.Context) {
 }
 func (h *Handler) PatchCodexKey(c *gin.Context) {
 	type codexKeyPatch struct {
-		APIKey         *string            `json:"api-key"`
-		Prefix         *string            `json:"prefix"`
-		BaseURL        *string            `json:"base-url"`
-		ProxyURL       *string            `json:"proxy-url"`
-		Headers        *map[string]string `json:"headers"`
-		ExcludedModels *[]string          `json:"excluded-models"`
+		APIKey         *string              `json:"api-key"`
+		Prefix         *string              `json:"prefix"`
+		BaseURL        *string              `json:"base-url"`
+		ProxyURL       *string              `json:"proxy-url"`
+		Models         *[]config.CodexModel `json:"models"`
+		Headers        *map[string]string   `json:"headers"`
+		ExcludedModels *[]string            `json:"excluded-models"`
 	}
 	var body struct {
 		Index *int `json:"index"`

@@ -667,12 +664,16 @@ func (h *Handler) PatchCodexKey(c *gin.Context) {
 	if body.Value.ProxyURL != nil {
 		entry.ProxyURL = strings.TrimSpace(*body.Value.ProxyURL)
 	}
+	if body.Value.Models != nil {
+		entry.Models = append([]config.CodexModel(nil), (*body.Value.Models)...)
+	}
 	if body.Value.Headers != nil {
 		entry.Headers = config.NormalizeHeaders(*body.Value.Headers)
 	}
 	if body.Value.ExcludedModels != nil {
 		entry.ExcludedModels = config.NormalizeExcludedModels(*body.Value.ExcludedModels)
 	}
+	normalizeCodexKey(&entry)
 	h.cfg.CodexKey[targetIndex] = entry
 	h.cfg.SanitizeCodexKeys()
 	h.persist(c)

@@ -762,6 +763,32 @@ func normalizeClaudeKey(entry *config.ClaudeKey) {
	entry.Models = normalized
}

func normalizeCodexKey(entry *config.CodexKey) {
	if entry == nil {
		return
	}
	entry.APIKey = strings.TrimSpace(entry.APIKey)
	entry.Prefix = strings.TrimSpace(entry.Prefix)
	entry.BaseURL = strings.TrimSpace(entry.BaseURL)
	entry.ProxyURL = strings.TrimSpace(entry.ProxyURL)
	entry.Headers = config.NormalizeHeaders(entry.Headers)
	entry.ExcludedModels = config.NormalizeExcludedModels(entry.ExcludedModels)
	if len(entry.Models) == 0 {
		return
	}
	normalized := make([]config.CodexModel, 0, len(entry.Models))
	for i := range entry.Models {
		model := entry.Models[i]
		model.Name = strings.TrimSpace(model.Name)
		model.Alias = strings.TrimSpace(model.Alias)
		if model.Name == "" && model.Alias == "" {
			continue
		}
		normalized = append(normalized, model)
	}
	entry.Models = normalized
}
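
The model-filtering part of `normalizeCodexKey` can be exercised on its own. This is a minimal sketch under stated assumptions: `model` is a hypothetical stand-in for `config.CodexModel` carrying only the two fields used above, and `normalizeModels` is an illustrative name.

```go
package main

import (
	"fmt"
	"strings"
)

// model is a hypothetical stand-in for config.CodexModel; only the Name and
// Alias fields referenced in the diff are modelled.
type model struct {
	Name  string
	Alias string
}

// normalizeModels trims both fields and drops entries where both end up
// empty, following the loop in normalizeCodexKey.
func normalizeModels(models []model) []model {
	if len(models) == 0 {
		return models
	}
	out := make([]model, 0, len(models))
	for _, m := range models {
		m.Name = strings.TrimSpace(m.Name)
		m.Alias = strings.TrimSpace(m.Alias)
		if m.Name == "" && m.Alias == "" {
			continue
		}
		out = append(out, m)
	}
	return out
}

func main() {
	got := normalizeModels([]model{
		{Name: " model-a ", Alias: "a"},
		{Name: "  ", Alias: ""},         // dropped: both fields blank
		{Name: "", Alias: " alias-only "}, // kept: the alias is non-empty
	})
	fmt.Printf("%+v\n", got)
}
```

An entry survives when either field is non-empty, so an alias without a name is kept; whether that is meaningful is decided elsewhere (for example by `SanitizeCodexKeys`, whose behaviour is not shown in this diff).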

// GetAmpCode returns the complete ampcode configuration.
func (h *Handler) GetAmpCode(c *gin.Context) {
	if h == nil || h.cfg == nil {

@@ -913,3 +940,151 @@ func (h *Handler) GetAmpForceModelMappings(c *gin.Context) {
func (h *Handler) PutAmpForceModelMappings(c *gin.Context) {
	h.updateBoolField(c, func(v bool) { h.cfg.AmpCode.ForceModelMappings = v })
}

// GetAmpUpstreamAPIKeys returns the ampcode upstream API keys mapping.
func (h *Handler) GetAmpUpstreamAPIKeys(c *gin.Context) {
	if h == nil || h.cfg == nil {
		c.JSON(200, gin.H{"upstream-api-keys": []config.AmpUpstreamAPIKeyEntry{}})
		return
	}
	c.JSON(200, gin.H{"upstream-api-keys": h.cfg.AmpCode.UpstreamAPIKeys})
}

// PutAmpUpstreamAPIKeys replaces all ampcode upstream API keys mappings.
func (h *Handler) PutAmpUpstreamAPIKeys(c *gin.Context) {
	var body struct {
		Value []config.AmpUpstreamAPIKeyEntry `json:"value"`
	}
	if err := c.ShouldBindJSON(&body); err != nil {
		c.JSON(400, gin.H{"error": "invalid body"})
		return
	}
	// Normalize entries: trim whitespace, filter empty
	normalized := normalizeAmpUpstreamAPIKeyEntries(body.Value)
	h.cfg.AmpCode.UpstreamAPIKeys = normalized
	h.persist(c)
}

// PatchAmpUpstreamAPIKeys adds or updates upstream API keys entries.
// Matching is done by upstream-api-key value.
func (h *Handler) PatchAmpUpstreamAPIKeys(c *gin.Context) {
	var body struct {
		Value []config.AmpUpstreamAPIKeyEntry `json:"value"`
	}
	if err := c.ShouldBindJSON(&body); err != nil {
		c.JSON(400, gin.H{"error": "invalid body"})
		return
	}

	existing := make(map[string]int)
	for i, entry := range h.cfg.AmpCode.UpstreamAPIKeys {
		existing[strings.TrimSpace(entry.UpstreamAPIKey)] = i
	}

	for _, newEntry := range body.Value {
		upstreamKey := strings.TrimSpace(newEntry.UpstreamAPIKey)
		if upstreamKey == "" {
			continue
		}
		normalizedEntry := config.AmpUpstreamAPIKeyEntry{
			UpstreamAPIKey: upstreamKey,
			APIKeys:        normalizeAPIKeysList(newEntry.APIKeys),
		}
		if idx, ok := existing[upstreamKey]; ok {
			h.cfg.AmpCode.UpstreamAPIKeys[idx] = normalizedEntry
		} else {
			h.cfg.AmpCode.UpstreamAPIKeys = append(h.cfg.AmpCode.UpstreamAPIKeys, normalizedEntry)
			existing[upstreamKey] = len(h.cfg.AmpCode.UpstreamAPIKeys) - 1
		}
	}
	h.persist(c)
}
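
The add-or-update logic in `PatchAmpUpstreamAPIKeys` is an upsert keyed by the trimmed upstream key. A minimal sketch of the same pattern, assuming a hypothetical `entry` type in place of `config.AmpUpstreamAPIKeyEntry` (the per-entry API key list is copied as-is here rather than normalized):

```go
package main

import (
	"fmt"
	"strings"
)

// entry is a hypothetical stand-in for config.AmpUpstreamAPIKeyEntry.
type entry struct {
	UpstreamAPIKey string
	APIKeys        []string
}

// upsert replaces entries whose trimmed key already exists and appends the
// rest, recording each appended position so that a key repeated within the
// same request updates the entry that was just added.
func upsert(current, updates []entry) []entry {
	index := make(map[string]int, len(current))
	for i, e := range current {
		index[strings.TrimSpace(e.UpstreamAPIKey)] = i
	}
	for _, u := range updates {
		key := strings.TrimSpace(u.UpstreamAPIKey)
		if key == "" {
			continue // entries without a key are ignored
		}
		u.UpstreamAPIKey = key
		if i, ok := index[key]; ok {
			current[i] = u
			continue
		}
		current = append(current, u)
		index[key] = len(current) - 1
	}
	return current
}

func main() {
	current := []entry{{UpstreamAPIKey: "up-1", APIKeys: []string{"a"}}}
	current = upsert(current, []entry{
		{UpstreamAPIKey: " up-1 ", APIKeys: []string{"b"}}, // replaces up-1
		{UpstreamAPIKey: "up-2", APIKeys: []string{"c"}},   // appended
		{UpstreamAPIKey: "up-2", APIKeys: []string{"d"}},   // replaces the new up-2
	})
	fmt.Printf("%+v\n", current)
}
```

Updating the index after each append is what keeps duplicate keys inside one request from producing duplicate entries.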

// DeleteAmpUpstreamAPIKeys removes specified upstream API keys entries.
// Body must be JSON: {"value": ["<upstream-api-key>", ...]}.
// If "value" is an empty array, clears all entries.
// If JSON is invalid or "value" is missing/null, returns 400 and does not persist any change.
func (h *Handler) DeleteAmpUpstreamAPIKeys(c *gin.Context) {
	var body struct {
		Value []string `json:"value"`
	}
	if err := c.ShouldBindJSON(&body); err != nil {
		c.JSON(400, gin.H{"error": "invalid body"})
		return
	}

	if body.Value == nil {
		c.JSON(400, gin.H{"error": "missing value"})
		return
	}

	// Empty array means clear all
	if len(body.Value) == 0 {
		h.cfg.AmpCode.UpstreamAPIKeys = nil
		h.persist(c)
		return
	}

	toRemove := make(map[string]bool)
	for _, key := range body.Value {
		trimmed := strings.TrimSpace(key)
		if trimmed == "" {
			continue
		}
		toRemove[trimmed] = true
	}
	if len(toRemove) == 0 {
		c.JSON(400, gin.H{"error": "empty value"})
		return
	}

	newEntries := make([]config.AmpUpstreamAPIKeyEntry, 0, len(h.cfg.AmpCode.UpstreamAPIKeys))
	for _, entry := range h.cfg.AmpCode.UpstreamAPIKeys {
		if !toRemove[strings.TrimSpace(entry.UpstreamAPIKey)] {
			newEntries = append(newEntries, entry)
		}
	}
	h.cfg.AmpCode.UpstreamAPIKeys = newEntries
	h.persist(c)
}
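
`DeleteAmpUpstreamAPIKeys` relies on the decoder distinguishing a missing or `null` `"value"` (nil slice) from an empty array (non-nil, zero-length slice). The sketch below checks that distinction with `encoding/json` directly; it assumes gin's `ShouldBindJSON` is backed by `encoding/json`, which is its default unless the binary is built with an alternative JSON build tag. `classify` and its return labels are illustrative.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// deleteBody mirrors the anonymous request struct used by the handler.
type deleteBody struct {
	Value []string `json:"value"`
}

// classify (hypothetical) reports which branch of the handler a raw body
// would reach, ignoring the later "all entries blank" check.
func classify(raw string) string {
	var body deleteBody
	if err := json.Unmarshal([]byte(raw), &body); err != nil {
		return "invalid"
	}
	switch {
	case body.Value == nil:
		return "missing" // absent key or explicit null
	case len(body.Value) == 0:
		return "clear-all" // explicit empty array
	default:
		return "remove-listed"
	}
}

func main() {
	for _, raw := range []string{`{}`, `{"value":null}`, `{"value":[]}`, `{"value":["k"]}`} {
		fmt.Printf("%-18s -> %s\n", raw, classify(raw))
	}
}
```

The design choice is deliberate: a client that forgets the field gets a 400 instead of silently wiping every mapping.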

// normalizeAmpUpstreamAPIKeyEntries normalizes a list of upstream API key entries.
func normalizeAmpUpstreamAPIKeyEntries(entries []config.AmpUpstreamAPIKeyEntry) []config.AmpUpstreamAPIKeyEntry {
	if len(entries) == 0 {
		return nil
	}
	out := make([]config.AmpUpstreamAPIKeyEntry, 0, len(entries))
	for _, entry := range entries {
		upstreamKey := strings.TrimSpace(entry.UpstreamAPIKey)
		if upstreamKey == "" {
			continue
		}
		apiKeys := normalizeAPIKeysList(entry.APIKeys)
		out = append(out, config.AmpUpstreamAPIKeyEntry{
			UpstreamAPIKey: upstreamKey,
			APIKeys:        apiKeys,
		})
	}
	if len(out) == 0 {
		return nil
	}
	return out
}

// normalizeAPIKeysList trims and filters empty strings from a list of API keys.
func normalizeAPIKeysList(keys []string) []string {
	if len(keys) == 0 {
		return nil
	}
	out := make([]string, 0, len(keys))
	for _, k := range keys {
		trimmed := strings.TrimSpace(k)
		if trimmed != "" {
			out = append(out, trimmed)
		}
	}
	if len(out) == 0 {
		return nil
	}
	return out
}

@@ -59,6 +59,11 @@ func NewHandler(cfg *config.Config, configFilePath string, manager *coreauth.Man
	}
}

// NewHandlerWithoutConfigFilePath creates a new management handler instance without a config file path.
func NewHandlerWithoutConfigFilePath(cfg *config.Config, manager *coreauth.Manager) *Handler {
	return NewHandler(cfg, "", manager)
}

// SetConfig updates the in-memory config reference when the server hot-reloads.
func (h *Handler) SetConfig(cfg *config.Config) { h.cfg = cfg }

@@ -209,6 +209,94 @@ func (h *Handler) GetRequestErrorLogs(c *gin.Context) {
	c.JSON(http.StatusOK, gin.H{"files": files})
}

// GetRequestLogByID finds and downloads a request log file by its request ID.
// The ID is matched against the suffix of log file names (format: *-{requestID}.log).
func (h *Handler) GetRequestLogByID(c *gin.Context) {
	if h == nil {
		c.JSON(http.StatusInternalServerError, gin.H{"error": "handler unavailable"})
		return
	}
	if h.cfg == nil {
		c.JSON(http.StatusServiceUnavailable, gin.H{"error": "configuration unavailable"})
		return
	}

	dir := h.logDirectory()
	if strings.TrimSpace(dir) == "" {
		c.JSON(http.StatusInternalServerError, gin.H{"error": "log directory not configured"})
		return
	}

	requestID := strings.TrimSpace(c.Param("id"))
	if requestID == "" {
		requestID = strings.TrimSpace(c.Query("id"))
	}
	if requestID == "" {
		c.JSON(http.StatusBadRequest, gin.H{"error": "missing request ID"})
		return
	}
	if strings.ContainsAny(requestID, "/\\") {
		c.JSON(http.StatusBadRequest, gin.H{"error": "invalid request ID"})
		return
	}

	entries, err := os.ReadDir(dir)
	if err != nil {
		if os.IsNotExist(err) {
			c.JSON(http.StatusNotFound, gin.H{"error": "log directory not found"})
			return
		}
		c.JSON(http.StatusInternalServerError, gin.H{"error": fmt.Sprintf("failed to list log directory: %v", err)})
		return
	}

	suffix := "-" + requestID + ".log"
	var matchedFile string
	for _, entry := range entries {
		if entry.IsDir() {
			continue
		}
		name := entry.Name()
		if strings.HasSuffix(name, suffix) {
			matchedFile = name
			break
		}
	}

	if matchedFile == "" {
		c.JSON(http.StatusNotFound, gin.H{"error": "log file not found for the given request ID"})
		return
	}

	dirAbs, errAbs := filepath.Abs(dir)
	if errAbs != nil {
		c.JSON(http.StatusInternalServerError, gin.H{"error": fmt.Sprintf("failed to resolve log directory: %v", errAbs)})
		return
	}
	fullPath := filepath.Clean(filepath.Join(dirAbs, matchedFile))
	prefix := dirAbs + string(os.PathSeparator)
	if !strings.HasPrefix(fullPath, prefix) {
		c.JSON(http.StatusBadRequest, gin.H{"error": "invalid log file path"})
		return
	}

	info, errStat := os.Stat(fullPath)
	if errStat != nil {
		if os.IsNotExist(errStat) {
			c.JSON(http.StatusNotFound, gin.H{"error": "log file not found"})
			return
		}
		c.JSON(http.StatusInternalServerError, gin.H{"error": fmt.Sprintf("failed to read log file: %v", errStat)})
		return
	}
	if info.IsDir() {
		c.JSON(http.StatusBadRequest, gin.H{"error": "invalid log file"})
		return
	}

	c.FileAttachment(fullPath, matchedFile)
}

// DownloadRequestErrorLog downloads a specific error request log file by name.
func (h *Handler) DownloadRequestErrorLog(c *gin.Context) {
	if h == nil {

@@ -1,12 +1,25 @@
package management

import (
	"encoding/json"
	"net/http"
	"time"

	"github.com/gin-gonic/gin"
	"github.com/router-for-me/CLIProxyAPI/v6/internal/usage"
)

type usageExportPayload struct {
	Version    int                      `json:"version"`
	ExportedAt time.Time                `json:"exported_at"`
	Usage      usage.StatisticsSnapshot `json:"usage"`
}

type usageImportPayload struct {
	Version int                      `json:"version"`
	Usage   usage.StatisticsSnapshot `json:"usage"`
}

// GetUsageStatistics returns the in-memory request statistics snapshot.
func (h *Handler) GetUsageStatistics(c *gin.Context) {
	var snapshot usage.StatisticsSnapshot

@@ -18,3 +31,49 @@ func (h *Handler) GetUsageStatistics(c *gin.Context) {
		"failed_requests": snapshot.FailureCount,
	})
}

// ExportUsageStatistics returns a complete usage snapshot for backup/migration.
func (h *Handler) ExportUsageStatistics(c *gin.Context) {
	var snapshot usage.StatisticsSnapshot
	if h != nil && h.usageStats != nil {
		snapshot = h.usageStats.Snapshot()
	}
	c.JSON(http.StatusOK, usageExportPayload{
		Version:    1,
		ExportedAt: time.Now().UTC(),
		Usage:      snapshot,
	})
}

// ImportUsageStatistics merges a previously exported usage snapshot into memory.
func (h *Handler) ImportUsageStatistics(c *gin.Context) {
	if h == nil || h.usageStats == nil {
		c.JSON(http.StatusBadRequest, gin.H{"error": "usage statistics unavailable"})
		return
	}

	data, err := c.GetRawData()
	if err != nil {
		c.JSON(http.StatusBadRequest, gin.H{"error": "failed to read request body"})
		return
	}

	var payload usageImportPayload
	if err := json.Unmarshal(data, &payload); err != nil {
		c.JSON(http.StatusBadRequest, gin.H{"error": "invalid json"})
		return
	}
	if payload.Version != 0 && payload.Version != 1 {
		c.JSON(http.StatusBadRequest, gin.H{"error": "unsupported version"})
		return
	}

	result := h.usageStats.MergeSnapshot(payload.Usage)
	snapshot := h.usageStats.Snapshot()
	c.JSON(http.StatusOK, gin.H{
		"added":           result.Added,
		"skipped":         result.Skipped,
		"total_requests":  snapshot.TotalRequests,
		"failed_requests": snapshot.FailureCount,
	})
}
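
The import handler accepts payloads with `version` 1 and also payloads with no `version` field at all, because an absent integer decodes to 0. A minimal sketch of that gate, assuming a hypothetical `importPayload` that keeps the usage section as raw JSON instead of `usage.StatisticsSnapshot`:

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// importPayload is a hypothetical stand-in for usageImportPayload; the usage
// section is kept as raw JSON so the sketch has no project dependencies.
type importPayload struct {
	Version int             `json:"version"`
	Usage   json.RawMessage `json:"usage"`
}

// decodeImport applies the same two checks as the handler: the body must be
// valid JSON, and the version must be 0 (absent) or 1.
func decodeImport(data []byte) (importPayload, error) {
	var p importPayload
	if err := json.Unmarshal(data, &p); err != nil {
		return p, errors.New("invalid json")
	}
	if p.Version != 0 && p.Version != 1 {
		return p, errors.New("unsupported version")
	}
	return p, nil
}

func main() {
	for _, raw := range []string{
		`{"usage":{}}`,             // no version field: accepted
		`{"version":1,"usage":{}}`, // current export format: accepted
		`{"version":2,"usage":{}}`, // future format: rejected
	} {
		_, err := decodeImport([]byte(raw))
		fmt.Printf("%s -> %v\n", raw, err)
	}
}
```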

@@ -98,10 +98,11 @@ func captureRequestInfo(c *gin.Context) (*RequestInfo, error) {
 	}

 	return &RequestInfo{
-		URL:     url,
-		Method:  method,
-		Headers: headers,
-		Body:    body,
+		URL:       url,
+		Method:    method,
+		Headers:   headers,
+		Body:      body,
+		RequestID: logging.GetGinRequestID(c),
 	}, nil
 }

@@ -15,10 +15,11 @@ import (

 // RequestInfo holds essential details of an incoming HTTP request for logging purposes.
 type RequestInfo struct {
-	URL     string              // URL is the request URL.
-	Method  string              // Method is the HTTP method (e.g., GET, POST).
-	Headers map[string][]string // Headers contains the request headers.
-	Body    []byte              // Body is the raw request body.
+	URL       string              // URL is the request URL.
+	Method    string              // Method is the HTTP method (e.g., GET, POST).
+	Headers   map[string][]string // Headers contains the request headers.
+	Body      []byte              // Body is the raw request body.
+	RequestID string              // RequestID is the unique identifier for the request.
 }

 // ResponseWriterWrapper wraps the standard gin.ResponseWriter to intercept and log response data.

@@ -149,6 +150,7 @@ func (w *ResponseWriterWrapper) WriteHeader(statusCode int) {
 			w.requestInfo.Method,
 			w.requestInfo.Headers,
 			w.requestInfo.Body,
+			w.requestInfo.RequestID,
 		)
 		if err == nil {
 			w.streamWriter = streamWriter