mirror of

https://github.com/router-for-me/CLIProxyAPI.git

synced 2026-02-04 05:20:52 +08:00

Compare commits

45 Commits

| Author | SHA1 | Date | |
|---|---|---|---|
| | 33e53a2a56 | | |
| | cd5b80785f | | |
| | 54f71aa273 | | |
| | 3f949b7f84 | | |
| | 443c4538bb | | |
| | a7fc2ee4cf | | |
| | 8e749ac22d | | |
| | 69e09d9bc7 | | |
| | 06ad527e8c | | |
| | b7409dd2de | | |
| | 5ba325a8fc | | |
| | d502840f91 | | |
| | 99238a4b59 | | |
| | 6d43a2ff9a | | |
| | 3faa1ca9af | | |
| | 9d975e0375 | | |
| | 2a6d8b78d4 | | |
| | 671558a822 | | |
| | 26fbb77901 | | |
| | a277302262 | | |
| | 969c1a5b72 | | |
| | 872339bceb | | |
| | 5dc0dbc7aa | | |
| | 2b7ba54a2f | | |
| | 007c3304f2 | | |
| | e76ba0ede9 | | |
| | c06ac07e23 | | |
| | 66769ec657 | | |
| | f413feec61 | | |
| | 2e538e3486 | | |
| | 9617a7b0d6 | | |
| | 7569320770 | | |
| | 8d25cf0d75 | | |
| | 64e85e7019 | | |
| | 0c0aae1eac | | |
| | 5dcf7cb846 | | |
| | 5bf89dd757 | | |
| | 4442574e53 | | |
| | 71a6dffbb6 | | |
| | 24e8e20b59 | | |
| | a87f09bad2 | | |
| | bc6c4cdbfc | | |
| | 404546ce93 | | |
| | 6dd1cf1dd6 | | |
| | 9058d406a3 | | |

@@ -10,11 +10,11 @@ So you can use local or multi-account CLI access with OpenAI(include Responses)/

|

||||

|

||||

## Sponsor

|

||||

|

||||

[](https://z.ai/subscribe?ic=8JVLJQFSKB)

|

||||

[](https://z.ai/subscribe?ic=8JVLJQFSKB)

|

||||

|

||||

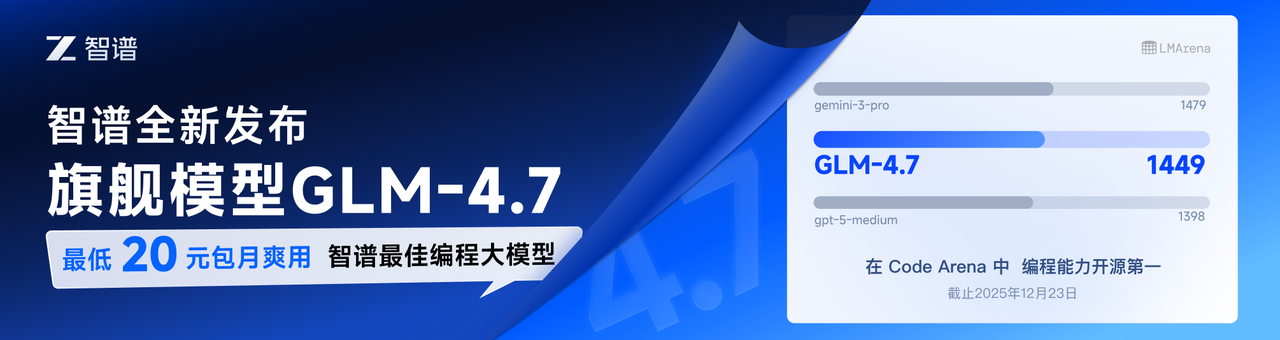

This project is sponsored by Z.ai, supporting us with their GLM CODING PLAN.

|

||||

|

||||

GLM CODING PLAN is a subscription service designed for AI coding, starting at just $3/month. It provides access to their flagship GLM-4.6 model across 10+ popular AI coding tools (Claude Code, Cline, Roo Code, etc.), offering developers top-tier, fast, and stable coding experiences.

|

||||

GLM CODING PLAN is a subscription service designed for AI coding, starting at just $3/month. It provides access to their flagship GLM-4.7 model across 10+ popular AI coding tools (Claude Code, Cline, Roo Code, etc.), offering developers top-tier, fast, and stable coding experiences.

|

||||

|

||||

Get 10% OFF GLM CODING PLAN:https://z.ai/subscribe?ic=8JVLJQFSKB

|

||||

|

||||

@@ -26,6 +26,10 @@ Get 10% OFF GLM CODING PLAN:https://z.ai/subscribe?ic=8JVLJQFSKB

|

||||

<td width="180"><a href="https://www.packyapi.com/register?aff=cliproxyapi"><img src="./assets/packycode.png" alt="PackyCode" width="150"></a></td>

|

||||

<td>Thanks to PackyCode for sponsoring this project! PackyCode is a reliable and efficient API relay service provider, offering relay services for Claude Code, Codex, Gemini, and more. PackyCode provides special discounts for our software users: register using <a href="https://www.packyapi.com/register?aff=cliproxyapi">this link</a> and enter the "cliproxyapi" promo code during recharge to get 10% off.</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td width="180"><a href="https://cubence.com/signup?code=CLIPROXYAPI&source=cpa"><img src="./assets/cubence.png" alt="Cubence" width="150"></a></td>

|

||||

<td>Thanks to Cubence for sponsoring this project! Cubence is a reliable and efficient API relay service provider, offering relay services for Claude Code, Codex, Gemini, and more. Cubence provides special discounts for our software users: register using <a href="https://cubence.com/signup?code=CLIPROXYAPI&source=cpa">this link</a> and enter the "CLIPROXYAPI" promo code during recharge to get 10% off.</td>

|

||||

</tr>

|

||||

</tbody>

|

||||

</table>

|

||||

|

||||

|

||||

@@ -10,11 +10,11 @@

|

||||

|

||||

## Sponsor

|

||||

|

||||

[](https://www.bigmodel.cn/claude-code?ic=RRVJPB5SII)

|

||||

[](https://www.bigmodel.cn/claude-code?ic=RRVJPB5SII)

|

||||

|

||||

This project is sponsored by Z.ai (Zhipu), which provides technical support to the project through its GLM CODING PLAN.

|

||||

|

||||

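GLM CODING PLAN is a subscription plan built for AI coding. Starting at just 20 yuan per month, it gives access to Zhipu's flagship GLM-4.6 model in more than ten mainstream AI coding tools such as Claude Code, Cline, and Roo Code, offering developers a top-tier coding experience.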

|

||||

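GLM CODING PLAN is a subscription plan built for AI coding. Starting at just 20 yuan per month, it gives access to Zhipu's flagship GLM-4.7 model in more than ten mainstream AI coding tools such as Claude Code, Cline, and Roo Code, offering developers a top-tier coding experience.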

|

||||

|

||||

Zhipu AI offers a special discount for users of this software. Purchase through the following link to get 10% off: https://www.bigmodel.cn/claude-code?ic=RRVJPB5SII

|

||||

|

||||

@@ -26,9 +26,14 @@ GLM CODING PLAN is a subscription plan built for AI coding, starting at just 20 yuan per month

|

||||

<td width="180"><a href="https://www.packyapi.com/register?aff=cliproxyapi"><img src="./assets/packycode.png" alt="PackyCode" width="150"></a></td>

|

||||

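<td>Thanks to PackyCode for sponsoring this project! PackyCode is a reliable and efficient API relay service provider, offering relay services for Claude Code, Codex, Gemini, and more. PackyCode provides a special discount for users of this software: register using <a href="https://www.packyapi.com/register?aff=cliproxyapi">this link</a> and enter the "cliproxyapi" promo code when topping up to get 10% off.</td>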

|

||||

</tr>

|

||||

<tr>

|

||||

<td width="180"><a href="https://cubence.com/signup?code=CLIPROXYAPI&source=cpa"><img src="./assets/cubence.png" alt="Cubence" width="150"></a></td>

|

||||

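<td>Thanks to Cubence for sponsoring this project! Cubence is a reliable and efficient API relay service provider, offering relay services for Claude Code, Codex, Gemini, and more. Cubence provides a special discount for users of this software: register using <a href="https://cubence.com/signup?code=CLIPROXYAPI&source=cpa">this link</a> and enter the "CLIPROXYAPI" promo code when topping up to get 10% off.</td>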

|

||||

</tr>

|

||||

</tbody>

|

||||

</table>

|

||||

|

||||

|

||||

## Features

|

||||

|

||||

- OpenAI/Gemini/Claude/Codex compatible API endpoints for CLI models

|

||||

|

||||

BIN

assets/cubence.png

Normal file


Binary file not shown.

|

After Width: | Height: | Size: 51 KiB |

@@ -39,6 +39,9 @@ api-keys:
 # Enable debug logging
 debug: false

+# When true, disable high-overhead HTTP middleware features to reduce per-request memory usage under high concurrency.
+commercial-mode: false
+
 # When true, write application logs to rotating files instead of stdout
 logging-to-file: false

@@ -73,6 +76,11 @@ routing:
 # When true, enable authentication for the WebSocket API (/v1/ws).
 ws-auth: false

+# Streaming behavior (SSE keep-alives + safe bootstrap retries).
+# streaming:
+#   keepalive-seconds: 15   # Default: 0 (disabled). <= 0 disables keep-alives.
+#   bootstrap-retries: 1    # Default: 0 (disabled). Retries before first byte is sent.
+
 # Gemini API keys
 # gemini-api-key:
 #   - api-key: "AIzaSy...01"

@@ -209,6 +209,94 @@ func (h *Handler) GetRequestErrorLogs(c *gin.Context) {
 	c.JSON(http.StatusOK, gin.H{"files": files})
 }

+// GetRequestLogByID finds and downloads a request log file by its request ID.
+// The ID is matched against the suffix of log file names (format: *-{requestID}.log).
+func (h *Handler) GetRequestLogByID(c *gin.Context) {
+	if h == nil {
+		c.JSON(http.StatusInternalServerError, gin.H{"error": "handler unavailable"})
+		return
+	}
+	if h.cfg == nil {
+		c.JSON(http.StatusServiceUnavailable, gin.H{"error": "configuration unavailable"})
+		return
+	}
+
+	dir := h.logDirectory()
+	if strings.TrimSpace(dir) == "" {
+		c.JSON(http.StatusInternalServerError, gin.H{"error": "log directory not configured"})
+		return
+	}
+
+	requestID := strings.TrimSpace(c.Param("id"))
+	if requestID == "" {
+		requestID = strings.TrimSpace(c.Query("id"))
+	}
+	if requestID == "" {
+		c.JSON(http.StatusBadRequest, gin.H{"error": "missing request ID"})
+		return
+	}
+	if strings.ContainsAny(requestID, "/\\") {
+		c.JSON(http.StatusBadRequest, gin.H{"error": "invalid request ID"})
+		return
+	}
+
+	entries, err := os.ReadDir(dir)
+	if err != nil {
+		if os.IsNotExist(err) {
+			c.JSON(http.StatusNotFound, gin.H{"error": "log directory not found"})
+			return
+		}
+		c.JSON(http.StatusInternalServerError, gin.H{"error": fmt.Sprintf("failed to list log directory: %v", err)})
+		return
+	}
+
+	suffix := "-" + requestID + ".log"
+	var matchedFile string
+	for _, entry := range entries {
+		if entry.IsDir() {
+			continue
+		}
+		name := entry.Name()
+		if strings.HasSuffix(name, suffix) {
+			matchedFile = name
+			break
+		}
+	}
+
+	if matchedFile == "" {
+		c.JSON(http.StatusNotFound, gin.H{"error": "log file not found for the given request ID"})
+		return
+	}
+
+	dirAbs, errAbs := filepath.Abs(dir)
+	if errAbs != nil {
+		c.JSON(http.StatusInternalServerError, gin.H{"error": fmt.Sprintf("failed to resolve log directory: %v", errAbs)})
+		return
+	}
+	fullPath := filepath.Clean(filepath.Join(dirAbs, matchedFile))
+	prefix := dirAbs + string(os.PathSeparator)
+	if !strings.HasPrefix(fullPath, prefix) {
+		c.JSON(http.StatusBadRequest, gin.H{"error": "invalid log file path"})
+		return
+	}
+
+	info, errStat := os.Stat(fullPath)
+	if errStat != nil {
+		if os.IsNotExist(errStat) {
+			c.JSON(http.StatusNotFound, gin.H{"error": "log file not found"})
+			return
+		}
+		c.JSON(http.StatusInternalServerError, gin.H{"error": fmt.Sprintf("failed to read log file: %v", errStat)})
+		return
+	}
+	if info.IsDir() {
+		c.JSON(http.StatusBadRequest, gin.H{"error": "invalid log file"})
+		return
+	}
+
+	c.FileAttachment(fullPath, matchedFile)
+}
+
 // DownloadRequestErrorLog downloads a specific error request log file by name.
 func (h *Handler) DownloadRequestErrorLog(c *gin.Context) {
 	if h == nil {

||||

@@ -98,10 +98,11 @@ func captureRequestInfo(c *gin.Context) (*RequestInfo, error) {
 	}

 	return &RequestInfo{
-		URL:     url,
-		Method:  method,
-		Headers: headers,
-		Body:    body,
+		URL:       url,
+		Method:    method,
+		Headers:   headers,
+		Body:      body,
+		RequestID: logging.GetGinRequestID(c),
 	}, nil
 }


@@ -15,10 +15,11 @@ import (

 // RequestInfo holds essential details of an incoming HTTP request for logging purposes.
 type RequestInfo struct {
-	URL     string              // URL is the request URL.
-	Method  string              // Method is the HTTP method (e.g., GET, POST).
-	Headers map[string][]string // Headers contains the request headers.
-	Body    []byte              // Body is the raw request body.
+	URL       string              // URL is the request URL.
+	Method    string              // Method is the HTTP method (e.g., GET, POST).
+	Headers   map[string][]string // Headers contains the request headers.
+	Body      []byte              // Body is the raw request body.
+	RequestID string              // RequestID is the unique identifier for the request.
 }

 // ResponseWriterWrapper wraps the standard gin.ResponseWriter to intercept and log response data.

@@ -149,6 +150,7 @@ func (w *ResponseWriterWrapper) WriteHeader(statusCode int) {

|

||||

w.requestInfo.Method,

|

||||

w.requestInfo.Headers,

|

||||

w.requestInfo.Body,

|

||||

w.requestInfo.RequestID,

|

||||

)

|

||||

if err == nil {

|

||||

w.streamWriter = streamWriter

|

||||

@@ -346,7 +348,7 @@ func (w *ResponseWriterWrapper) logRequest(statusCode int, headers map[string][]
 	}

 	if loggerWithOptions, ok := w.logger.(interface {
-		LogRequestWithOptions(string, string, map[string][]string, []byte, int, map[string][]string, []byte, []byte, []byte, []*interfaces.ErrorMessage, bool) error
+		LogRequestWithOptions(string, string, map[string][]string, []byte, int, map[string][]string, []byte, []byte, []byte, []*interfaces.ErrorMessage, bool, string) error
 	}); ok {
 		return loggerWithOptions.LogRequestWithOptions(
 			w.requestInfo.URL,
@@ -360,6 +362,7 @@ func (w *ResponseWriterWrapper) logRequest(statusCode int, headers map[string][]
 			apiResponseBody,
 			apiResponseErrors,
 			forceLog,
+			w.requestInfo.RequestID,
 		)
 	}

@@ -374,5 +377,6 @@ func (w *ResponseWriterWrapper) logRequest(statusCode int, headers map[string][]
 		apiRequestBody,
 		apiResponseBody,
 		apiResponseErrors,
+		w.requestInfo.RequestID,
 	)
 }

||||
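The `logRequest` change above relies on a Go idiom: asserting a value against an anonymous interface to find out whether it offers an optional method, and falling back to the base method otherwise. Below is a minimal sketch of that idiom. The `Logger`, `basicLogger`, `richLogger`, and `logWith` names are hypothetical stand-ins and are far simpler than the real `RequestLogger`.

```go
package main

import "fmt"

// Logger is a simplified stand-in for the base logging interface.
type Logger interface {
	Log(msg string) string
}

// basicLogger only implements the base interface.
type basicLogger struct{}

func (basicLogger) Log(msg string) string { return "basic: " + msg }

// richLogger additionally offers the optional LogWithID method.
type richLogger struct{ basicLogger }

func (richLogger) LogWithID(msg, requestID string) string {
	return "rich[" + requestID + "]: " + msg
}

// logWith prefers the optional method when the concrete logger provides it
// with exactly the asserted signature, and falls back to Log otherwise.
func logWith(l Logger, msg, requestID string) string {
	if ext, ok := l.(interface {
		LogWithID(string, string) string
	}); ok {
		return ext.LogWithID(msg, requestID)
	}
	return l.Log(msg)
}

func main() {
	fmt.Println(logWith(basicLogger{}, "hello", "a1b2c3d4"))
	fmt.Println(logWith(richLogger{}, "hello", "a1b2c3d4"))
}
```

One consequence of this pattern: the assertion only succeeds when the method signature matches exactly, so an implementation that still has an older parameter list compiles fine but silently takes the fallback path. That is presumably why the diff updates the asserted signature together with its implementations.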

@@ -279,16 +279,23 @@ func (m *AmpModule) hasModelMappingsChanged(old *config.AmpCode, new *config.Amp
 		return true
 	}

-	// Build map for efficient comparison
-	oldMap := make(map[string]string, len(old.ModelMappings))
+	// Build map for efficient and robust comparison
+	type mappingInfo struct {
+		to    string
+		regex bool
+	}
+	oldMap := make(map[string]mappingInfo, len(old.ModelMappings))
 	for _, mapping := range old.ModelMappings {
-		oldMap[strings.TrimSpace(mapping.From)] = strings.TrimSpace(mapping.To)
+		oldMap[strings.TrimSpace(mapping.From)] = mappingInfo{
+			to:    strings.TrimSpace(mapping.To),
+			regex: mapping.Regex,
+		}
 	}

 	for _, mapping := range new.ModelMappings {
 		from := strings.TrimSpace(mapping.From)
 		to := strings.TrimSpace(mapping.To)
-		if oldTo, exists := oldMap[from]; !exists || oldTo != to {
+		if oldVal, exists := oldMap[from]; !exists || oldVal.to != to || oldVal.regex != mapping.Regex {
 			return true
 		}
 	}

||||
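The hunk above widens the change detection so that toggling `regex` on an otherwise identical mapping counts as a change. Here is a minimal, self-contained sketch of that comparison. The `Mapping` type and `mappingsChanged` function are simplified stand-ins, and the up-front length check is an assumption, since the lines before the hunk are not visible in this diff.

```go
package main

import (
	"fmt"
	"strings"
)

// Mapping is a simplified stand-in for config.AmpModelMapping.
type Mapping struct {
	From  string
	To    string
	Regex bool
}

// mappingsChanged reports whether two mapping lists differ in target or regex flag.
func mappingsChanged(oldM, newM []Mapping) bool {
	// Assumed pre-check: differing lengths always count as a change.
	if len(oldM) != len(newM) {
		return true
	}

	type info struct {
		to    string
		regex bool
	}
	seen := make(map[string]info, len(oldM))
	for _, m := range oldM {
		seen[strings.TrimSpace(m.From)] = info{to: strings.TrimSpace(m.To), regex: m.Regex}
	}
	for _, m := range newM {
		want := info{to: strings.TrimSpace(m.To), regex: m.Regex}
		// Structs with comparable fields can be compared with != directly.
		if prev, ok := seen[strings.TrimSpace(m.From)]; !ok || prev != want {
			return true
		}
	}
	return false
}

func main() {
	before := []Mapping{{From: "^gpt-5.*$", To: "gemini-2.5-pro"}}
	after := []Mapping{{From: "^gpt-5.*$", To: "gemini-2.5-pro", Regex: true}}
	fmt.Println(mappingsChanged(before, before), mappingsChanged(before, after))
}
```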

@@ -3,6 +3,7 @@
 package amp

 import (
+	"regexp"
 	"strings"
 	"sync"

@@ -26,13 +27,15 @@ type ModelMapper interface {
 // DefaultModelMapper implements ModelMapper with thread-safe mapping storage.
 type DefaultModelMapper struct {
 	mu       sync.RWMutex
-	mappings map[string]string // from -> to (normalized lowercase keys)
+	mappings map[string]string // exact: from -> to (normalized lowercase keys)
+	regexps  []regexMapping    // regex rules evaluated in order
 }

 // NewModelMapper creates a new model mapper with the given initial mappings.
 func NewModelMapper(mappings []config.AmpModelMapping) *DefaultModelMapper {
 	m := &DefaultModelMapper{
 		mappings: make(map[string]string),
+		regexps:  nil,
 	}
 	m.UpdateMappings(mappings)
 	return m
@@ -55,7 +58,18 @@ func (m *DefaultModelMapper) MapModel(requestedModel string) string {
 	// Check for direct mapping
 	targetModel, exists := m.mappings[normalizedRequest]
 	if !exists {
-		return ""
+		// Try regex mappings in order
+		base, _ := util.NormalizeThinkingModel(requestedModel)
+		for _, rm := range m.regexps {
+			if rm.re.MatchString(requestedModel) || (base != "" && rm.re.MatchString(base)) {
+				targetModel = rm.to
+				exists = true
+				break
+			}
+		}
+		if !exists {
+			return ""
+		}
 	}

 	// Verify target model has available providers
@@ -78,6 +92,7 @@ func (m *DefaultModelMapper) UpdateMappings(mappings []config.AmpModelMapping) {

 	// Clear and rebuild mappings
 	m.mappings = make(map[string]string, len(mappings))
+	m.regexps = make([]regexMapping, 0, len(mappings))

 	for _, mapping := range mappings {
 		from := strings.TrimSpace(mapping.From)
@@ -88,16 +103,30 @@ func (m *DefaultModelMapper) UpdateMappings(mappings []config.AmpModelMapping) {
 			continue
 		}

-		// Store with normalized lowercase key for case-insensitive lookup
-		normalizedFrom := strings.ToLower(from)
-		m.mappings[normalizedFrom] = to
-
-		log.Debugf("amp model mapping registered: %s -> %s", from, to)
+		if mapping.Regex {
+			// Compile case-insensitive regex; wrap with (?i) to match behavior of exact lookups
+			pattern := "(?i)" + from
+			re, err := regexp.Compile(pattern)
+			if err != nil {
+				log.Warnf("amp model mapping: invalid regex %q: %v", from, err)
+				continue
+			}
+			m.regexps = append(m.regexps, regexMapping{re: re, to: to})
+			log.Debugf("amp model regex mapping registered: /%s/ -> %s", from, to)
+		} else {
+			// Store with normalized lowercase key for case-insensitive lookup
+			normalizedFrom := strings.ToLower(from)
+			m.mappings[normalizedFrom] = to
+			log.Debugf("amp model mapping registered: %s -> %s", from, to)
+		}
 	}

 	if len(m.mappings) > 0 {
 		log.Infof("amp model mapping: loaded %d mapping(s)", len(m.mappings))
 	}
+	if n := len(m.regexps); n > 0 {
+		log.Infof("amp model mapping: loaded %d regex mapping(s)", n)
+	}
 }

 // GetMappings returns a copy of current mappings (for debugging/status).
@@ -111,3 +140,8 @@ func (m *DefaultModelMapper) GetMappings() map[string]string {
 	}
 	return result
 }
+
+type regexMapping struct {
+	re *regexp.Regexp
+	to string
+}

||||
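The mapper hunks above implement a two-tier lookup: exact, lower-cased keys are tried first, then regex rules in configuration order, each compiled with a `(?i)` prefix so that they are case-insensitive like the exact lookups. The sketch below isolates that resolution order. The `rule`, `mapper`, `newMapper`, and `lookup` names are hypothetical, and the sketch deliberately omits the locking, the thinking-suffix normalization (`util.NormalizeThinkingModel`), and the provider-availability check that the real `MapModel` performs.

```go
package main

import (
	"fmt"
	"regexp"
	"strings"
)

// rule is a simplified stand-in for config.AmpModelMapping.
type rule struct {
	From, To string
	Regex    bool
}

type compiledRule struct {
	re *regexp.Regexp
	to string
}

type mapper struct {
	exact   map[string]string // lower-cased from -> to
	regexps []compiledRule    // evaluated in configuration order
}

func newMapper(rules []rule) *mapper {
	m := &mapper{exact: make(map[string]string, len(rules))}
	for _, r := range rules {
		from, to := strings.TrimSpace(r.From), strings.TrimSpace(r.To)
		if from == "" || to == "" {
			continue
		}
		if !r.Regex {
			m.exact[strings.ToLower(from)] = to
			continue
		}
		// (?i) makes the pattern case-insensitive, matching the exact lookups.
		re, err := regexp.Compile("(?i)" + from)
		if err != nil {
			continue // invalid patterns are skipped rather than aborting the load
		}
		m.regexps = append(m.regexps, compiledRule{re: re, to: to})
	}
	return m
}

// lookup returns the mapped model, or "" when no rule applies.
func (m *mapper) lookup(model string) string {
	model = strings.TrimSpace(model)
	if to, ok := m.exact[strings.ToLower(model)]; ok {
		return to // exact matches take precedence over regex rules
	}
	for _, c := range m.regexps {
		if c.re.MatchString(model) {
			return c.to // first matching regex wins
		}
	}
	return ""
}

func main() {
	m := newMapper([]rule{
		{From: "gpt-5", To: "claude-sonnet-4"},
		{From: "^gpt-5.*$", To: "gemini-2.5-pro", Regex: true},
	})
	fmt.Println(m.lookup("gpt-5"), m.lookup("GPT-5-mini"))
}
```

Keeping exact entries in a map and regex rules in a slice keeps the common exact lookup cheap and makes the regex ordering explicit, which matters when several patterns could match the same model name.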

@@ -203,3 +203,81 @@ func TestModelMapper_GetMappings_ReturnsCopy(t *testing.T) {

|

||||

t.Error("Original map was modified")

|

||||

}

|

||||

}

|

||||

|

||||

func TestModelMapper_Regex_MatchBaseWithoutParens(t *testing.T) {

|

||||

reg := registry.GetGlobalRegistry()

|

||||

reg.RegisterClient("test-client-regex-1", "gemini", []*registry.ModelInfo{

|

||||

{ID: "gemini-2.5-pro", OwnedBy: "google", Type: "gemini"},

|

||||

})

|

||||

defer reg.UnregisterClient("test-client-regex-1")

|

||||

|

||||

mappings := []config.AmpModelMapping{

|

||||

{From: "^gpt-5$", To: "gemini-2.5-pro", Regex: true},

|

||||

}

|

||||

|

||||

mapper := NewModelMapper(mappings)

|

||||

|

||||

// Incoming model has reasoning suffix but should match base via regex

|

||||

result := mapper.MapModel("gpt-5(high)")

|

||||

if result != "gemini-2.5-pro" {

|

||||

t.Errorf("Expected gemini-2.5-pro, got %s", result)

|

||||

}

|

||||

}

|

||||

|

||||

func TestModelMapper_Regex_ExactPrecedence(t *testing.T) {

|

||||

reg := registry.GetGlobalRegistry()

|

||||

reg.RegisterClient("test-client-regex-2", "claude", []*registry.ModelInfo{

|

||||

{ID: "claude-sonnet-4", OwnedBy: "anthropic", Type: "claude"},

|

||||

})

|

||||

reg.RegisterClient("test-client-regex-3", "gemini", []*registry.ModelInfo{

|

||||

{ID: "gemini-2.5-pro", OwnedBy: "google", Type: "gemini"},

|

||||

})

|

||||

defer reg.UnregisterClient("test-client-regex-2")

|

||||

defer reg.UnregisterClient("test-client-regex-3")

|

||||

|

||||

mappings := []config.AmpModelMapping{

|

||||

{From: "gpt-5", To: "claude-sonnet-4"}, // exact

|

||||

{From: "^gpt-5.*$", To: "gemini-2.5-pro", Regex: true}, // regex

|

||||

}

|

||||

|

||||

mapper := NewModelMapper(mappings)

|

||||

|

||||

// Exact match should win over regex

|

||||

result := mapper.MapModel("gpt-5")

|

||||

if result != "claude-sonnet-4" {

|

||||

t.Errorf("Expected claude-sonnet-4, got %s", result)

|

||||

}

|

||||

}

|

||||

|

||||

func TestModelMapper_Regex_InvalidPattern_Skipped(t *testing.T) {

|

||||

// Invalid regex should be skipped and not cause panic

|

||||

mappings := []config.AmpModelMapping{

|

||||

{From: "(", To: "target", Regex: true},

|

||||

}

|

||||

|

||||

mapper := NewModelMapper(mappings)

|

||||

|

||||

result := mapper.MapModel("anything")

|

||||

if result != "" {

|

||||

t.Errorf("Expected empty result due to invalid regex, got %s", result)

|

||||

}

|

||||

}

|

||||

|

||||

func TestModelMapper_Regex_CaseInsensitive(t *testing.T) {

|

||||

reg := registry.GetGlobalRegistry()

|

||||

reg.RegisterClient("test-client-regex-4", "claude", []*registry.ModelInfo{

|

||||

{ID: "claude-sonnet-4", OwnedBy: "anthropic", Type: "claude"},

|

||||

})

|

||||

defer reg.UnregisterClient("test-client-regex-4")

|

||||

|

||||

mappings := []config.AmpModelMapping{

|

||||

{From: "^CLAUDE-OPUS-.*$", To: "claude-sonnet-4", Regex: true},

|

||||

}

|

||||

|

||||

mapper := NewModelMapper(mappings)

|

||||

|

||||

result := mapper.MapModel("claude-opus-4.5")

|

||||

if result != "claude-sonnet-4" {

|

||||

t.Errorf("Expected claude-sonnet-4, got %s", result)

|

||||

}

|

||||

}

|

||||

|

||||

@@ -209,13 +209,15 @@ func NewServer(cfg *config.Config, authManager *auth.Manager, accessManager *sdk
 	// Resolve logs directory relative to the configuration file directory.
 	var requestLogger logging.RequestLogger
 	var toggle func(bool)
-	if optionState.requestLoggerFactory != nil {
-		requestLogger = optionState.requestLoggerFactory(cfg, configFilePath)
-	}
-	if requestLogger != nil {
-		engine.Use(middleware.RequestLoggingMiddleware(requestLogger))
-		if setter, ok := requestLogger.(interface{ SetEnabled(bool) }); ok {
-			toggle = setter.SetEnabled
+	if !cfg.CommercialMode {
+		if optionState.requestLoggerFactory != nil {
+			requestLogger = optionState.requestLoggerFactory(cfg, configFilePath)
+		}
+		if requestLogger != nil {
+			engine.Use(middleware.RequestLoggingMiddleware(requestLogger))
+			if setter, ok := requestLogger.(interface{ SetEnabled(bool) }); ok {
+				toggle = setter.SetEnabled
+			}
 		}
 	}

@@ -518,6 +520,7 @@ func (s *Server) registerManagementRoutes() {
 		mgmt.DELETE("/logs", s.mgmt.DeleteLogs)
 		mgmt.GET("/request-error-logs", s.mgmt.GetRequestErrorLogs)
 		mgmt.GET("/request-error-logs/:name", s.mgmt.DownloadRequestErrorLog)
+		mgmt.GET("/request-log-by-id/:id", s.mgmt.GetRequestLogByID)
 		mgmt.GET("/request-log", s.mgmt.GetRequestLog)
 		mgmt.PUT("/request-log", s.mgmt.PutRequestLog)
 		mgmt.PATCH("/request-log", s.mgmt.PutRequestLog)

@@ -39,6 +39,9 @@ type Config struct {
 	// Debug enables or disables debug-level logging and other debug features.
 	Debug bool `yaml:"debug" json:"debug"`

+	// CommercialMode disables high-overhead HTTP middleware features to minimize per-request memory usage.
+	CommercialMode bool `yaml:"commercial-mode" json:"commercial-mode"`
+
 	// LoggingToFile controls whether application logs are written to rotating files or stdout.
 	LoggingToFile bool `yaml:"logging-to-file" json:"logging-to-file"`

@@ -144,6 +147,11 @@ type AmpModelMapping struct {
 	// To is the target model name to route to (e.g., "claude-sonnet-4").
 	// The target model must have available providers in the registry.
 	To string `yaml:"to" json:"to"`
+
+	// Regex indicates whether the 'from' field should be interpreted as a regular
+	// expression for matching model names. When true, this mapping is evaluated
+	// after exact matches and in the order provided. Defaults to false (exact match).
+	Regex bool `yaml:"regex,omitempty" json:"regex,omitempty"`
 }

 // AmpCode groups Amp CLI integration settings including upstream routing,

@@ -22,6 +22,21 @@ type SDKConfig struct {

 	// Access holds request authentication provider configuration.
 	Access AccessConfig `yaml:"auth,omitempty" json:"auth,omitempty"`
+
+	// Streaming configures server-side streaming behavior (keep-alives and safe bootstrap retries).
+	Streaming StreamingConfig `yaml:"streaming" json:"streaming"`
+}
+
+// StreamingConfig holds server streaming behavior configuration.
+type StreamingConfig struct {
+	// KeepAliveSeconds controls how often the server emits SSE heartbeats (": keep-alive\n\n").
+	// nil means default (15 seconds). <= 0 disables keep-alives.
+	KeepAliveSeconds *int `yaml:"keepalive-seconds,omitempty" json:"keepalive-seconds,omitempty"`
+
+	// BootstrapRetries controls how many times the server may retry a streaming request before any bytes are sent,
+	// to allow auth rotation / transient recovery.
+	// nil means default (2). 0 disables bootstrap retries.
+	BootstrapRetries *int `yaml:"bootstrap-retries,omitempty" json:"bootstrap-retries,omitempty"`
 }

 // AccessConfig groups request authentication providers.

||||
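`StreamingConfig` uses `*int` fields so that "key omitted" (nil) can be told apart from "explicitly set to 0". The sketch below shows one way to resolve such fields. The `resolve` and `keepAliveEnabled` helpers are hypothetical and the consuming code is not part of this diff. Note that the struct comments name 15 seconds and 2 retries as the nil defaults, while the example config comments say the default is 0 (disabled). The diff does not show which one applies, so the fallback is passed in explicitly here instead of being hard-coded.

```go
package main

import "fmt"

// StreamingConfig mirrors the shape of the struct in the diff, without tags.
type StreamingConfig struct {
	KeepAliveSeconds *int
	BootstrapRetries *int
}

// resolve returns the configured value, or fallback when the key was omitted (nil).
func resolve(v *int, fallback int) int {
	if v == nil {
		return fallback
	}
	return *v
}

// keepAliveEnabled applies the "<= 0 disables keep-alives" rule from the comments.
func keepAliveEnabled(seconds int) bool {
	return seconds > 0
}

func main() {
	zero := 0
	omitted := StreamingConfig{}
	disabled := StreamingConfig{KeepAliveSeconds: &zero}

	// The fallback of 15 is taken from the struct comment; treat it as an assumption.
	fmt.Println(keepAliveEnabled(resolve(omitted.KeepAliveSeconds, 15)))
	fmt.Println(keepAliveEnabled(resolve(disabled.KeepAliveSeconds, 15)))
}
```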

@@ -7,6 +7,7 @@ import (
 	"fmt"
 	"net/http"
 	"runtime/debug"
+	"strings"
 	"time"

 	"github.com/gin-gonic/gin"
@@ -14,11 +15,24 @@ import (
 	log "github.com/sirupsen/logrus"
 )

+// aiAPIPrefixes defines path prefixes for AI API requests that should have request ID tracking.
+var aiAPIPrefixes = []string{
+	"/v1/chat/completions",
+	"/v1/completions",
+	"/v1/messages",
+	"/v1/responses",
+	"/v1beta/models/",
+	"/api/provider/",
+}
+
 const skipGinLogKey = "__gin_skip_request_logging__"

 // GinLogrusLogger returns a Gin middleware handler that logs HTTP requests and responses
 // using logrus. It captures request details including method, path, status code, latency,
-// client IP, and any error messages, formatting them in a Gin-style log format.
+// client IP, and any error messages. Request ID is only added for AI API requests.
+//
+// Output format (AI API): [2025-12-23 20:14:10] [info ] | a1b2c3d4 | 200 | 23.559s | ...
+// Output format (others): [2025-12-23 20:14:10] [info ] | -------- | 200 | 23.559s | ...
 //
 // Returns:
 // - gin.HandlerFunc: A middleware handler for request logging
@@ -28,6 +42,15 @@ func GinLogrusLogger() gin.HandlerFunc {
 		path := c.Request.URL.Path
 		raw := util.MaskSensitiveQuery(c.Request.URL.RawQuery)

+		// Only generate request ID for AI API paths
+		var requestID string
+		if isAIAPIPath(path) {
+			requestID = GenerateRequestID()
+			SetGinRequestID(c, requestID)
+			ctx := WithRequestID(c.Request.Context(), requestID)
+			c.Request = c.Request.WithContext(ctx)
+		}
+
 		c.Next()

 		if shouldSkipGinRequestLogging(c) {
@@ -49,23 +72,38 @@ func GinLogrusLogger() gin.HandlerFunc {
 		clientIP := c.ClientIP()
 		method := c.Request.Method
 		errorMessage := c.Errors.ByType(gin.ErrorTypePrivate).String()
-		timestamp := time.Now().Format("2006/01/02 - 15:04:05")
-		logLine := fmt.Sprintf("[GIN] %s | %3d | %13v | %15s | %-7s \"%s\"", timestamp, statusCode, latency, clientIP, method, path)
+
+		if requestID == "" {
+			requestID = "--------"
+		}
+		logLine := fmt.Sprintf("%3d | %13v | %15s | %-7s \"%s\"", statusCode, latency, clientIP, method, path)
 		if errorMessage != "" {
 			logLine = logLine + " | " + errorMessage
 		}

+		entry := log.WithField("request_id", requestID)
+
 		switch {
 		case statusCode >= http.StatusInternalServerError:
-			log.Error(logLine)
+			entry.Error(logLine)
 		case statusCode >= http.StatusBadRequest:
-			log.Warn(logLine)
+			entry.Warn(logLine)
 		default:
-			log.Info(logLine)
+			entry.Info(logLine)
 		}
 	}
 }
+
+// isAIAPIPath checks if the given path is an AI API endpoint that should have request ID tracking.
+func isAIAPIPath(path string) bool {
+	for _, prefix := range aiAPIPrefixes {
+		if strings.HasPrefix(path, prefix) {
+			return true
+		}
+	}
+	return false
+}

 // GinLogrusRecovery returns a Gin middleware handler that recovers from panics and logs
 // them using logrus. When a panic occurs, it captures the panic value, stack trace,
 // and request path, then returns a 500 Internal Server Error response to the client.

||||

@@ -24,7 +24,8 @@ var (
 )

 // LogFormatter defines a custom log format for logrus.
-// This formatter adds timestamp, level, and source location to each log entry.
+// This formatter adds timestamp, level, request ID, and source location to each log entry.
+// Format: [2025-12-23 20:14:04] [debug] [manager.go:524] | a1b2c3d4 | Use API key sk-9...0RHO for model gpt-5.2
 type LogFormatter struct{}

 // Format renders a single log entry with custom formatting.
@@ -39,11 +40,22 @@ func (m *LogFormatter) Format(entry *log.Entry) ([]byte, error) {
 	timestamp := entry.Time.Format("2006-01-02 15:04:05")
 	message := strings.TrimRight(entry.Message, "\r\n")

+	reqID := "--------"
+	if id, ok := entry.Data["request_id"].(string); ok && id != "" {
+		reqID = id
+	}
+
+	level := entry.Level.String()
+	if level == "warning" {
+		level = "warn"
+	}
+	levelStr := fmt.Sprintf("%-5s", level)
+
 	var formatted string
 	if entry.Caller != nil {
-		formatted = fmt.Sprintf("[%s] [%s] [%s:%d] %s\n", timestamp, entry.Level, filepath.Base(entry.Caller.File), entry.Caller.Line, message)
+		formatted = fmt.Sprintf("[%s] [%s] [%s] [%s:%d] %s\n", timestamp, reqID, levelStr, filepath.Base(entry.Caller.File), entry.Caller.Line, message)
 	} else {
-		formatted = fmt.Sprintf("[%s] [%s] %s\n", timestamp, entry.Level, message)
+		formatted = fmt.Sprintf("[%s] [%s] [%s] %s\n", timestamp, reqID, levelStr, message)
 	}
 	buffer.WriteString(formatted)


||||

@@ -43,10 +43,11 @@ type RequestLogger interface {
 	// - response: The raw response data
 	// - apiRequest: The API request data
 	// - apiResponse: The API response data
+	// - requestID: Optional request ID for log file naming
 	//
 	// Returns:
 	// - error: An error if logging fails, nil otherwise
-	LogRequest(url, method string, requestHeaders map[string][]string, body []byte, statusCode int, responseHeaders map[string][]string, response, apiRequest, apiResponse []byte, apiResponseErrors []*interfaces.ErrorMessage) error
+	LogRequest(url, method string, requestHeaders map[string][]string, body []byte, statusCode int, responseHeaders map[string][]string, response, apiRequest, apiResponse []byte, apiResponseErrors []*interfaces.ErrorMessage, requestID string) error

 	// LogStreamingRequest initiates logging for a streaming request and returns a writer for chunks.
 	//
@@ -55,11 +56,12 @@ type RequestLogger interface {
 	// - method: The HTTP method
 	// - headers: The request headers
 	// - body: The request body
+	// - requestID: Optional request ID for log file naming
 	//
 	// Returns:
 	// - StreamingLogWriter: A writer for streaming response chunks
 	// - error: An error if logging initialization fails, nil otherwise
-	LogStreamingRequest(url, method string, headers map[string][]string, body []byte) (StreamingLogWriter, error)
+	LogStreamingRequest(url, method string, headers map[string][]string, body []byte, requestID string) (StreamingLogWriter, error)

 	// IsEnabled returns whether request logging is currently enabled.
 	//
@@ -177,20 +179,21 @@ func (l *FileRequestLogger) SetEnabled(enabled bool) {
 // - response: The raw response data
 // - apiRequest: The API request data
 // - apiResponse: The API response data
+// - requestID: Optional request ID for log file naming
 //
 // Returns:
 // - error: An error if logging fails, nil otherwise
-func (l *FileRequestLogger) LogRequest(url, method string, requestHeaders map[string][]string, body []byte, statusCode int, responseHeaders map[string][]string, response, apiRequest, apiResponse []byte, apiResponseErrors []*interfaces.ErrorMessage) error {
-	return l.logRequest(url, method, requestHeaders, body, statusCode, responseHeaders, response, apiRequest, apiResponse, apiResponseErrors, false)
+func (l *FileRequestLogger) LogRequest(url, method string, requestHeaders map[string][]string, body []byte, statusCode int, responseHeaders map[string][]string, response, apiRequest, apiResponse []byte, apiResponseErrors []*interfaces.ErrorMessage, requestID string) error {
+	return l.logRequest(url, method, requestHeaders, body, statusCode, responseHeaders, response, apiRequest, apiResponse, apiResponseErrors, false, requestID)
 }

 // LogRequestWithOptions logs a request with optional forced logging behavior.
 // The force flag allows writing error logs even when regular request logging is disabled.

|

||||

func (l *FileRequestLogger) LogRequestWithOptions(url, method string, requestHeaders map[string][]string, body []byte, statusCode int, responseHeaders map[string][]string, response, apiRequest, apiResponse []byte, apiResponseErrors []*interfaces.ErrorMessage, force bool) error {

|

||||

return l.logRequest(url, method, requestHeaders, body, statusCode, responseHeaders, response, apiRequest, apiResponse, apiResponseErrors, force)

|

||||

func (l *FileRequestLogger) LogRequestWithOptions(url, method string, requestHeaders map[string][]string, body []byte, statusCode int, responseHeaders map[string][]string, response, apiRequest, apiResponse []byte, apiResponseErrors []*interfaces.ErrorMessage, force bool, requestID string) error {

|

||||

return l.logRequest(url, method, requestHeaders, body, statusCode, responseHeaders, response, apiRequest, apiResponse, apiResponseErrors, force, requestID)

|

||||

}

|

||||

|

||||

func (l *FileRequestLogger) logRequest(url, method string, requestHeaders map[string][]string, body []byte, statusCode int, responseHeaders map[string][]string, response, apiRequest, apiResponse []byte, apiResponseErrors []*interfaces.ErrorMessage, force bool) error {

|

||||

func (l *FileRequestLogger) logRequest(url, method string, requestHeaders map[string][]string, body []byte, statusCode int, responseHeaders map[string][]string, response, apiRequest, apiResponse []byte, apiResponseErrors []*interfaces.ErrorMessage, force bool, requestID string) error {

|

||||

if !l.enabled && !force {

|

||||

return nil

|

||||

}

|

||||

@@ -200,10 +203,10 @@ func (l *FileRequestLogger) logRequest(url, method string, requestHeaders map[st

|

||||

return fmt.Errorf("failed to create logs directory: %w", errEnsure)

|

||||

}

|

||||

|

||||

// Generate filename

|

||||

filename := l.generateFilename(url)

|

||||

// Generate filename with request ID

|

||||

filename := l.generateFilename(url, requestID)

|

||||

if force && !l.enabled {

|

||||

filename = l.generateErrorFilename(url)

|

||||

filename = l.generateErrorFilename(url, requestID)

|

||||

}

|

||||

filePath := filepath.Join(l.logsDir, filename)

|

||||

|

||||

@@ -271,11 +274,12 @@ func (l *FileRequestLogger) logRequest(url, method string, requestHeaders map[st

|

||||

// - method: The HTTP method

|

||||

// - headers: The request headers

|

||||

// - body: The request body

|

||||

// - requestID: Optional request ID for log file naming

|

||||

//

|

||||

// Returns:

|

||||

// - StreamingLogWriter: A writer for streaming response chunks

|

||||

// - error: An error if logging initialization fails, nil otherwise

|

||||

func (l *FileRequestLogger) LogStreamingRequest(url, method string, headers map[string][]string, body []byte) (StreamingLogWriter, error) {

|

||||

func (l *FileRequestLogger) LogStreamingRequest(url, method string, headers map[string][]string, body []byte, requestID string) (StreamingLogWriter, error) {

|

||||

if !l.enabled {

|

||||

return &NoOpStreamingLogWriter{}, nil

|

||||

}

|

||||

@@ -285,8 +289,8 @@ func (l *FileRequestLogger) LogStreamingRequest(url, method string, headers map[

|

||||

return nil, fmt.Errorf("failed to create logs directory: %w", err)

|

||||

}

|

||||

|

||||

// Generate filename

|

||||

filename := l.generateFilename(url)

|

||||

// Generate filename with request ID

|

||||

filename := l.generateFilename(url, requestID)

|

||||

filePath := filepath.Join(l.logsDir, filename)

|

||||

|

||||

requestHeaders := make(map[string][]string, len(headers))

|

||||

@@ -330,8 +334,8 @@ func (l *FileRequestLogger) LogStreamingRequest(url, method string, headers map[

|

||||

}

|

||||

|

||||

// generateErrorFilename creates a filename with an error prefix to differentiate forced error logs.

|

||||

func (l *FileRequestLogger) generateErrorFilename(url string) string {

|

||||

return fmt.Sprintf("error-%s", l.generateFilename(url))

|

||||

func (l *FileRequestLogger) generateErrorFilename(url string, requestID ...string) string {

|

||||

return fmt.Sprintf("error-%s", l.generateFilename(url, requestID...))

|

||||

}

|

||||

|

||||

// ensureLogsDir creates the logs directory if it doesn't exist.

|

||||

@@ -346,13 +350,15 @@ func (l *FileRequestLogger) ensureLogsDir() error {

|

||||

}

|

||||

|

||||

// generateFilename creates a sanitized filename from the URL path and current timestamp.

|

||||

// Format: v1-responses-2025-12-23T195811-a1b2c3d4.log

|

||||

//

|

||||

// Parameters:

|

||||

// - url: The request URL

|

||||

// - requestID: Optional request ID to include in filename

|

||||

//

|

||||

// Returns:

|

||||

// - string: A sanitized filename for the log file

|

||||

func (l *FileRequestLogger) generateFilename(url string) string {

|

||||

func (l *FileRequestLogger) generateFilename(url string, requestID ...string) string {

|

||||

// Extract path from URL

|

||||

path := url

|

||||

if strings.Contains(url, "?") {

|

||||

@@ -368,12 +374,18 @@ func (l *FileRequestLogger) generateFilename(url string) string {

|

||||

sanitized := l.sanitizeForFilename(path)

|

||||

|

||||

// Add timestamp

|

||||

timestamp := time.Now().Format("2006-01-02T150405-.000000000")

|

||||

timestamp = strings.Replace(timestamp, ".", "", -1)

|

||||

timestamp := time.Now().Format("2006-01-02T150405")

|

||||

|

||||

id := requestLogID.Add(1)

|

||||

// Use request ID if provided, otherwise use sequential ID

|

||||

var idPart string

|

||||

if len(requestID) > 0 && requestID[0] != "" {

|

||||

idPart = requestID[0]

|

||||

} else {

|

||||

id := requestLogID.Add(1)

|

||||

idPart = fmt.Sprintf("%d", id)

|

||||

}

|

||||

|

||||

return fmt.Sprintf("%s-%s-%d.log", sanitized, timestamp, id)

|

||||

return fmt.Sprintf("%s-%s-%s.log", sanitized, timestamp, idPart)

|

||||

}

|

||||

|

||||

// sanitizeForFilename replaces characters that are not safe for filenames.

|

||||

|

||||

61

internal/logging/requestid.go

Normal file


@@ -0,0 +1,61 @@

|

||||

package logging

|

||||

|

||||

import (

|

||||

"context"

|

||||

"crypto/rand"

|

||||

"encoding/hex"

|

||||

|

||||

"github.com/gin-gonic/gin"

|

||||

)

|

||||

|

||||

// requestIDKey is the context key for storing/retrieving request IDs.

|

||||

type requestIDKey struct{}

|

||||

|

||||

// ginRequestIDKey is the Gin context key for request IDs.

|

||||

const ginRequestIDKey = "__request_id__"

|

||||

|

||||

// GenerateRequestID creates a new 8-character hex request ID.

|

||||

func GenerateRequestID() string {

|

||||

b := make([]byte, 4)

|

||||

if _, err := rand.Read(b); err != nil {

|

||||

return "00000000"

|

||||

}

|

||||

return hex.EncodeToString(b)

|

||||

}

|

||||

|

||||

// WithRequestID returns a new context with the request ID attached.

|

||||

func WithRequestID(ctx context.Context, requestID string) context.Context {

|

||||

return context.WithValue(ctx, requestIDKey{}, requestID)

|

||||

}

|

||||

|

||||

// GetRequestID retrieves the request ID from the context.

|

||||

// Returns empty string if not found.

|

||||

func GetRequestID(ctx context.Context) string {

|

||||

if ctx == nil {

|

||||

return ""

|

||||

}

|

||||

if id, ok := ctx.Value(requestIDKey{}).(string); ok {

|

||||

return id

|

||||

}

|

||||

return ""

|

||||

}

|

||||

|

||||

// SetGinRequestID stores the request ID in the Gin context.

|

||||

func SetGinRequestID(c *gin.Context, requestID string) {

|

||||

if c != nil {

|

||||

c.Set(ginRequestIDKey, requestID)

|

||||

}

|

||||

}

|

||||

|

||||

// GetGinRequestID retrieves the request ID from the Gin context.

|

||||

func GetGinRequestID(c *gin.Context) string {

|

||||

if c == nil {

|

||||

return ""

|

||||

}

|

||||

if id, exists := c.Get(ginRequestIDKey); exists {

|

||||

if s, ok := id.(string); ok {

|

||||

return s

|

||||

}

|

||||

}

|

||||

return ""

|

||||

}

|

||||

@@ -727,6 +727,7 @@ func GetIFlowModels() []*ModelInfo {

|

||||

{ID: "qwen3-max-preview", DisplayName: "Qwen3-Max-Preview", Description: "Qwen3 Max preview build", Created: 1757030400},

|

||||

{ID: "kimi-k2-0905", DisplayName: "Kimi-K2-Instruct-0905", Description: "Moonshot Kimi K2 instruct 0905", Created: 1757030400},

|

||||

{ID: "glm-4.6", DisplayName: "GLM-4.6", Description: "Zhipu GLM 4.6 general model", Created: 1759190400, Thinking: iFlowThinkingSupport},

|

||||

{ID: "glm-4.7", DisplayName: "GLM-4.7", Description: "Zhipu GLM 4.7 general model", Created: 1766448000, Thinking: iFlowThinkingSupport},

|

||||

{ID: "kimi-k2", DisplayName: "Kimi-K2", Description: "Moonshot Kimi K2 general model", Created: 1752192000},

|

||||

{ID: "kimi-k2-thinking", DisplayName: "Kimi-K2-Thinking", Description: "Moonshot Kimi K2 thinking model", Created: 1762387200},

|

||||

{ID: "deepseek-v3.2-chat", DisplayName: "DeepSeek-V3.2", Description: "DeepSeek V3.2 Chat", Created: 1764576000},

|

||||

@@ -740,6 +741,7 @@ func GetIFlowModels() []*ModelInfo {

|

||||

{ID: "qwen3-235b-a22b-instruct", DisplayName: "Qwen3-235B-A22B-Instruct", Description: "Qwen3 235B A22B Instruct", Created: 1753401600},

|

||||

{ID: "qwen3-235b", DisplayName: "Qwen3-235B-A22B", Description: "Qwen3 235B A22B", Created: 1753401600},

|

||||

{ID: "minimax-m2", DisplayName: "MiniMax-M2", Description: "MiniMax M2", Created: 1758672000},

|

||||

{ID: "minimax-m2.1", DisplayName: "MiniMax-M2.1", Description: "MiniMax M2.1", Created: 1766448000},

|

||||

}

|

||||

models := make([]*ModelInfo, 0, len(entries))

|

||||

for _, entry := range entries {

|

||||

|

||||

@@ -17,6 +17,7 @@ import (

|

||||

"net/url"

|

||||

"strconv"

|

||||

"strings"

|

||||

"sync"

|

||||

"time"

|

||||

|

||||

"github.com/google/uuid"

|

||||

@@ -41,12 +42,15 @@ const (

|

||||

antigravityModelsPath = "/v1internal:fetchAvailableModels"

|

||||

antigravityClientID = "1071006060591-tmhssin2h21lcre235vtolojh4g403ep.apps.googleusercontent.com"

|

||||

antigravityClientSecret = "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"

|

||||

defaultAntigravityAgent = "antigravity/1.11.5 windows/amd64"

|

||||

defaultAntigravityAgent = "antigravity/1.104.0 darwin/arm64"

|

||||

antigravityAuthType = "antigravity"

|

||||

refreshSkew = 3000 * time.Second

|

||||

)

|

||||

|

||||

var randSource = rand.New(rand.NewSource(time.Now().UnixNano()))

|

||||

var (

|

||||

randSource = rand.New(rand.NewSource(time.Now().UnixNano()))

|

||||

randSourceMutex sync.Mutex

|

||||

)

|

||||

|

||||

// AntigravityExecutor proxies requests to the antigravity upstream.

|

||||

type AntigravityExecutor struct {

|

||||

@@ -1224,7 +1228,9 @@ func generateRequestID() string {

|

||||

}

|

||||

|

||||

func generateSessionID() string {

|

||||

randSourceMutex.Lock()

|

||||

n := randSource.Int63n(9_000_000_000_000_000_000)

|

||||

randSourceMutex.Unlock()

|

||||

return "-" + strconv.FormatInt(n, 10)

|

||||

}

|

||||

|

||||

@@ -1248,8 +1254,10 @@ func generateStableSessionID(payload []byte) string {

|

||||

func generateProjectID() string {

|

||||

adjectives := []string{"useful", "bright", "swift", "calm", "bold"}

|

||||

nouns := []string{"fuze", "wave", "spark", "flow", "core"}

|

||||

randSourceMutex.Lock()

|

||||

adj := adjectives[randSource.Intn(len(adjectives))]

|

||||

noun := nouns[randSource.Intn(len(nouns))]

|

||||

randSourceMutex.Unlock()

|

||||

randomPart := strings.ToLower(uuid.NewString())[:5]

|

||||

return adj + "-" + noun + "-" + randomPart

|

||||

}

|

||||

|

||||

@@ -275,6 +275,20 @@ func parseClaudeStreamUsage(line []byte) (usage.Detail, bool) {

|

||||

return detail, true

|

||||

}

|

||||

|

||||

func parseGeminiFamilyUsageDetail(node gjson.Result) usage.Detail {

|

||||

detail := usage.Detail{

|

||||

InputTokens: node.Get("promptTokenCount").Int(),

|

||||

OutputTokens: node.Get("candidatesTokenCount").Int(),

|

||||

ReasoningTokens: node.Get("thoughtsTokenCount").Int(),

|

||||

TotalTokens: node.Get("totalTokenCount").Int(),

|

||||

CachedTokens: node.Get("cachedContentTokenCount").Int(),

|

||||

}

|

||||

if detail.TotalTokens == 0 {

|

||||

detail.TotalTokens = detail.InputTokens + detail.OutputTokens + detail.ReasoningTokens

|

||||

}

|

||||

return detail

|

||||

}

|

||||

|

||||

func parseGeminiCLIUsage(data []byte) usage.Detail {

|

||||

usageNode := gjson.ParseBytes(data)

|

||||

node := usageNode.Get("response.usageMetadata")

|

||||

@@ -284,16 +298,7 @@ func parseGeminiCLIUsage(data []byte) usage.Detail {

|

||||

if !node.Exists() {

|

||||

return usage.Detail{}

|

||||

}

|

||||

detail := usage.Detail{

|

||||

InputTokens: node.Get("promptTokenCount").Int(),

|

||||

OutputTokens: node.Get("candidatesTokenCount").Int(),

|

||||

ReasoningTokens: node.Get("thoughtsTokenCount").Int(),

|

||||

TotalTokens: node.Get("totalTokenCount").Int(),

|

||||

}

|

||||

if detail.TotalTokens == 0 {

|

||||

detail.TotalTokens = detail.InputTokens + detail.OutputTokens + detail.ReasoningTokens

|

||||

}

|

||||

return detail

|

||||

return parseGeminiFamilyUsageDetail(node)

|

||||

}

|

||||

|

||||

func parseGeminiUsage(data []byte) usage.Detail {

|

||||

@@ -305,16 +310,7 @@ func parseGeminiUsage(data []byte) usage.Detail {

|

||||

if !node.Exists() {

|

||||

return usage.Detail{}

|

||||

}

|

||||

detail := usage.Detail{

|

||||

InputTokens: node.Get("promptTokenCount").Int(),

|

||||

OutputTokens: node.Get("candidatesTokenCount").Int(),

|

||||

ReasoningTokens: node.Get("thoughtsTokenCount").Int(),

|

||||

TotalTokens: node.Get("totalTokenCount").Int(),

|

||||

}

|

||||

if detail.TotalTokens == 0 {

|

||||

detail.TotalTokens = detail.InputTokens + detail.OutputTokens + detail.ReasoningTokens

|

||||

}

|

||||

return detail

|

||||

return parseGeminiFamilyUsageDetail(node)

|

||||

}

|

||||

|

||||

func parseGeminiStreamUsage(line []byte) (usage.Detail, bool) {

|

||||

@@ -329,16 +325,7 @@ func parseGeminiStreamUsage(line []byte) (usage.Detail, bool) {

|

||||

if !node.Exists() {

|

||||

return usage.Detail{}, false

|

||||

}

|

||||

detail := usage.Detail{

|

||||

InputTokens: node.Get("promptTokenCount").Int(),

|

||||

OutputTokens: node.Get("candidatesTokenCount").Int(),

|

||||

ReasoningTokens: node.Get("thoughtsTokenCount").Int(),

|

||||

TotalTokens: node.Get("totalTokenCount").Int(),

|

||||

}

|

||||

if detail.TotalTokens == 0 {

|

||||

detail.TotalTokens = detail.InputTokens + detail.OutputTokens + detail.ReasoningTokens

|

||||

}

|

||||

return detail, true

|

||||

return parseGeminiFamilyUsageDetail(node), true

|

||||

}

|

||||

|

||||

func parseGeminiCLIStreamUsage(line []byte) (usage.Detail, bool) {

|

||||

@@ -353,16 +340,7 @@ func parseGeminiCLIStreamUsage(line []byte) (usage.Detail, bool) {

|

||||

if !node.Exists() {

|

||||

return usage.Detail{}, false

|

||||

}

|

||||

detail := usage.Detail{

|

||||

InputTokens: node.Get("promptTokenCount").Int(),

|

||||

OutputTokens: node.Get("candidatesTokenCount").Int(),

|

||||

ReasoningTokens: node.Get("thoughtsTokenCount").Int(),

|

||||

TotalTokens: node.Get("totalTokenCount").Int(),

|

||||

}

|

||||

if detail.TotalTokens == 0 {

|

||||

detail.TotalTokens = detail.InputTokens + detail.OutputTokens + detail.ReasoningTokens

|

||||

}

|

||||

return detail, true

|

||||

return parseGeminiFamilyUsageDetail(node), true

|

||||

}

|

||||

|

||||

func parseAntigravityUsage(data []byte) usage.Detail {

|

||||

@@ -377,16 +355,7 @@ func parseAntigravityUsage(data []byte) usage.Detail {

|

||||

if !node.Exists() {

|

||||

return usage.Detail{}

|

||||

}

|

||||

detail := usage.Detail{

|

||||

InputTokens: node.Get("promptTokenCount").Int(),

|

||||

OutputTokens: node.Get("candidatesTokenCount").Int(),

|

||||

ReasoningTokens: node.Get("thoughtsTokenCount").Int(),

|

||||

TotalTokens: node.Get("totalTokenCount").Int(),

|

||||

}

|

||||

if detail.TotalTokens == 0 {

|

||||

detail.TotalTokens = detail.InputTokens + detail.OutputTokens + detail.ReasoningTokens

|

||||

}

|

||||

return detail

|

||||

return parseGeminiFamilyUsageDetail(node)

|

||||

}

|

||||

|

||||

func parseAntigravityStreamUsage(line []byte) (usage.Detail, bool) {

|

||||

@@ -404,16 +373,7 @@ func parseAntigravityStreamUsage(line []byte) (usage.Detail, bool) {

|

||||

if !node.Exists() {

|

||||

return usage.Detail{}, false

|

||||

}

|

||||

detail := usage.Detail{

|

||||

InputTokens: node.Get("promptTokenCount").Int(),

|

||||

OutputTokens: node.Get("candidatesTokenCount").Int(),

|

||||

ReasoningTokens: node.Get("thoughtsTokenCount").Int(),

|

||||

TotalTokens: node.Get("totalTokenCount").Int(),

|

||||

}

|

||||

if detail.TotalTokens == 0 {

|

||||

detail.TotalTokens = detail.InputTokens + detail.OutputTokens + detail.ReasoningTokens

|

||||

}

|

||||

return detail, true

|

||||

return parseGeminiFamilyUsageDetail(node), true

|

||||

}

|

||||

|

||||

var stopChunkWithoutUsage sync.Map
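The refactor above replaces six copies of the same field mapping with `parseGeminiFamilyUsageDetail` and adds `cachedContentTokenCount` in one place. The sketch below shows the same mapping and total-token fallback using `encoding/json` from the standard library instead of `gjson`, purely to stay dependency-free; the `usageDetail` and `usageMetadata` types are hypothetical stand-ins for `usage.Detail` and the upstream JSON node.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// usageDetail is a hypothetical stand-in for usage.Detail.
type usageDetail struct {
	InputTokens     int64
	OutputTokens    int64
	ReasoningTokens int64
	TotalTokens     int64
	CachedTokens    int64
}

// usageMetadata mirrors the usageMetadata fields read in the diff.
type usageMetadata struct {
	PromptTokenCount        int64 `json:"promptTokenCount"`
	CandidatesTokenCount    int64 `json:"candidatesTokenCount"`
	ThoughtsTokenCount      int64 `json:"thoughtsTokenCount"`
	TotalTokenCount         int64 `json:"totalTokenCount"`
	CachedContentTokenCount int64 `json:"cachedContentTokenCount"`
}

// parseUsage maps the metadata onto usageDetail and, when the upstream
// omits the total, derives it from input + output + reasoning tokens.
func parseUsage(raw []byte) (usageDetail, error) {
	var m usageMetadata
	if err := json.Unmarshal(raw, &m); err != nil {
		return usageDetail{}, err
	}
	d := usageDetail{
		InputTokens:     m.PromptTokenCount,
		OutputTokens:    m.CandidatesTokenCount,
		ReasoningTokens: m.ThoughtsTokenCount,
		TotalTokens:     m.TotalTokenCount,
		CachedTokens:    m.CachedContentTokenCount,
	}
	if d.TotalTokens == 0 {
		d.TotalTokens = d.InputTokens + d.OutputTokens + d.ReasoningTokens
	}
	return d, nil
}

func main() {
	d, err := parseUsage([]byte(`{"promptTokenCount":10,"candidatesTokenCount":5,"thoughtsTokenCount":2}`))
	if err != nil {
		panic(err)
	}
	fmt.Printf("%+v\n", d)
}
```

Note that cached tokens are not added to the fallback total, matching the helper in the diff.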

|

||||

|

||||

@@ -35,6 +35,7 @@ type Params struct {

|

||||

CandidatesTokenCount int64 // Cached candidate token count from usage metadata

|

||||

ThoughtsTokenCount int64 // Cached thinking token count from usage metadata

|

||||

TotalTokenCount int64 // Cached total token count from usage metadata

|

||||

CachedTokenCount int64 // Cached content token count (indicates prompt caching)

|

||||

HasSentFinalEvents bool // Indicates if final content/message events have been sent

|

||||

HasToolUse bool // Indicates if tool use was observed in the stream

|

||||

HasContent bool // Tracks whether any content (text, thinking, or tool use) has been output

|

||||

@@ -270,7 +271,8 @@ func ConvertAntigravityResponseToClaude(_ context.Context, _ string, originalReq

|

||||

|

||||

if usageResult := gjson.GetBytes(rawJSON, "response.usageMetadata"); usageResult.Exists() {

|

||||

params.HasUsageMetadata = true

|

||||

params.PromptTokenCount = usageResult.Get("promptTokenCount").Int()

|

||||

params.CachedTokenCount = usageResult.Get("cachedContentTokenCount").Int()

|

||||

params.PromptTokenCount = usageResult.Get("promptTokenCount").Int() - params.CachedTokenCount

|

||||

params.CandidatesTokenCount = usageResult.Get("candidatesTokenCount").Int()

|

||||

params.ThoughtsTokenCount = usageResult.Get("thoughtsTokenCount").Int()

|

||||

params.TotalTokenCount = usageResult.Get("totalTokenCount").Int()

|

||||

@@ -322,6 +324,14 @@ func appendFinalEvents(params *Params, output *string, force bool) {

|

||||

*output = *output + "event: message_delta\n"

|

||||

*output = *output + "data: "

|

||||

delta := fmt.Sprintf(`{"type":"message_delta","delta":{"stop_reason":"%s","stop_sequence":null},"usage":{"input_tokens":%d,"output_tokens":%d}}`, stopReason, params.PromptTokenCount, usageOutputTokens)

|

||||

// Add cache_read_input_tokens if cached tokens are present (indicates prompt caching is working)

|

||||

if params.CachedTokenCount > 0 {

|

||||

var err error

|

||||

delta, err = sjson.Set(delta, "usage.cache_read_input_tokens", params.CachedTokenCount)

|

||||

if err != nil {

|

||||

log.Warnf("antigravity claude response: failed to set cache_read_input_tokens: %v", err)

|

||||

}

|

||||

}

|

||||

*output = *output + delta + "\n\n\n"

|

||||

|

||||

params.HasSentFinalEvents = true

|

||||

@@ -361,6 +371,7 @@ func ConvertAntigravityResponseToClaudeNonStream(_ context.Context, _ string, or

|

||||

candidateTokens := root.Get("response.usageMetadata.candidatesTokenCount").Int()

|

||||

thoughtTokens := root.Get("response.usageMetadata.thoughtsTokenCount").Int()

|

||||

totalTokens := root.Get("response.usageMetadata.totalTokenCount").Int()

|

||||

cachedTokens := root.Get("response.usageMetadata.cachedContentTokenCount").Int()

|

||||

outputTokens := candidateTokens + thoughtTokens

|

||||

if outputTokens == 0 && totalTokens > 0 {

|

||||

outputTokens = totalTokens - promptTokens

|

||||

@@ -374,6 +385,14 @@ func ConvertAntigravityResponseToClaudeNonStream(_ context.Context, _ string, or

|

||||

responseJSON, _ = sjson.Set(responseJSON, "model", root.Get("response.modelVersion").String())

|

||||

responseJSON, _ = sjson.Set(responseJSON, "usage.input_tokens", promptTokens)

|

||||

responseJSON, _ = sjson.Set(responseJSON, "usage.output_tokens", outputTokens)

|

||||

// Add cache_read_input_tokens if cached tokens are present (indicates prompt caching is working)

|

||||

if cachedTokens > 0 {

|

||||

var err error

|

||||

responseJSON, err = sjson.Set(responseJSON, "usage.cache_read_input_tokens", cachedTokens)

|

||||

if err != nil {

|

||||

log.Warnf("antigravity claude response: failed to set cache_read_input_tokens: %v", err)

|

||||

}

|

||||

}

|

||||

|

||||

contentArrayInitialized := false

|

||||

ensureContentArray := func() {

|

||||

|

||||

@@ -249,8 +249,28 @@ func ConvertOpenAIRequestToAntigravity(modelName string, inputRawJSON []byte, _

|

||||

p := 0

|

||||

if content.Type == gjson.String {

|

||||

node, _ = sjson.SetBytes(node, "parts.-1.text", content.String())

|

||||

out, _ = sjson.SetRawBytes(out, "request.contents.-1", node)

|

||||

p++

|

||||

} else if content.IsArray() {

|

||||

// Assistant multimodal content (e.g. text + image) -> single model content with parts

|

||||

for _, item := range content.Array() {

|

||||

switch item.Get("type").String() {

|

||||

case "text":

|

||||

p++

|

||||

case "image_url":

|

||||

// If the assistant returned an inline data URL, preserve it for history fidelity.

|

||||

imageURL := item.Get("image_url.url").String()

|

||||

if len(imageURL) > 5 { // expect data:...

|

||||

pieces := strings.SplitN(imageURL[5:], ";", 2)

|

||||

if len(pieces) == 2 && len(pieces[1]) > 7 {

|

||||

mime := pieces[0]

|

||||

data := pieces[1][7:]

|

||||

node, _ = sjson.SetBytes(node, "parts."+itoa(p)+".inlineData.mime_type", mime)

|

||||

node, _ = sjson.SetBytes(node, "parts."+itoa(p)+".inlineData.data", data)

|

||||

p++

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

// Tool calls -> single model content with functionCall parts
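The `image_url` branch above splits an inline data URL by fixed offsets: `imageURL[5:]` skips `data:` and `pieces[1][7:]` skips `base64,`. A hedged sketch of the same split is shown below as a standalone helper. `parseDataURL` is a hypothetical name, and unlike the diff it checks the two prefixes explicitly rather than trusting the offsets.

```go
package main

import (
	"fmt"
	"strings"
)

// parseDataURL splits "data:<mime>;base64,<payload>" into its MIME type
// and base64 payload. It reports ok=false for anything else.
func parseDataURL(u string) (mimeType, data string, ok bool) {
	const scheme = "data:"
	const marker = "base64,"
	if !strings.HasPrefix(u, scheme) {
		return "", "", false
	}
	pieces := strings.SplitN(u[len(scheme):], ";", 2)
	if len(pieces) != 2 || !strings.HasPrefix(pieces[1], marker) {
		return "", "", false
	}
	payload := pieces[1][len(marker):]
	if payload == "" {
		return "", "", false
	}
	return pieces[0], payload, true
}

func main() {
	mimeType, data, ok := parseDataURL("data:image/png;base64,iVBORw0KGgo=")
	fmt.Println(mimeType, data, ok)

	_, _, ok = parseDataURL("https://example.com/cat.png")
	fmt.Println(ok)
}
```

A remote `https://` image URL is rejected here; whether the converter should fetch or forward such URLs is outside what this diff shows.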

|

||||

@@ -305,6 +325,8 @@ func ConvertOpenAIRequestToAntigravity(modelName string, inputRawJSON []byte, _

|

||||

if pp > 0 {

|

||||

out, _ = sjson.SetRawBytes(out, "request.contents.-1", toolNode)

|

||||

}

|

||||

} else {

|

||||

out, _ = sjson.SetRawBytes(out, "request.contents.-1", node)

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

@@ -13,6 +13,8 @@ import (

|

||||

"sync/atomic"

|

||||

"time"

|

||||

|

||||

log "github.com/sirupsen/logrus"

|

||||

|

||||

. "github.com/router-for-me/CLIProxyAPI/v6/internal/translator/gemini/openai/chat-completions"

|

||||

"github.com/tidwall/gjson"

|

||||

"github.com/tidwall/sjson"

|

||||

@@ -85,18 +87,27 @@ func ConvertAntigravityResponseToOpenAI(_ context.Context, _ string, originalReq

|

||||

|

||||

// Extract and set usage metadata (token counts).

|

||||

if usageResult := gjson.GetBytes(rawJSON, "response.usageMetadata"); usageResult.Exists() {

|

||||

cachedTokenCount := usageResult.Get("cachedContentTokenCount").Int()

|

||||

if candidatesTokenCountResult := usageResult.Get("candidatesTokenCount"); candidatesTokenCountResult.Exists() {

|

||||

template, _ = sjson.Set(template, "usage.completion_tokens", candidatesTokenCountResult.Int())

|

||||

}

|

||||

if totalTokenCountResult := usageResult.Get("totalTokenCount"); totalTokenCountResult.Exists() {

|

||||

template, _ = sjson.Set(template, "usage.total_tokens", totalTokenCountResult.Int())

|

||||

}

|

||||

promptTokenCount := usageResult.Get("promptTokenCount").Int()

|

||||

promptTokenCount := usageResult.Get("promptTokenCount").Int() - cachedTokenCount

|

||||

thoughtsTokenCount := usageResult.Get("thoughtsTokenCount").Int()

|

||||

template, _ = sjson.Set(template, "usage.prompt_tokens", promptTokenCount+thoughtsTokenCount)

|

||||

if thoughtsTokenCount > 0 {

|

||||

template, _ = sjson.Set(template, "usage.completion_tokens_details.reasoning_tokens", thoughtsTokenCount)

|

||||

}

|

||||

// Include cached token count if present (indicates prompt caching is working)

|

||||

if cachedTokenCount > 0 {

|

||||

var err error

|

||||

template, err = sjson.Set(template, "usage.prompt_tokens_details.cached_tokens", cachedTokenCount)

|

||||

if err != nil {

|

||||

log.Warnf("antigravity openai response: failed to set cached_tokens: %v", err)

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

// Process the main content part of the response.
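To make the cached-token arithmetic in the hunk above concrete: the upstream `promptTokenCount` includes cached tokens, so the converter subtracts `cachedContentTokenCount` before reporting `prompt_tokens` and surfaces the cached portion under `prompt_tokens_details.cached_tokens`. The sketch below reproduces that calculation with plain structs and `encoding/json` instead of `sjson`; `buildUsage` and both struct types are hypothetical names, and the sample numbers are made up.

```go
package main

import (
	"encoding/json"
	"fmt"
)

type promptTokensDetails struct {
	CachedTokens int64 `json:"cached_tokens"`
}

type openAIUsage struct {
	PromptTokens        int64                `json:"prompt_tokens"`
	CompletionTokens    int64                `json:"completion_tokens"`
	TotalTokens         int64                `json:"total_tokens"`
	PromptTokensDetails *promptTokensDetails `json:"prompt_tokens_details,omitempty"`
}

// buildUsage mirrors the diff: prompt_tokens = (prompt - cached) + thoughts,
// and cached_tokens is only emitted when it is positive.
func buildUsage(prompt, cached, candidates, thoughts, total int64) openAIUsage {
	u := openAIUsage{
		PromptTokens:     prompt - cached + thoughts,
		CompletionTokens: candidates,
		TotalTokens:      total,
	}
	if cached > 0 {
		u.PromptTokensDetails = &promptTokensDetails{CachedTokens: cached}
	}
	return u
}

func main() {
	// 1000 prompt tokens, 800 of them served from cache, 20 thinking tokens.
	u := buildUsage(1000, 800, 50, 20, 1070)
	out, err := json.Marshal(u)
	if err != nil {
		panic(err)
	}
	fmt.Println(string(out))
}
```

With these inputs `prompt_tokens` comes out as 1000 - 800 + 20 = 220, while `total_tokens` is passed through from the upstream unchanged.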

|

||||

@@ -170,12 +181,14 @@ func ConvertAntigravityResponseToOpenAI(_ context.Context, _ string, originalReq

|

||||

mimeType = "image/png"

|

||||

}

|

||||

imageURL := fmt.Sprintf("data:%s;base64,%s", mimeType, data)

|

||||